
HMSDK Design

Important

Please read HMSDK Overview instead because the current document is deprecated and no longer valid.

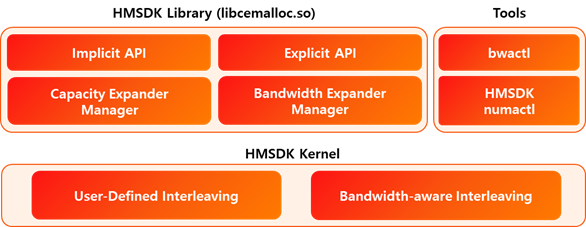

HMSDK consists of APIs, libraries, kernel, and tools that make it easy to use heterogeneous memory with different access latency/bandwidth.

The goal of HMSDK is to extend memory capacity and maximize system memory bandwidth with CXL memory.

| Capacity Expander | Bandwidth Expander |
|---|---|
| Extends the memory capacity with CXL memory.<br>- Allocating memory to specified CXL memory.<br>- Allocating memory to both Host and CXL memory according to the user-defined ratio. | Maximizes the memory bandwidth with CXL memory.<br>- Allocating memory to both Host and CXL memory with interleaving policy according to the bandwidth ratio. |

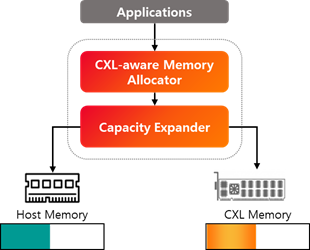

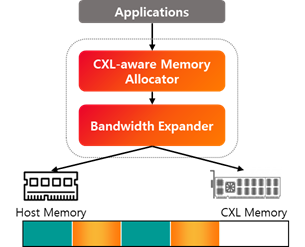

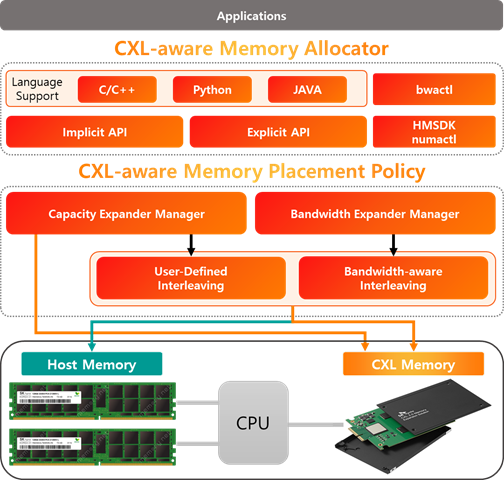

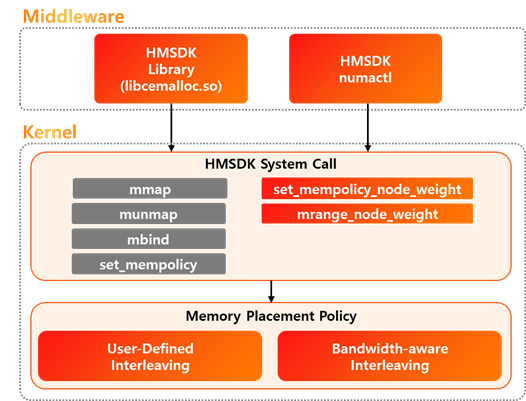

HMSDK consists of CXL-aware Memory Allocator and CXL-aware Memory Placement Policy as shown below.

CXL-aware Memory Allocator

- Language Support: C/C++, Python, Java

- Implicit API: APIs which do not need any modification of applications to use CXL memory.

- Explicit API: APIs in which users can selectively use CXL memory according to their needs.

- HMSDK numactl: Command Line Interface to use CXL memory.

CXL-aware Memory Placement Policy

- Capacity Expander Manager: Extends the memory capacity with CXL memory.

- Bandwidth Expander Manager: Maximizes the memory bandwidth with CXL memory.

- User-Defined Interleaving: Allocating memory to both Host and CXL memory based on the user-defined ratio.

- Bandwidth-aware Interleaving: Allocating memory to both Host and CXL memory with interleaving policy based on the bandwidth ratio.

HMSDK consists of a memory allocator library (libcemalloc.so), a customized numactl, bwactl for automatic bandwidth ratio calculation, and a Linux kernel with our memory allocation policy.

- HMSDK Library (libcemalloc.so): Provides custom memory allocators.

- numactl: Customized `numactl` command line tool to use CXL memory.

- bwactl: Automatic bandwidth ratio calculation tool (`bwactl.py`).

- HMSDK Kernel: Provides two new memory allocation policies in addition to the Linux kernel's existing memory allocation policies.

  - User-Defined Interleaving

  - Bandwidth-aware Interleaving

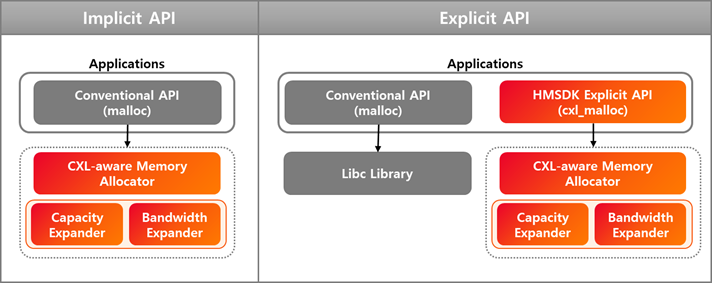

CXL-aware memory allocator provides two different types of APIs: 1) Implicit API and 2) Explicit API, depending on the usage of applications with CXL memory.

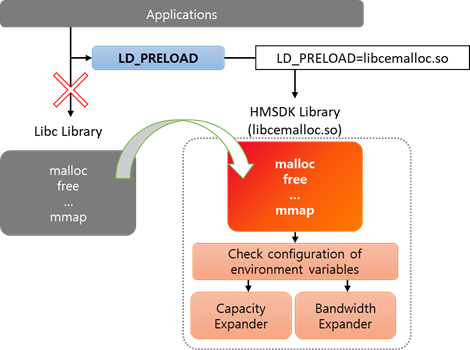

Recent data center applications have huge and complex architectures, so CXL memory would be difficult to adopt in real systems if it required many changes to application source code. With the implicit API, the application uses the memory allocation API of the HMSDK library without any source code modification.

- The `LD_PRELOAD` environment variable enables the application to use the HMSDK library (libcemalloc.so).

- You can use HMSDK's Capacity Expander and Bandwidth Expander features with environment variables.
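As an illustration of the preload mechanism, the following is a minimal sketch of how a launcher script might build the environment for an unmodified application. The helper name `preload_env` and the library path are hypothetical, and the HMSDK-specific environment variables are intentionally left out because this document does not name them.

```python
import os


def preload_env(library_path, base_env=None):
    """Return a copy of the environment with library_path first in LD_PRELOAD.

    library_path is a hypothetical location of libcemalloc.so; adjust it to
    wherever the library is installed on your system.
    """
    env = dict(os.environ if base_env is None else base_env)
    existing = env.get("LD_PRELOAD", "")
    # LD_PRELOAD accepts a colon-separated list; keep any existing entries.
    env["LD_PRELOAD"] = f"{library_path}:{existing}" if existing else library_path
    return env


# Example (not executed here): launch an unmodified binary with the library preloaded.
#   import subprocess
#   subprocess.run(["./my_app"], env=preload_env("/path/to/libcemalloc.so"))
```

The application binary itself is unchanged; only the process environment differs, which is what makes the API implicit.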

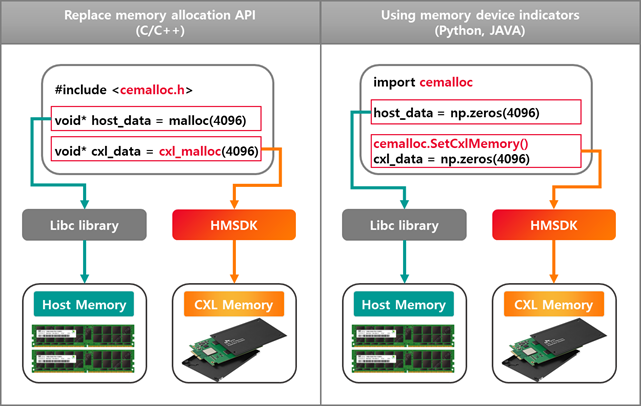

It should also be possible to selectively allocate to CXL memory according to the performance sensitivity of your data. Because there is a difference in performance between host memory and CXL memory, the overall system performance is also affected depending on where the data is allocated. Explicit API provides APIs for allocating to CXL memory, so you can optionally use CXL memory.

- Replace memory allocation API: For C/C++, you can replace the existing memory allocation APIs with the new memory allocation APIs of HMSDK.

- Using memory device indicator: For Python and Java, which do not explicitly invoke memory allocation functions, use the indicator APIs.

For efficient use of heterogeneous memory, the HMSDK Linux kernel provides two new memory allocation policies in addition to the Linux kernel's existing memory allocation policies (Default, Preferred, Bind, Interleaving, Preferred Many):

- User-Defined Interleaving

- Bandwidth-aware Interleaving

The two new policies above can be used through four existing system calls and two new system calls. The new system calls are:

- `set_mempolicy_node_weight`: Sets the interleaving weight of the calling thread to the user-defined (User-Defined) or predefined optimized (Bandwidth-aware) value.

- `mrange_node_weight`: Sets the interleaving weight of the memory range to the user-defined (User-Defined) or predefined optimized (Bandwidth-aware) value.

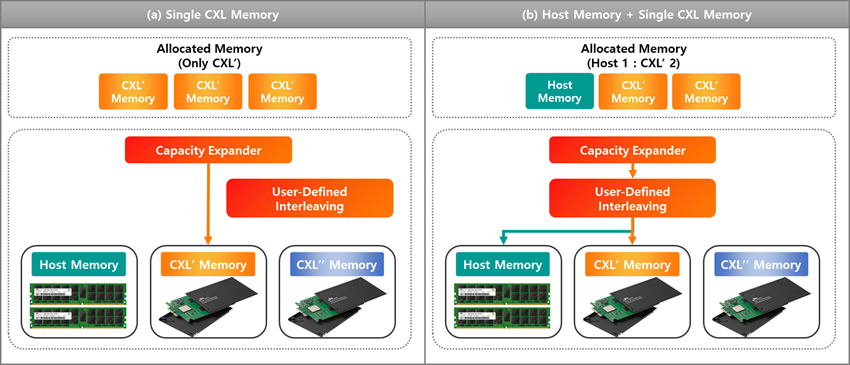

HMSDK provides memory placement policies that allow extending the memory capacity and maximizing the memory bandwidth.

Capacity Expander allows you to allocate selectively to CXL memory, or to both Host and CXL memory based on a user-defined ratio.

Single CXL Memory:

- (a) Allocated to CXL’ Memory only.

- (b) Allocated to Host Memory and CXL’ Memory in a 1:2 ratio.

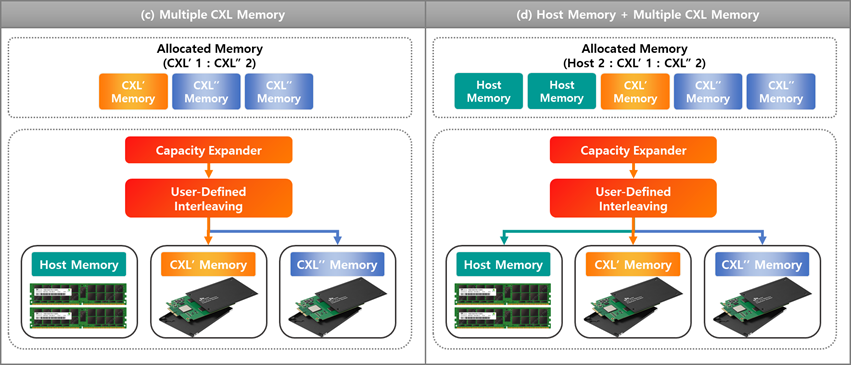

Multiple CXL Memory:

- (c) Allocated to CXL’ Memory and CXL’’ Memory in a 1:2 ratio.

- (d) Allocated to Host Memory, CXL’ memory, and CXL’’ Memory in a 2:1:2 ratio.

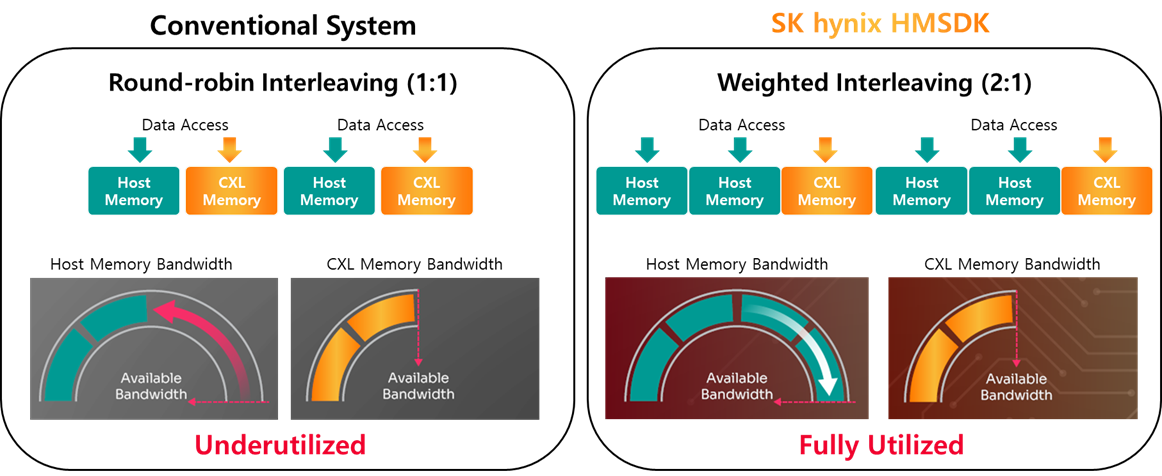
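The ratios in cases (b) through (d) can be pictured as a weighted round-robin over memory nodes. The sketch below is a user-space simulation only, not the kernel implementation: the function names, the node labels, and the order in which nodes are visited within a round are assumptions made for illustration.

```python
from collections import Counter
from itertools import cycle, islice


def weighted_interleave(weights):
    """Return an endless iterator of node names, each repeated `weight` times per round."""
    pattern = [node for node, weight in weights.items() for _ in range(weight)]
    if not pattern:
        raise ValueError("at least one node needs a positive weight")
    return cycle(pattern)


def place_pages(num_pages, weights):
    """Count how many of num_pages land on each node under the given weights."""
    return Counter(islice(weighted_interleave(weights), num_pages))


# Case (d): Host, CXL' and CXL'' in a 2:1:2 ratio (node labels are illustrative).
placement = place_pages(10, {"host": 2, "cxl1": 1, "cxl2": 2})
print(placement)
```

With 10 pages and a 2:1:2 ratio, the simulation places 4 pages on the host node, 2 on the first CXL node, and 4 on the second.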

Bandwidth Expander maximizes system memory bandwidth through HMSDK's new memory policy, named Bandwidth-aware Interleaving. Traditional NUMA memory interleaving (Conventional Interleaving) allocates pages to each memory at the same rate in a round-robin manner. However, performance differences between memory devices can limit the utilization of the faster memory, which can degrade overall system performance.

Bandwidth-aware Interleaving is a memory allocation policy that increases the utilization of the fast memory (usually the host memory) by determining the page allocation ratio based on the bandwidth differences between the memories of each NUMA node. The picture below shows a case where host memory has twice the bandwidth of CXL memory. In this case, the combined bandwidth of the two memory types is maximized by allocating to host and CXL memory in a 2:1 ratio. This helps to improve system performance and has the same effect as adding memory channels.

- Conventional Interleaving: allocates pages equally across multiple NUMA nodes.

- BW-Aware Interleaving: allocates pages across multiple NUMA nodes according to bandwidth-aware weights.
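To see why a 2:1 split helps when host memory has twice the bandwidth of CXL memory, consider the simplified model below. It assumes all nodes are accessed in parallel and that a transfer finishes when the slowest node finishes; it ignores latency, contention, and access patterns. The function names and the relative bandwidth units are assumptions for illustration and do not describe how `bwactl.py` computes its ratio.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def bandwidth_weights(bandwidths):
    """Reduce integer per-node bandwidths to the smallest integer ratio."""
    divisor = reduce(gcd, bandwidths.values())
    return {node: bw // divisor for node, bw in bandwidths.items()}


def effective_bandwidth(bandwidths, weights):
    """Aggregate bandwidth when data is split by `weights` and read in parallel.

    Each node holds weights[node] / total of the data, so the time per unit of
    data is bounded by the node that takes longest to deliver its share.
    """
    total = sum(weights.values())
    slowest = max(Fraction(weights[node], total) / bandwidths[node] for node in bandwidths)
    return 1 / slowest


bandwidths = {"host": 2, "cxl": 1}  # relative units: host is twice as fast
conventional = effective_bandwidth(bandwidths, {"host": 1, "cxl": 1})
bw_aware = effective_bandwidth(bandwidths, bandwidth_weights(bandwidths))
print(conventional, bw_aware)
```

Under this model, the 1:1 split is limited to 2 units because the CXL node becomes the bottleneck, while the 2:1 split reaches 3 units, the sum of both bandwidths.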