Superplatform gives power back to developers in the age of AI: self-host your own AI platform!

The easiest way to run Superplatform is with Docker. Install Docker if you don't have it, then step into the repo root and run:

    docker compose up

This runs the platform in the foreground; it stops when you press Ctrl+C. To run it in the background instead:

    docker compose up -d

Now that Superplatform is running, you have a few options to interact with it.

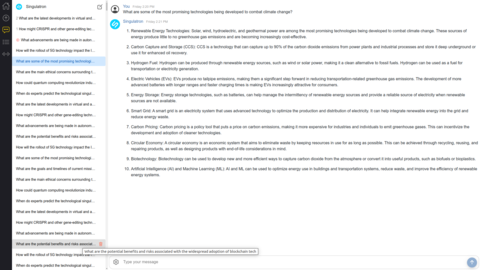

Go to http://127.0.0.1:3901, log in with username `singulatron` and password `changeme`, and start using it just like you would use ChatGPT.

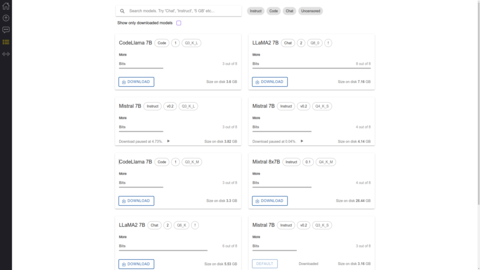

Click on the big "AI" button and download a model first. Don't worry, this model will be persisted across restarts (see volumes in the docker-compose.yaml).

For brevity, the example below assumes you have already downloaded a model through the UI. (That could also be done with the clients, but the example would be longer.)

Let's send a synchronous prompt from JS. In your project, run:

    npm i -s @superplatform/client

Make sure your package.json contains `"type": "module"`, then put the following snippet into index.js:

    import { UserSvcApi, PromptSvcApi, Configuration } from "@superplatform/client";

    async function testDrive() {
      // Log in with the default credentials to obtain a token.
      const userService = new UserSvcApi();
      const loginResponse = await userService.login({
        request: {
          slug: "singulatron",
          password: "changeme",
        },
      });

      // Pass the token to the prompt service as the API key.
      const promptSvc = new PromptSvcApi(
        new Configuration({
          apiKey: loginResponse.token?.token,
        })
      );

      // Send a synchronous prompt; the response carries the answer.
      const promptRsp = await promptSvc.addPrompt({
        request: {
          sync: true,
          prompt: "Is a cat an animal? Just answer with yes or no please.",
        },
      });

      console.log(promptRsp);
    }

    testDrive();

Then run:

    $ node index.js
    {
      answer: ' Yes, a cat is an animal.\n' +
        '\n' +
        'But if you meant to ask whether cats are domesticated animals or pets, then the answer is also yes. Cats belong to the Felidae family and are common household pets around the world. They are often kept for companionship and to control rodent populations.',
      prompt: undefined
    }

Depending on your system, it might take a while for the AI to respond. If it takes long, check the backend logs to see whether it is still processing. You should see something like this:

    superplatform-backend-1 | {"time":"2024-11-27T17:27:14.602762664Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":1,"totalResponses":1}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:15.602328634Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":4,"totalResponses":9}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:16.602428481Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":5,"totalResponses":17}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:17.602586968Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":6,"totalResponses":24}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:18.602583176Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":6,"totalResponses":31}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:19.602576641Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":6,"totalResponses":38}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:20.602284446Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":6,"totalResponses":46}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:21.602178149Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":6,"totalResponses":53}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:22.602470024Z","level":"DEBUG","msg":"LLM is streaming","promptId":"prom_e3SA9bJV5u","responsesPerSecond":6,"totalResponses":61}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:23.174054316Z","level":"INFO","msg":"Saving chat message","messageId":"msg_e3SARBJAZe"}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:23.175854857Z","level":"DEBUG","msg":"Event published","eventName":"chatMessageAdded"}
    superplatform-backend-1 | {"time":"2024-11-27T17:27:23.176260122Z","level":"DEBUG","msg":"Finished streaming LLM","error":"<nil>"}

Superplatform is a microservices platform that first came to mind back in 2013 when I was working for an Uber competitor called Hailo. I shelved the idea, thinking someone else would eventually build it. Now, with the AI boom and all the AI apps we’re about to roll out, I’ve realized I’ll have to build it myself since no one else has.
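If a prompt seems slow, the backend log lines shown earlier can also be summarized with a few lines of JS. This is only a sketch: `summarizeStreamingLogs` is a hypothetical helper, and it assumes every line has the `<container> | <JSON>` shape and the `msg`, `responsesPerSecond`, and `totalResponses` fields seen in the sample logs. The log format may change between versions.

```javascript
// Hypothetical helper (not part of Superplatform): summarizes log lines of the
// form "<container> | <JSON>", as in the sample backend output.
function summarizeStreamingLogs(lines) {
  const summary = { totalResponses: 0, peakResponsesPerSecond: 0, finished: false };

  for (const line of lines) {
    const sep = line.indexOf("|");
    if (sep === -1) continue; // no container prefix, ignore the line

    let entry;
    try {
      entry = JSON.parse(line.slice(sep + 1).trim());
    } catch {
      continue; // payload is not JSON, ignore the line
    }

    if (entry.msg === "LLM is streaming") {
      // Keep the highest values seen so far.
      summary.totalResponses = Math.max(summary.totalResponses, entry.totalResponses ?? 0);
      summary.peakResponsesPerSecond = Math.max(
        summary.peakResponsesPerSecond,
        entry.responsesPerSecond ?? 0
      );
    } else if (entry.msg === "Finished streaming LLM") {
      summary.finished = true;
    }
  }

  return summary;
}

// Example with two lines in the same shape as the sample logs.
console.log(
  summarizeStreamingLogs([
    'superplatform-backend-1 | {"level":"DEBUG","msg":"LLM is streaming","responsesPerSecond":4,"totalResponses":9}',
    'superplatform-backend-1 | {"level":"DEBUG","msg":"Finished streaming LLM","error":"<nil>"}',
  ])
);
```

Feed it the lines from wherever you capture the backend output; as long as `finished` is false and `totalResponses` keeps growing, the model is still working on the answer.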

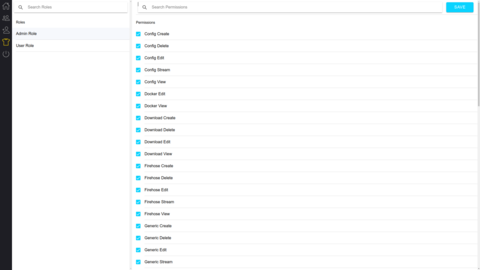

It's a server and ecosystem that enables you to self-host AI models, build apps that leverage those models in any language, and use a microservices-based communal backend designed to support a diverse range of projects.

- Run open-source AI models privately on your own infrastructure, ensuring that your data and operations remain fully under your control.

- Build backendless applications by using Superplatform as a database and AI prompting API. Like Firebase, but with a focus on AI.

- Build your own backend services around Superplatform. Run these services inside or outside Superplatform.

- Superplatform is designed to make deploying third-party AI applications straightforward. With its focus on virtualization and containers (primarily Docker) and a microservices, API-first approach (using OpenAPI), Superplatform seamlessly integrates other applications into its ecosystem.
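As a rough illustration of the "backendless" usage in the list above, the login and prompt calls from the quick-start example can be wrapped in one reusable function. This is a minimal sketch, not an official API: `createAsk` and its parameter names are hypothetical, and it relies only on the `login` and `addPrompt` calls and the `answer` field that appear in the quick-start example. The client classes are passed in as an argument so the helper can be tried with stand-ins as well as with `@superplatform/client`.

```javascript
// Hypothetical helper (not part of the client): logs in once, then sends
// synchronous prompts and returns just the answer text.
function createAsk({ UserSvcApi, PromptSvcApi, Configuration }, credentials) {
  let promptSvc; // created lazily on the first call, then reused

  return async function ask(prompt) {
    if (!promptSvc) {
      const loginResponse = await new UserSvcApi().login({ request: credentials });
      const token = loginResponse.token?.token;
      if (!token) throw new Error("Login did not return a token");
      promptSvc = new PromptSvcApi(new Configuration({ apiKey: token }));
    }

    const rsp = await promptSvc.addPrompt({ request: { sync: true, prompt } });
    return rsp.answer;
  };
}

// Usage with the real client (assumes a running Superplatform and a downloaded model):
//   import * as client from "@superplatform/client";
//   const ask = createAsk(client, { slug: "singulatron", password: "changeme" });
//   console.log(await ask("Is a cat an animal? Just answer with yes or no please."));
```

Keeping the token handling in one place means application code only deals with questions and answers. Concurrent first calls may each trigger a login in this sketch, which is harmless here but worth tightening in real code.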

See this page to help you get started.

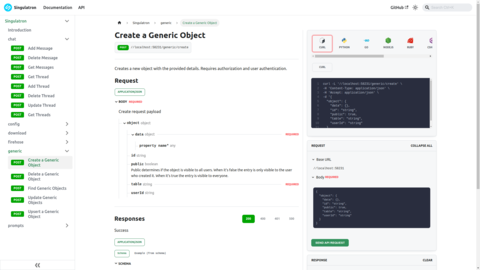

See https://superplatform.ai/docs/category/superplatform-api/

We have temporarily discontinued the distribution of the desktop version. Please refer to this page for alternative methods to run the software.

Superplatform is licensed under AGPL-3.0.