1. Dashboard

The Dashboard page provides insights on work items at the Backlog level (PBI, User Story, Bug, etc.). It excludes higher levels such as Epic and Feature, and the Task work item type is not included in the dataset.

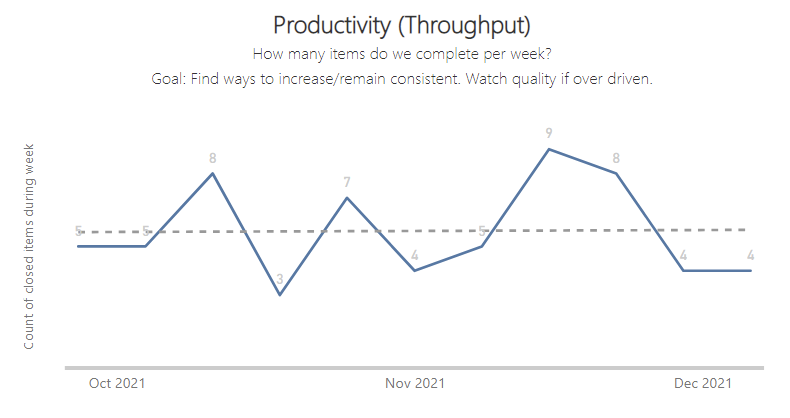

Productivity is measured as the number of completed items (PBIs/User Stories and Bugs) per week. This is commonly referred to as Throughput. The chart counts how many items were finished each calendar week, as well as plotting the trend over time.

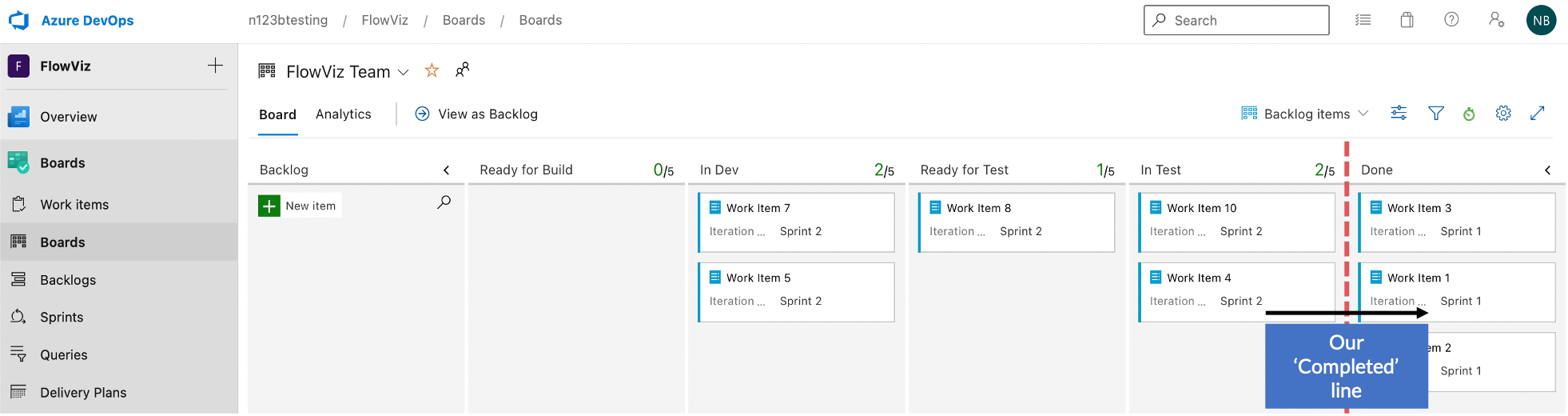

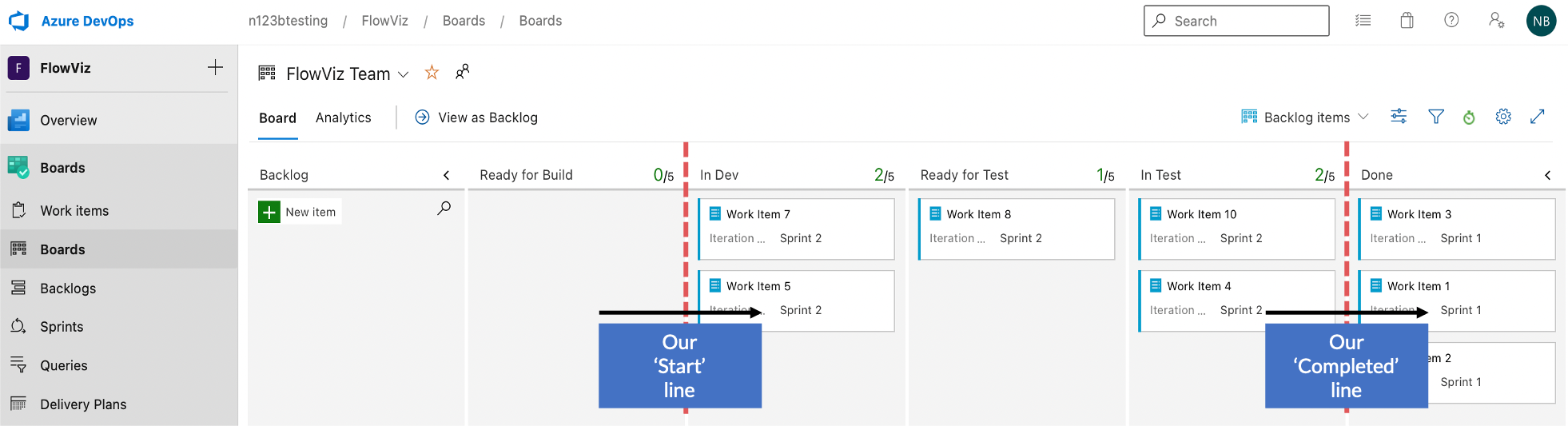

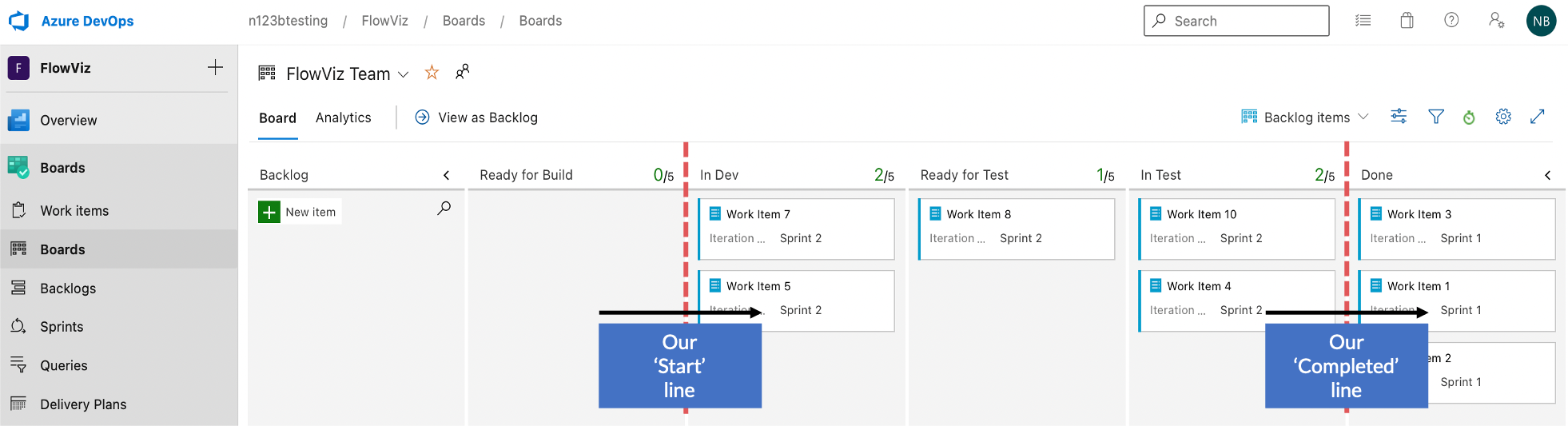

Any time an item moves past our ‘Completed’ line, it is included in our Throughput count.
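The weekly count can be sketched in a few lines of Python. This is only an illustration, not the dashboard's actual query: the function name `weekly_throughput` is hypothetical, and it assumes each item contributes a single completion date and that a "calendar week" means an ISO week starting on Monday.

```python
from collections import Counter
from datetime import date


def weekly_throughput(completed_dates):
    """Count completed items per (ISO year, ISO week)."""
    counts = Counter()
    for d in completed_dates:
        iso = d.isocalendar()
        # Assumption: weeks are ISO weeks (Monday start)
        counts[(iso[0], iso[1])] += 1
    return dict(sorted(counts.items()))


# Hypothetical completion dates: two in ISO week 1 of 2024, one in week 2
completed = [date(2024, 1, 2), date(2024, 1, 4), date(2024, 1, 9)]
throughput = weekly_throughput(completed)
print(throughput)  # {(2024, 1): 2, (2024, 2): 1}
```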

The intention is to help teams find what level of throughput per week is consistently achievable and to find ways to increase this over time by improving their processes.

When overdriven, it can lead to your Quality metric going up (the wrong direction!) as more bugs are reported. Sustainability may also move down into more orange bars (starting more than finishing).

Throughput can be gamed by breaking items down into smaller pieces (which may not be a bad thing, so long as they are vertically sliced!). Teams could also prematurely sign off work only to have bugs reported later (seen as an increase in the defect percentage in the Quality chart).

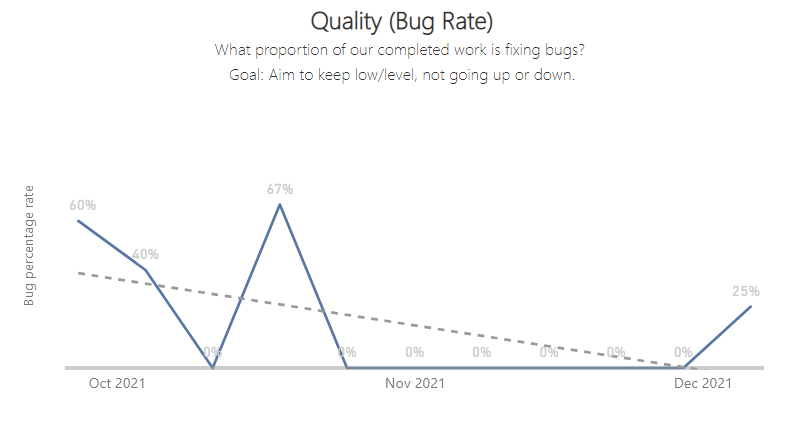

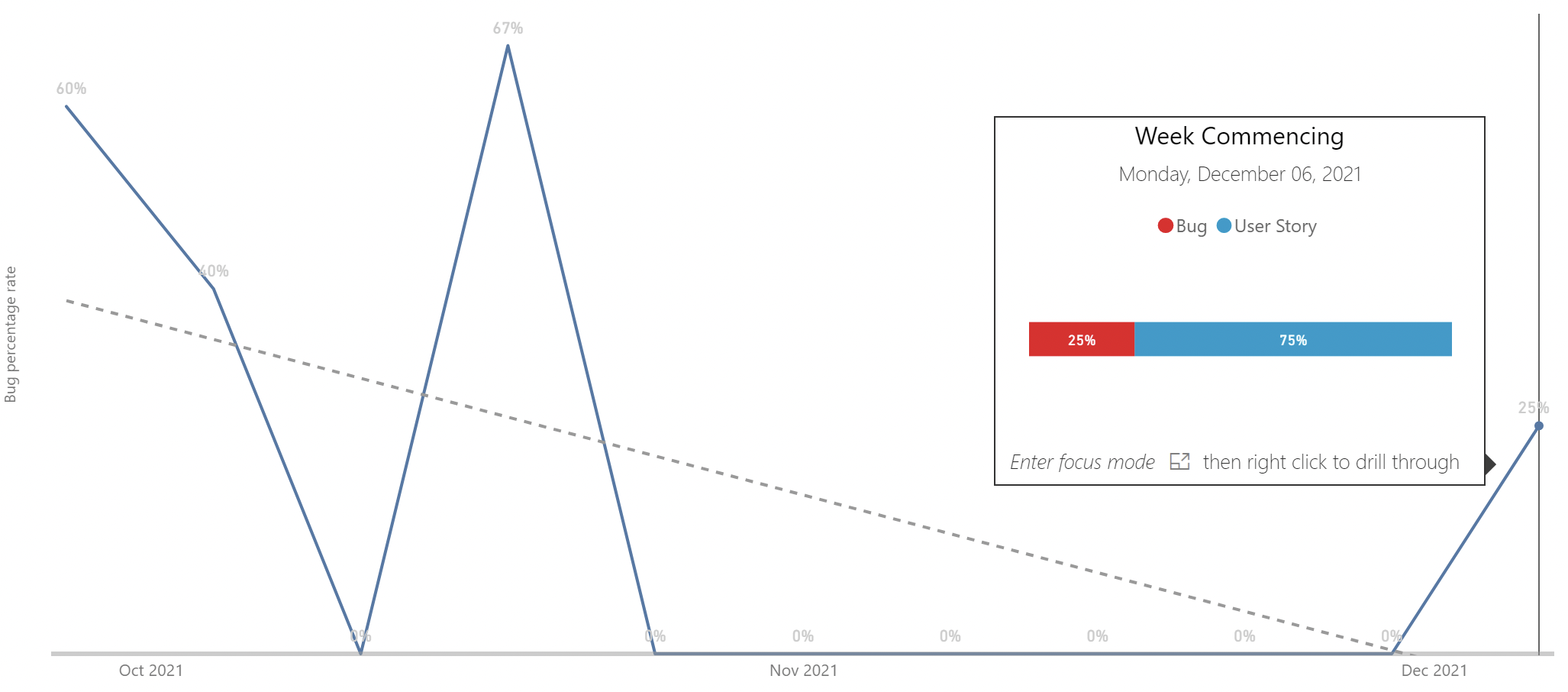

Quality is measured as the percentage of Throughput (completed work) that is bugs.

To calculate it, the number of bugs completed each week is divided by the total count of completed items that same week (with the result expressed as a percentage). For example, if you completed 4 Bugs and 6 User Stories in a week, then your Quality measure for that week would be 40%. This measure is the same regardless of whether bugs are treated at story level or task level.
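The arithmetic from the example can be written as a small Python function. The name `bug_rate` and the choice to return `None` for a week with no completed items are assumptions made for illustration; the dashboard may handle empty weeks differently.

```python
def bug_rate(bugs_completed, other_completed):
    """Percentage of a week's completed items that were bugs."""
    total = bugs_completed + other_completed
    if total == 0:
        # Assumption: a week with nothing completed has no defined rate
        return None
    return 100.0 * bugs_completed / total


# The example from the text: 4 Bugs and 6 User Stories completed in one week
rate = bug_rate(4, 6)
print(rate)  # 40.0
```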

The intention is to help teams continuously triage and complete bug work items at a consistent rate. Aim to keep the percentage steady (and low!) without deferring bugs.

When overdriven, your Responsiveness metric may increase for bug work item types. The number of open defects may grow (and thus work item age for these items will increase) if PBI/User Story work is done in preference to bugs.

It is gamed by entering Bugs as PBIs/User Stories, which may be seen as growing Throughput accompanied by falling customer satisfaction and rising complaints.

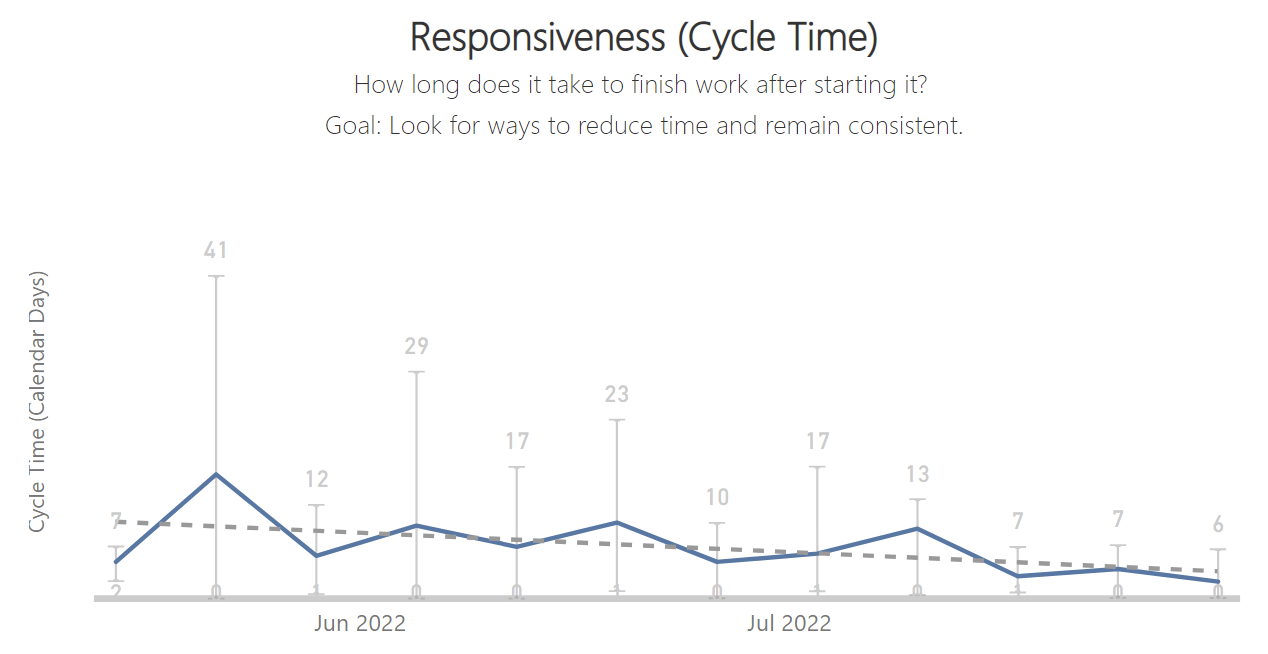

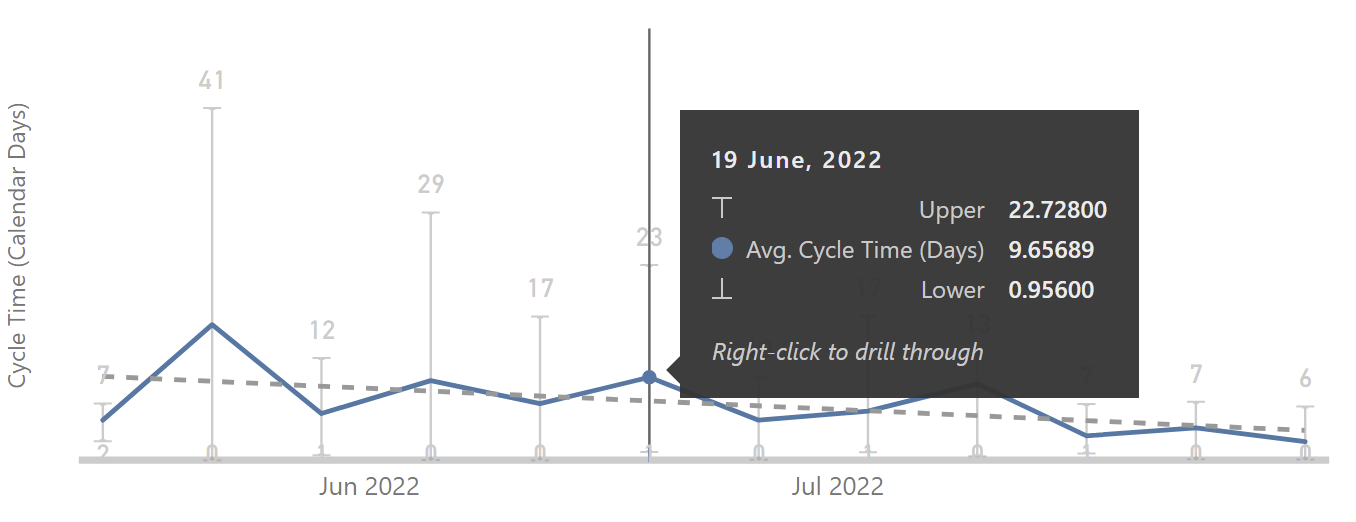

Responsiveness is measured as the average cycle time (the blue line) for all items completed in the same week. Cycle time is calculated in calendar days, not working days. Error bars are added for the upper (90th percentile) and lower (10th percentile) cycle times for a given week. Use the drill through to see the breakdown for each individual item.

Cycle Time is the time (in calendar days) from when an item first moves past our ‘Start’ line until it moves over our ‘Completed’ line.
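As a rough sketch, the cycle time and the weekly average with its 10th and 90th percentile error bars could be computed as below. The function names are hypothetical, the cycle time is taken as a plain date difference (some tools add one day so that same-day items count as 1), and the percentile interpolation method is an assumption that may not match the one the dashboard uses.

```python
from datetime import date
from statistics import mean, quantiles


def cycle_time_days(started, completed):
    """Calendar days between crossing the 'Start' and 'Completed' lines."""
    return (completed - started).days


def summarise(cycle_times):
    """Return (average, 10th percentile, 90th percentile) for one week.

    Expects at least one cycle time; an empty list raises StatisticsError.
    """
    avg = mean(cycle_times)
    if len(cycle_times) < 2:
        # A single item has no spread to report
        return avg, avg, avg
    # n=10 yields nine cut points: the first is the 10th percentile,
    # the last is the 90th. The "inclusive" method is an assumption.
    deciles = quantiles(cycle_times, n=10, method="inclusive")
    return avg, deciles[0], deciles[-1]


# Hypothetical item started on 1 Jan and completed on 8 Jan
one_item = cycle_time_days(date(2024, 1, 1), date(2024, 1, 8))
print(one_item)  # 7

# Hypothetical week with eleven items taking 1 to 11 days each
avg, p10, p90 = summarise(list(range(1, 12)))
print(avg, p10, p90)  # 6 2.0 10.0
```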

The intention is to help teams understand the cycle time for completing items, and to look for ways to reduce the time (and variability) by eliminating unnecessary steps and waste.

When overdriven, your Quality metric goes up as more bugs are reported due to premature completion. The Sustainability chart shows higher peaks as more work completes, followed by work starting on the bugs that are then opened.

It is gamed by only starting fast, simple work (seen as Productivity increasing initially, only to regress later) and/or by prematurely marking items as completed (seen as a growing Quality (Bug Rate) percentage).

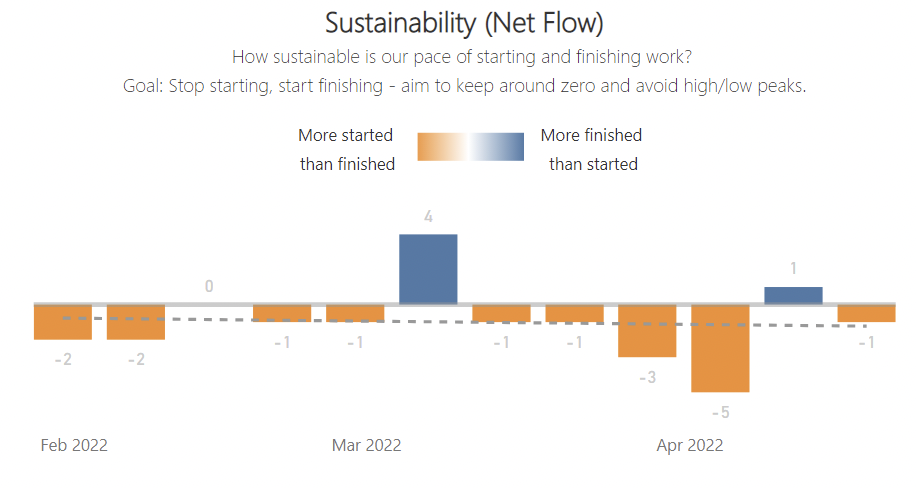

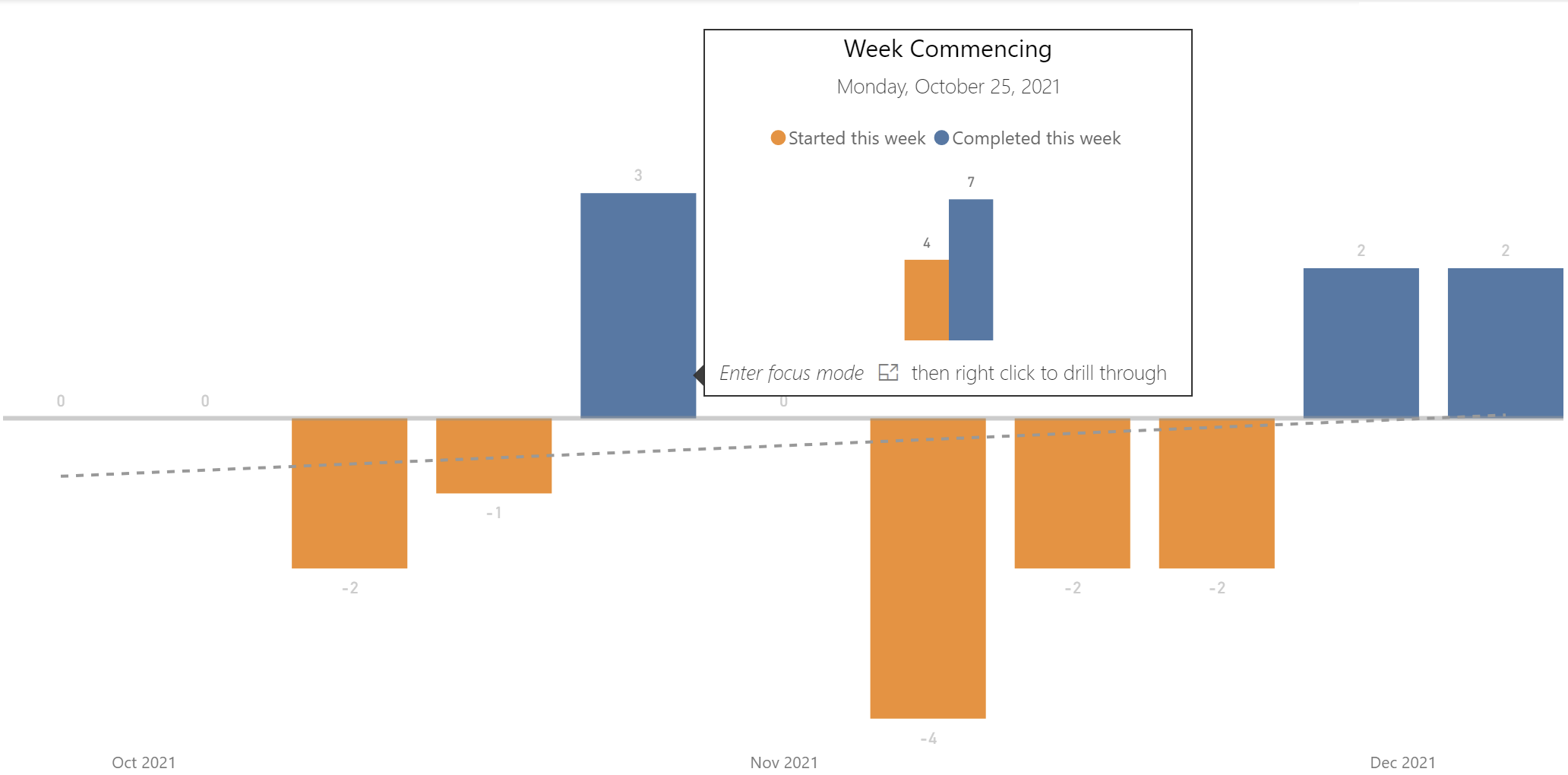

Sustainability is measured as net flow per week. The chart counts how many items were finished and subtracts how many items were started for the same calendar week, creating a positive bar (blue) if more is finished than started, or a negative bar (orange) if more items were started than finished.

Any time an item moves past our ‘Start’ line (i.e. goes 'In Progress') it is included in our Started count. Any time an item moves past our ‘Completed’ line it is included in our Completed count.
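Net flow per week can be sketched as completed minus started. As with any illustration here, the names (`iso_week`, `weekly_net_flow`) are hypothetical and the use of ISO weeks (Monday start) is an assumption rather than the dashboard's confirmed behaviour.

```python
from collections import Counter
from datetime import date


def iso_week(d):
    """Return (ISO year, ISO week) for a date."""
    iso = d.isocalendar()
    return (iso[0], iso[1])


def weekly_net_flow(started_dates, completed_dates):
    """Completed minus started per week; negative means more started than finished."""
    started = Counter(iso_week(d) for d in started_dates)
    completed = Counter(iso_week(d) for d in completed_dates)
    weeks = sorted(set(started) | set(completed))
    # Counter returns 0 for weeks missing from one side
    return {w: completed[w] - started[w] for w in weeks}


# Hypothetical data: week 1 starts 3 and finishes 1; week 2 starts 1 and finishes 2
started = [date(2024, 1, 1), date(2024, 1, 2), date(2024, 1, 3), date(2024, 1, 8)]
completed = [date(2024, 1, 5), date(2024, 1, 9), date(2024, 1, 10)]
net_flow = weekly_net_flow(started, completed)
print(net_flow)  # {(2024, 1): -2, (2024, 2): 1}
```

In this sample, week 1 would appear as an orange bar (-2) and week 2 as a blue bar (+1).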

Stop starting, start finishing! Help teams focus on finishing something in-progress (or helping someone on the team finish something) before starting something new. Aim for getting close to zero consistently (meaning we finish as many things as we start).

When overdriven, it can lead to your Quality metric going up (the wrong direction!) as more bugs are reported.

Sustainability can be gamed, as teams can prematurely sign off work only to have bugs reported later (seen as an increase in the Quality (Bug Rate) chart). Teams can also slow down starting new work (seen as a decrease in the Productivity (Throughput) chart).

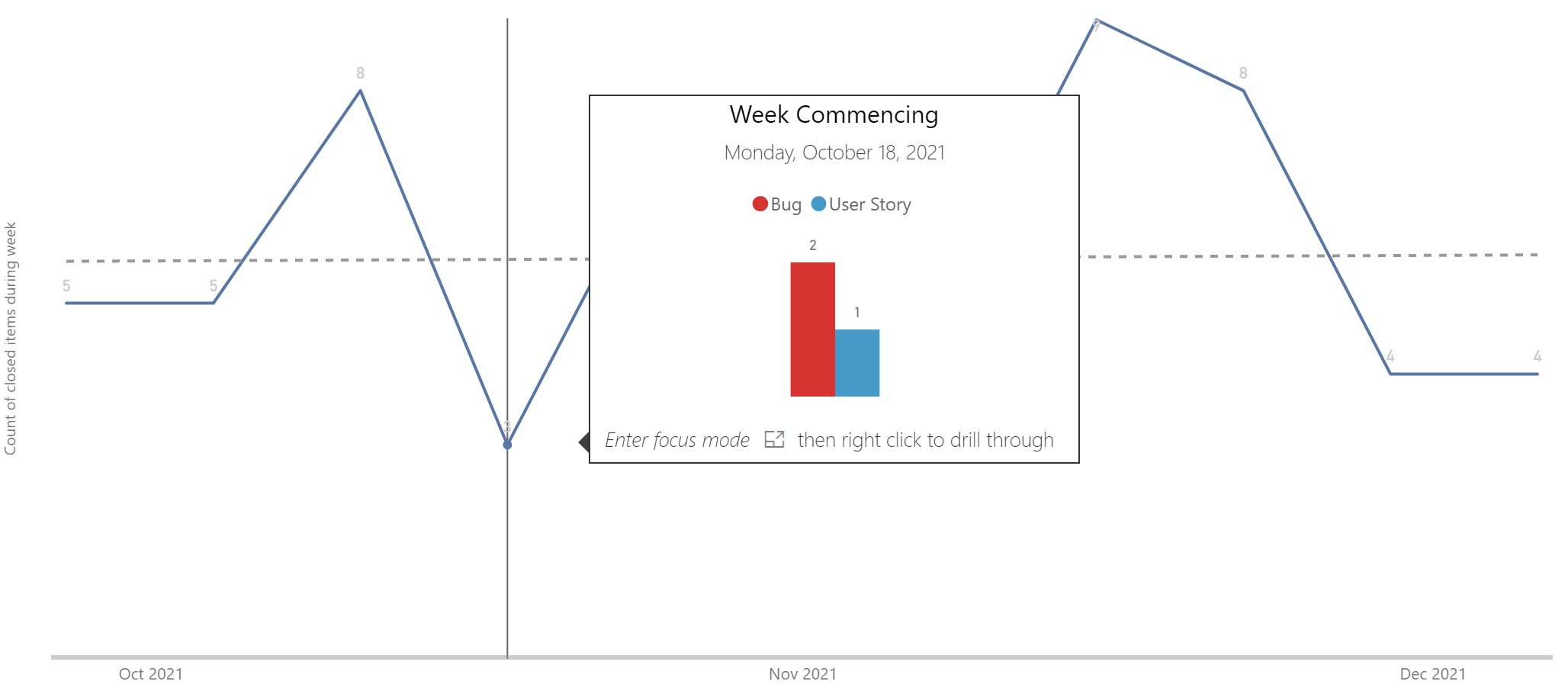

If you want to dig deeper into the insights, drill through is available on this page. Simply go into focus mode or right-click, then hover over drill through and click the page (the title will be D1/D2/D3/D4). Here you can see the individual items, with each title acting as a hyperlink to the item (the hyperlink works for Azure DevOps only).