Music Agent is an LLM-powered autonomous agent for music. Its modular, highly extensible framework lets you focus on the most creative aspects of music comprehension and composition!

- Accessibility: Music Agent dynamically selects the most appropriate methods for each music-related task.

- Unity: Music Agent unifies a wide array of tools into a single system, incorporating Hugging Face models, GitHub projects, and Web APIs.

- Modularity: Music Agent offers high modularity, allowing users to effortlessly enhance its capabilities by integrating new functions.


To set up the system from source, follow the steps below:

# Make sure git-lfs is installed

sudo apt-get update

sudo apt-get install -y git-lfs

# Install music-related libs

sudo apt-get install -y libsndfile1-dev

sudo apt-get install -y fluidsynth

sudo apt-get install -y ffmpeg

sudo apt-get install -y lilypond
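To confirm that the apt-get steps above worked, a small Python check like the following can look for the installed programs on PATH. This is a sketch, not part of Music Agent: the binary names are assumptions based on the Debian/Ubuntu packages, and libsndfile1-dev is skipped because it installs a library rather than a command.

```python
import shutil
from typing import Callable, Iterable, List, Optional

# Programs installed by the apt-get commands above.
# Assumption: these are the binary names shipped by the Debian/Ubuntu packages.
# libsndfile1-dev is not listed because it provides a library, not a command.
REQUIRED_BINARIES = ("git-lfs", "fluidsynth", "ffmpeg", "lilypond")


def missing_binaries(
    names: Iterable[str] = REQUIRED_BINARIES,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the names of programs that cannot be found on PATH."""
    return [name for name in names if which(name) is None]


if __name__ == "__main__":
    missing = missing_binaries()
    if missing:
        print("Missing system tools:", ", ".join(missing))
    else:
        print("All system tools found.")
```

The `which` parameter defaults to the standard-library `shutil.which`; it is injectable only so the check can be tested without touching the real PATH.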

# Clone the repository from muzic

git clone https://github.com/muzic

cd muzic/agent

Next, install the dependent libraries. pip may report some dependency conflicts, but they should not affect the functionality of the system.

pip install --upgrade pip

pip install semantic-kernel

pip install -r requirements.txt

pip install numpy==1.23.0

pip install protobuf==3.20.3

By following these steps, you will have set up the system from source.
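Because a later `pip install` can silently upgrade numpy or protobuf, a quick check that the pins above survived may save some debugging. This sketch uses only the standard-library `importlib.metadata`; the helper name `version_mismatches` is hypothetical and not part of the repository.

```python
from importlib import metadata
from typing import Callable, Dict, Optional, Tuple

# Versions pinned by the pip commands above.
PINNED = {"numpy": "1.23.0", "protobuf": "3.20.3"}


def version_mismatches(
    pinned: Dict[str, str] = PINNED,
    get_version: Callable[[str], str] = metadata.version,
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each unsatisfied pin to (expected, found); found is None if not installed."""
    mismatches = {}
    for package, expected in pinned.items():
        try:
            found = get_version(package)
        except metadata.PackageNotFoundError:
            found = None
        if found != expected:
            mismatches[package] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for package, (expected, found) in version_mismatches().items():
        print(f"{package}: expected {expected}, found {found or 'not installed'}")
```

An empty result means both pins are in place.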

Then download the model parameters:

cd models/ # Or your custom folder for tools

bash download.sh

In addition, download the parameters of GitHub-hosted tools according to your needs:

To use muzic/roc, follow these steps:

cd YOUR_MODEL_DIR # models/ by default

cd muzic/roc

- Download the checkpoint and database from the following link.

- Place the downloaded checkpoint file in the music-ckpt folder.

- Create a folder named database to store the downloaded database files.
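Once the files are in place, a sketch like the one below can confirm the muzic/roc layout described in these steps. It assumes the music-ckpt and database folders sit directly inside YOUR_MODEL_DIR/muzic/roc; `check_roc_layout` is a hypothetical helper, not part of the repository, and it only checks that each folder is non-empty, not that the files are valid.

```python
from pathlib import Path
from typing import List, Union


def _has_files(folder: Path) -> bool:
    """True if `folder` exists and contains at least one entry."""
    return folder.is_dir() and any(folder.iterdir())


def check_roc_layout(roc_dir: Union[str, Path]) -> List[str]:
    """List problems with the muzic/roc layout.

    Assumption: `roc_dir` is YOUR_MODEL_DIR/muzic/roc, with the music-ckpt
    and database folders directly inside it.
    """
    roc = Path(roc_dir)
    problems = []
    if not _has_files(roc / "music-ckpt"):
        problems.append(f"no checkpoint file in {roc / 'music-ckpt'}")
    if not _has_files(roc / "database"):
        problems.append(f"no database files in {roc / 'database'}")
    return problems


if __name__ == "__main__":
    # models/ is the default YOUR_MODEL_DIR.
    for problem in check_roc_layout("models/muzic/roc"):
        print(problem)
```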

To use DiffSinger, follow these steps:

cd YOUR_MODEL_DIR

cd DiffSinger

- Download the checkpoint and config from the following link and unzip them into the checkpoints folder.

- You can find other DiffSinger checkpoints in its docs.

To use DDSP, follow these steps:

cd YOUR_MODEL_DIR

mkdir ddsp

cd ddsp

pip install gsutil

mkdir violin; gsutil cp gs://ddsp/models/timbre_transfer_colab/2021-07-08/solo_violin_ckpt/* violin/

mkdir flute; gsutil cp gs://ddsp/models/timbre_transfer_colab/2021-07-08/solo_flute_ckpt/* flute/

To use audio synthesis, download MS Basic.sf3 and place it in the main folder.
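The DDSP and soundfont steps can be checked the same way. In this sketch, `missing_synthesis_assets` is a hypothetical helper, and the "main folder" is assumed to be the muzic/agent directory you cloned; adjust both paths if your setup differs.

```python
from pathlib import Path
from typing import List, Union

# Instruments downloaded by the gsutil commands above.
DDSP_INSTRUMENTS = ("violin", "flute")
SOUNDFONT_NAME = "MS Basic.sf3"


def missing_synthesis_assets(
    model_dir: Union[str, Path], agent_dir: Union[str, Path]
) -> List[str]:
    """Return the DDSP checkpoint folders and soundfont that are missing.

    Assumptions: `model_dir` is YOUR_MODEL_DIR and `agent_dir` is the
    "main folder" (taken here to be muzic/agent).
    """
    missing = []
    for instrument in DDSP_INSTRUMENTS:
        ckpt_dir = Path(model_dir) / "ddsp" / instrument
        # An absent or empty folder suggests the gsutil copy did not complete.
        if not (ckpt_dir.is_dir() and any(ckpt_dir.iterdir())):
            missing.append(str(ckpt_dir))
    soundfont = Path(agent_dir) / SOUNDFONT_NAME
    if not soundfont.is_file():
        missing.append(str(soundfont))
    return missing
```

For example, `missing_synthesis_assets("models", ".")` run from muzic/agent would list anything still to download.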

Edit config.yaml to suit your application scenario:

# optional tools
huggingface:
  token: YOUR_HF_TOKEN
spotify:
  client_id: YOUR_CLIENT_ID
  client_secret: YOUR_CLIENT_SECRET
google:
  api_key: YOUR_API_KEY
  custom_search_engine_id: YOUR_SEARCH_ENGINE_ID

- Set your Hugging Face token.

- Set your Spotify Client ID and Client Secret, following the Spotify documentation.

- Set your Google API key and Google Custom Search Engine ID.
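Before running the agent, you may want to see which optional tools still carry the placeholder values from the config.yaml excerpt above. This sketch works on the parsed config as a plain dict; `load_config` assumes PyYAML (`pip install pyyaml`) is available, and both helper names are hypothetical.

```python
from typing import Any, Dict, List

# Placeholder values copied from the config.yaml excerpt above.
PLACEHOLDERS = {
    ("huggingface", "token"): "YOUR_HF_TOKEN",
    ("spotify", "client_id"): "YOUR_CLIENT_ID",
    ("spotify", "client_secret"): "YOUR_CLIENT_SECRET",
    ("google", "api_key"): "YOUR_API_KEY",
    ("google", "custom_search_engine_id"): "YOUR_SEARCH_ENGINE_ID",
}


def unconfigured_tools(config: Dict[str, Any]) -> List[str]:
    """Return the optional tool sections that still hold empty or placeholder values."""
    tools = set()
    for (section, key), placeholder in PLACEHOLDERS.items():
        # A missing or empty section is treated as unconfigured.
        value = (config.get(section) or {}).get(key)
        if value in (None, "", placeholder):
            tools.add(section)
    return sorted(tools)


def load_config(path: str = "config.yaml") -> Dict[str, Any]:
    # Assumption: PyYAML is installed. Imported here so unconfigured_tools
    # can be used on an already-parsed dict without it.
    import yaml

    with open(path, encoding="utf-8") as f:
        return yaml.safe_load(f) or {}
```

Usage: `print(unconfigured_tools(load_config("config.yaml")))`. Since these tools are optional, a non-empty result is informational rather than an error.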

Fill in the .env file:

OPENAI_API_KEY=""

OPENAI_ORG_ID=""

# optional

AZURE_OPENAI_DEPLOYMENT_NAME=""

AZURE_OPENAI_ENDPOINT=""

AZURE_OPENAI_API_KEY=""

If you use Azure OpenAI, remember to set use_azure_openai in config.yaml accordingly.
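The snippet below is a hedged sketch of checking the .env file before launch. It uses a deliberately simple, hypothetical parser (python-dotenv's `dotenv_values` is a more complete alternative), and its rules are assumptions rather than the agent's own logic: OPENAI_API_KEY is treated as required when using OpenAI, the three AZURE_OPENAI_* values as required when use_azure_openai is enabled, and OPENAI_ORG_ID as optional.

```python
from typing import Dict, List

# Assumed requirements for each backend (see the lead-in above).
OPENAI_KEYS = ("OPENAI_API_KEY",)
AZURE_KEYS = (
    "AZURE_OPENAI_DEPLOYMENT_NAME",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
)


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY="value" lines; blank lines and # comments are skipped."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip().strip('"')
    return env


def missing_credentials(env: Dict[str, str], use_azure_openai: bool) -> List[str]:
    """Return required keys that are absent or empty for the chosen backend."""
    required = AZURE_KEYS if use_azure_openai else OPENAI_KEYS
    return [key for key in required if not env.get(key)]
```

Usage: read the file with `open(".env").read()`, pass it to `parse_env`, and call `missing_credentials` with the same use_azure_openai value you set in config.yaml.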

You can now run the agent with:

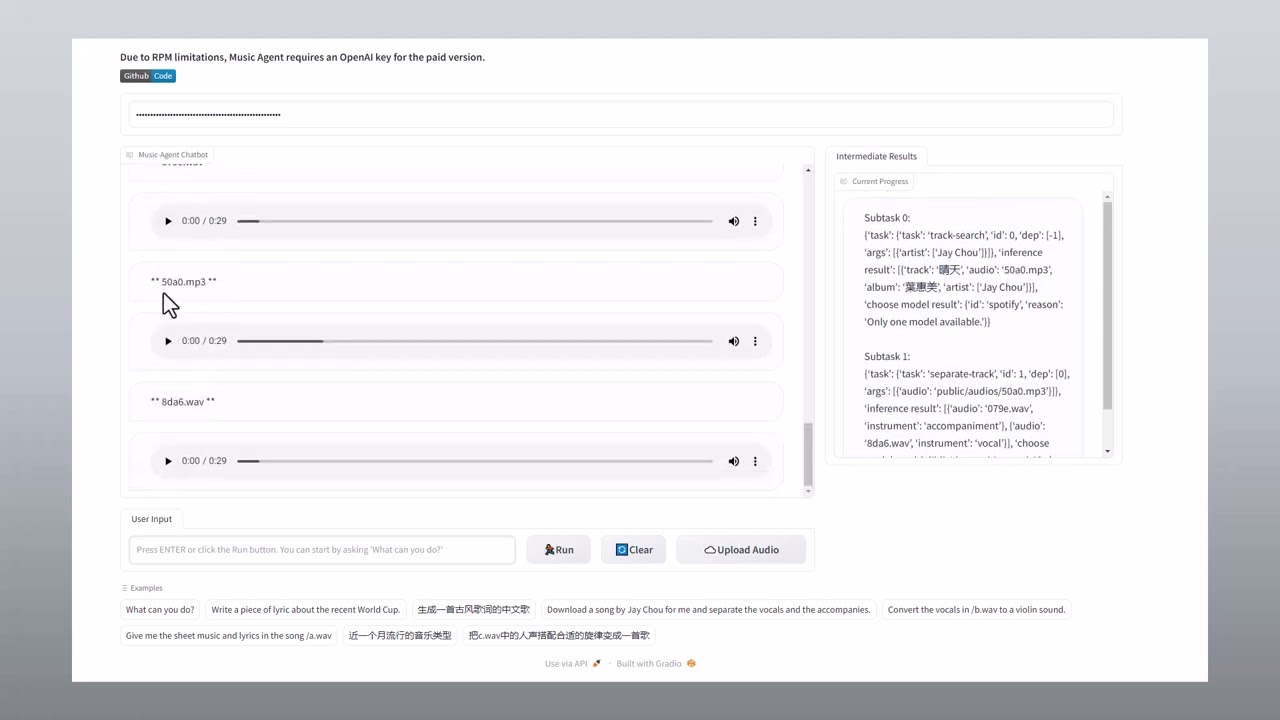

python agent.py --config config.yaml

We also provide a Gradio interface:

python gradio_agent.py --config config.yaml

The Gradio interface does not require setting up a .env file, but it supports only an OpenAI key.

If you use this code, please cite it as:

@article{yu2023musicagent,
  title={MusicAgent: An AI Agent for Music Understanding and Generation with Large Language Models},
  author={Yu, Dingyao and Song, Kaitao and Lu, Peiling and He, Tianyu and Tan, Xu and Ye, Wei and Zhang, Shikun and Bian, Jiang},
  journal={arXiv preprint arXiv:2310.11954},
  year={2023}
}