A Python package for enhancing the spatial resolution of Sentinel-2 satellite images to 2.5 meters 🚀

GitHub: https://github.com/IPL-UV/supers2 🌐

PyPI: https://pypi.org/project/supers2/ 🛠️

supers2 is a Python package that enhances the spatial resolution of Sentinel-2 satellite images to 2.5 meters using deep neural network models. Together with the cubo package, which downloads the data, it covers preparing and processing Sentinel-2 imagery and applying deep learning models to increase its spatial resolution.

Install the latest version from PyPI:

```bash
pip install cubo supers2
```

```python
import cubo
import numpy as np
import torch
import supers2

# Create a Sentinel-2 L2A data cube for a specific location and date range
da = cubo.create(
    lat=4.31,
    lon=-76.2,
    collection="sentinel-2-l2a",
    bands=["B02", "B03", "B04", "B05", "B06", "B07", "B08", "B8A", "B11", "B12"],
    start_date="2021-06-01",
    end_date="2021-10-10",
    edge_size=128,   # 128 x 128 pixels
    resolution=10    # 10 m pixels
)
```

When converting the NumPy array to a PyTorch tensor, calling `.cuda()` is optional and depends on whether you have access to a GPU. The two cases are:

- GPU: If a GPU is available and CUDA is installed, you can move the tensor to the GPU with `.cuda()`. This speeds up processing, especially for large datasets or deep learning models.
- CPU: If no GPU is available, the tensor is processed on the CPU, which is the default behavior in PyTorch. In this case, simply omit the `.cuda()` call.

Here’s how you can handle both scenarios dynamically:

```python
# Select one time step (index 11) of the cube, load it into memory,
# and scale the digital numbers to reflectance (roughly 0 to 1)
original_s2_numpy = (da[11].compute().to_numpy() / 10_000).astype("float32")

# Use the GPU if CUDA is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Create the tensor and move it to the selected device (CPU or GPU)
X = torch.from_numpy(original_s2_numpy).float().to(device)
```

In supers2, you can choose from several types of models to enhance the spatial resolution of Sentinel-2 images. Each model is configured with `supers2.setmodel`: the `sr_model_snippet` argument selects the super-resolution model, while `fusionx2_model_snippet` and `fusionx4_model_snippet` select the additional fusion models. The configurations for each model type and their size options follow.

CNN-based models are available in the following sizes: lightweight, small, medium, expanded, and large.

```python
# Example configuration for a CNN model
models = supers2.setmodel(
    sr_model_snippet="sr__opensrbaseline__cnn__lightweight__l1",
    fusionx2_model_snippet="fusionx2__opensrbaseline__cnn__lightweight__l1",
    fusionx4_model_snippet="fusionx4__opensrbaseline__cnn__lightweight__l1",
    resolution="2.5m",
    device=device
)
```

Model size options (replace `lightweight` in `sr_model_snippet` with the desired size):

- lightweight
- small
- medium
- expanded
- large

SWIN models are optimized for varying levels of detail and offer size options: lightweight, small, medium, and expanded.

```python
# Example configuration for a SWIN model
models = supers2.setmodel(
    sr_model_snippet="sr__opensrbaseline__swin__lightweight__l1",
    fusionx2_model_snippet="fusionx2__opensrbaseline__cnn__lightweight__l1",
    fusionx4_model_snippet="fusionx4__opensrbaseline__cnn__lightweight__l1",
    resolution="2.5m",
    device=device
)
```

Available sizes:

- lightweight
- small
- medium
- expanded

MAMBA models also come in various sizes, similar to SWIN and CNN: lightweight, small, medium, and expanded.

```python
# Example configuration for a MAMBA model
models = supers2.setmodel(
    sr_model_snippet="sr__opensrbaseline__mamba__lightweight__l1",
    fusionx2_model_snippet="fusionx2__opensrbaseline__cnn__lightweight__l1",
    fusionx4_model_snippet="fusionx4__opensrbaseline__cnn__lightweight__l1",
    resolution="2.5m",
    device=device
)
```

Available sizes:

- lightweight
- small
- medium
- expanded
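The snippet strings in these examples follow a visible pattern, `sr__opensrbaseline__<architecture>__<size>__l1`, with the diffusion model as a single special case. The helper below is a hypothetical convenience that is not part of supers2: it assumes that pattern holds for every listed size (only the `lightweight` snippets actually appear in this document) and checks the requested size against the lists above before building the string. The names `sr_snippet`, `SIZES`, `FUSIONX2`, and `FUSIONX4` are illustrative only.

```python
# Hypothetical helper, NOT part of supers2. It assumes every listed size
# follows the naming pattern seen in the examples above.

SIZES = {
    "cnn": ("lightweight", "small", "medium", "expanded", "large"),
    "swin": ("lightweight", "small", "medium", "expanded"),
    "mamba": ("lightweight", "small", "medium", "expanded"),
}

# Fusion snippets used in every example above (CNN, lightweight)
FUSIONX2 = "fusionx2__opensrbaseline__cnn__lightweight__l1"
FUSIONX4 = "fusionx4__opensrbaseline__cnn__lightweight__l1"


def sr_snippet(arch: str, size: str) -> str:
    """Build an sr_model_snippet string, validating the size first."""
    if arch == "diffusion":
        # The diffusion model is only available in the large size
        if size != "large":
            raise ValueError("opensrdiffusion is only available in the 'large' size")
        return "sr__opensrdiffusion__large__l1"
    if arch not in SIZES:
        raise ValueError(f"unknown architecture: {arch!r}")
    if size not in SIZES[arch]:
        raise ValueError(f"{arch} sizes are {SIZES[arch]}, not {size!r}")
    return f"sr__opensrbaseline__{arch}__{size}__l1"


print(sr_snippet("swin", "medium"))  # sr__opensrbaseline__swin__medium__l1
```

The returned string can be passed as `sr_model_snippet` to `supers2.setmodel`, with `FUSIONX2` and `FUSIONX4` as the fusion snippets. A typo such as `"swin", "large"` then fails early with a clear message instead of at model-loading time.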

The opensrdiffusion model is only available in the large size. This model is suited for deep resolution enhancement without additional configurations.

```python
# Configuration for the Diffusion model
models = supers2.setmodel(
    sr_model_snippet="sr__opensrdiffusion__large__l1",
    fusionx2_model_snippet="fusionx2__opensrbaseline__cnn__lightweight__l1",
    fusionx4_model_snippet="fusionx4__opensrbaseline__cnn__lightweight__l1",
    resolution="2.5m",
    device=device
)
```

For fast interpolation, bilinear and bicubic interpolation models can be used. They need no complex configuration and are useful as quick baselines when evaluating the enhanced-resolution output.
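supers2 provides its own `BilinearSR` and `BicubicSR` classes, and this sketch does not reproduce them. Assuming they perform standard interpolation, the same idea can be illustrated with plain PyTorch's `torch.nn.functional.interpolate` on a random stand-in for `X`. The point is the shapes: `X[None]` adds a leading batch dimension, and a scale factor of 4 turns 10 m pixels into 2.5 m pixels, so a 128 x 128 tile becomes 512 x 512.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Random stand-in for X: 10 bands, 128 x 128 pixels, values in [0, 1)
X = torch.rand(10, 128, 128)

# X[None] adds a batch dimension: (1, 10, 128, 128)
batch = X[None]

# 4x upsampling: 10 m -> 2.5 m, i.e. 128 -> 512 pixels per side
bilinear = F.interpolate(batch, scale_factor=4, mode="bilinear", align_corners=False)

# Bicubic can overshoot the input range, so clamp back to [0, 1]
bicubic = F.interpolate(batch, scale_factor=4, mode="bicubic", align_corners=False).clamp(0, 1)

print(batch.shape)     # torch.Size([1, 10, 128, 128])
print(bilinear.shape)  # torch.Size([1, 10, 512, 512])
```

Bilinear output is always a weighted average of neighboring inputs and stays within their range; bicubic uses negative weights near edges, which is why the clamp is applied only there.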

from supers2.models.simple import BilinearSR, BicubicSR

# Bilinear Interpolation Model

bilinear_model = BilinearSR(device=device, scale_factor=4).to(device)

super_bilinear = bilinear_model(X[None])

# Bicubic Interpolation Model

bicubic_model = BicubicSR(device=device, scale_factor=4).to(device)

super_bicubic = bicubic_model(X[None])# Apply the model to enhance the image resolution to 2.5 meters

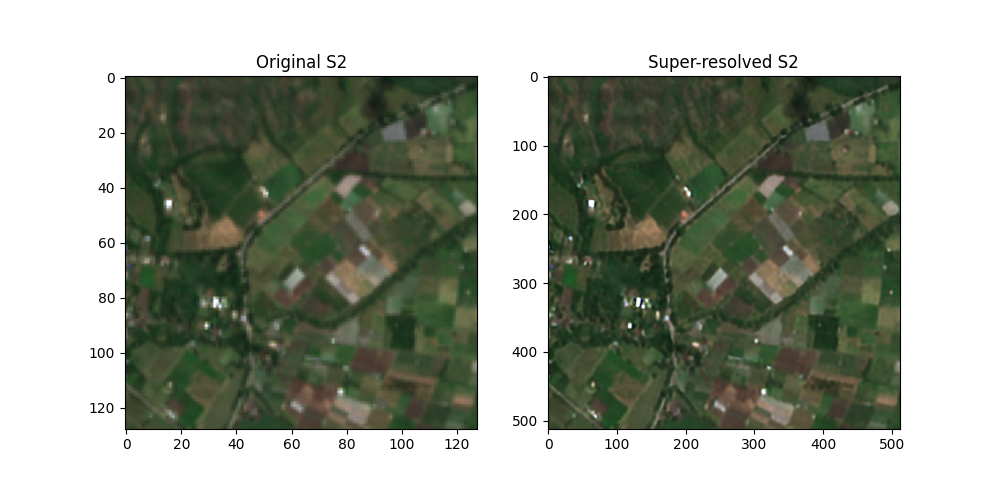

superX = supers2.predict(X, models=models, resolution="2.5m")import matplotlib.pyplot as plt

# Plot the original and enhanced-resolution images

fig, ax = plt.subplots(1, 2, figsize=(10, 5))

ax[0].imshow(X[[2, 1, 0]].permute(1, 2, 0).cpu().numpy()*4)

ax[0].set_title("Original S2")

ax[1].imshow(superX[[2, 1, 0]].permute(1, 2, 0).cpu().numpy()*4)

ax[1].set_title("Enhanced Resolution S2")

plt.show()- Enhance spatial resolution to 2.5 meters: Use advanced CNN models to enhance Sentinel-2 imagery.

- Neural network-based approach: Multiple model sizes, from lightweight to large, to fit different computing budgets.

- Python integration: Work with Sentinel-2 data cubes (such as those created with cubo) directly through the Python API.