A pretrained universal neural network potential for charge-informed atomistic modeling

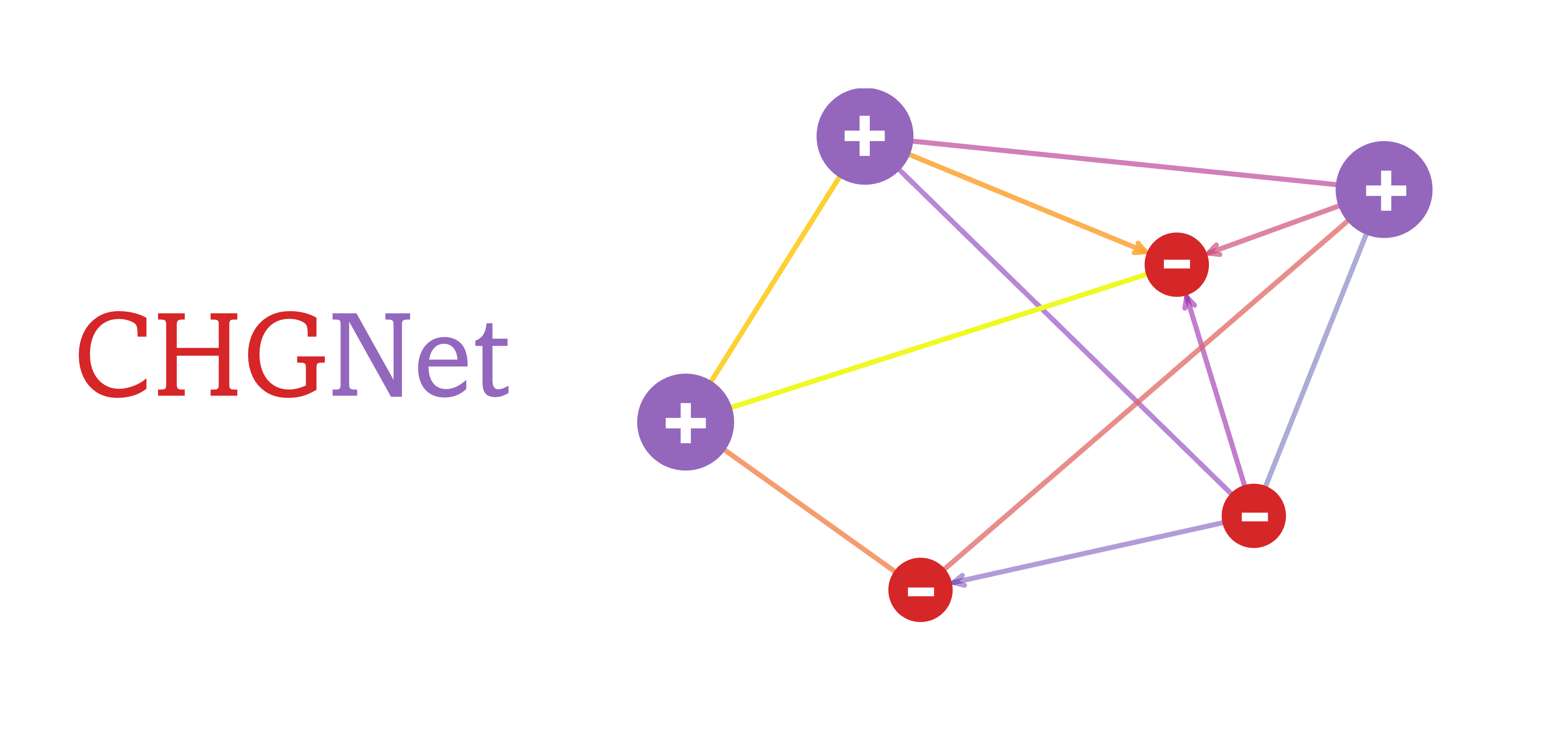

CHGNet models electron interactions and charge distribution in atomistic simulations with near-DFT accuracy. Charge inference is achieved by regularizing the atom features with DFT magnetic moments, which carry rich information about both local ionic environments and charge distribution.

Pretrained CHGNet achieves state-of-the-art (SOTA) performance on Matbench Discovery.

| Notebooks | Descriptions |
|---|---|
| CHGNet Basics | Examples for loading pre-trained CHGNet, predicting energy, force, stress, magmom as well as running structure optimization and MD. |
| Tuning CHGNet | Examples of fine-tuning the pretrained CHGNet to your system of interest. |
| Visualize Relaxation | Crystal Toolkit that visualizes atom positions, energies and forces of a structure during CHGNet relaxation. |

You can install chgnet through pip:

```sh
pip install chgnet
```

View the API docs.
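To confirm from Python that the install succeeded, a minimal sketch using only the standard library is shown below. The helper name `installed_version` is hypothetical and not part of CHGNet; it simply queries the package metadata that pip records.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


found = installed_version("chgnet")
if found is None:
    print("chgnet is not installed in this environment")
else:
    print(f"chgnet version {found} is installed")
```

This avoids importing the model itself, so it runs quickly and works even when no GPU libraries are available.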

Pretrained CHGNet can predict the energy (eV/atom), force (eV/Å), stress (GPa) and magmom ($\mu_B$):

```python
from chgnet.model.model import CHGNet
from pymatgen.core import Structure

# Load the pretrained model and an example structure
chgnet = CHGNet.load()
structure = Structure.from_file("examples/o-LiMnO2_unit.cif")
prediction = chgnet.predict_structure(structure)

# key[0] maps each property name to its single-letter key in the prediction dict
for key in ("energy", "forces", "stress", "magmom"):
    print(f"CHGNet-predicted {key}={prediction[key[0]]}\n")
```

Charge-informed molecular dynamics can be simulated with the pretrained CHGNet through the ASE environment:

```python
from chgnet.model.model import CHGNet
from chgnet.model.dynamics import MolecularDynamics
from pymatgen.core import Structure
import warnings

# Silence warnings raised from within pymatgen and ASE
warnings.filterwarnings("ignore", module="pymatgen")
warnings.filterwarnings("ignore", module="ase")

structure = Structure.from_file("examples/o-LiMnO2_unit.cif")
chgnet = CHGNet.load()

md = MolecularDynamics(
    atoms=structure,
    model=chgnet,
    ensemble="nvt",
    temperature=1000,  # in K
    timestep=2,  # in femto-seconds
    trajectory="md_out.traj",
    logfile="md_out.log",
    loginterval=100,
    use_device="cpu",  # use 'cuda' for faster MD
)
md.run(50)  # run a 0.1 ps MD simulation (50 steps x 2 fs)
```

Visualize the magnetic moments after the MD run:

```python
from ase.io.trajectory import Trajectory
from pymatgen.io.ase import AseAtomsAdaptor
from chgnet.utils import solve_charge_by_mag

traj = Trajectory("md_out.traj")
# site-wise magnetic moments of the final frame
mag = traj[-1].get_magnetic_moments()

# get the non-charge-decorated structure
structure = AseAtomsAdaptor.get_structure(traj[-1])
print(structure)

# get the charge-decorated structure
struct_with_chg = solve_charge_by_mag(structure)
print(struct_with_chg)
```

CHGNet can perform fast structure optimization and provide site-wise magnetic moments. This makes it ideal for pre-relaxation and MAGMOM initialization in spin-polarized DFT.
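As a rough illustration of the MAGMOM initialization mentioned above, the sketch below formats a list of site-wise moments into a VASP `MAGMOM` line for an INCAR file. The helper name `format_magmom_tag` and the example moments are hypothetical and not part of CHGNet; in practice the moments would come from a CHGNet prediction such as the `magmom` output shown earlier.

```python
from itertools import groupby


def format_magmom_tag(moments, precision=2):
    """Build a VASP INCAR MAGMOM line, compressing consecutive repeats as N*value."""
    # Round each moment; adding 0.0 turns a rounded -0.0 into 0.0
    rounded = [round(float(m), precision) + 0.0 for m in moments]
    parts = []
    for value, group in groupby(rounded):
        count = len(list(group))
        text = f"{value:.{precision}f}"
        parts.append(f"{count}*{text}" if count > 1 else text)
    return "MAGMOM = " + " ".join(parts)


# Made-up moments (in Bohr magnetons) for an 8-site cell, for illustration only
example_moments = [0.0, 0.0, 3.87, 3.87, 0.03, 0.03, 0.03, 0.03]
print(format_magmom_tag(example_moments))
```

The order of the moments has to match the site order in the POSCAR. The structure optimization itself is shown next.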

```python
from chgnet.model import StructOptimizer

relaxer = StructOptimizer()
# `structure` is the pymatgen Structure loaded in the examples above
result = relaxer.relax(structure)
print("CHGNet relaxed structure", result["final_structure"])
```

Fine-tuning will help achieve better accuracy if a high-precision study is desired. To train/tune a CHGNet, you need to define your data in a pytorch `Dataset` object. The example datasets are provided in `data/dataset.py`:

```python
from chgnet.data.dataset import StructureData, get_train_val_test_loader
from chgnet.trainer import Trainer

# The list_of_* variables are placeholders for your own DFT data
dataset = StructureData(
    structures=list_of_structures,
    energies=list_of_energies,
    forces=list_of_forces,
    stresses=list_of_stresses,
    magmoms=list_of_magmoms,
)
train_loader, val_loader, test_loader = get_train_val_test_loader(
    dataset, batch_size=32, train_ratio=0.9, val_ratio=0.05
)
trainer = Trainer(
    model=chgnet,  # the pretrained model loaded with CHGNet.load()
    targets="efsm",
    optimizer="Adam",
    criterion="MSE",
    learning_rate=1e-2,
    epochs=50,
    use_device="cuda",
)

trainer.train(train_loader, val_loader, test_loader)
```

- The energy used for training should be energy/atom if you're fine-tuning the pretrained `CHGNet`.
- The pretrained dataset of `CHGNet` comes from GGA+U DFT with `MaterialsProject2020Compatibility`. The parameters for VASP are described in `MPRelaxSet`. If you're fine-tuning with `MPRelaxSet`, it is recommended to apply the `MP2020` compatibility to your energy labels so that they're consistent with the pretrained dataset.
- If you're fine-tuning to functionals other than GGA, we recommend you refit the `AtomRef`.
- `CHGNet` stress is in units of GPa, and the unit conversion has already been included in `dataset.py`, so `VASP` stress can be directly fed to `StructureData`.
- To save time on the graph conversion step for each training run, we recommend you use `GraphData` defined in `dataset.py`, which reads graphs directly from a saved directory. To create saved graphs, see `examples/make_graphs.py`.
- Apple's Metal Performance Shaders (`MPS`) is currently disabled until a stable version of `pytorch` for `MPS` is released.
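The first note above (energy labels must be energy/atom) can be sketched as follows. This is a minimal illustration, assuming your DFT outputs give a total energy per cell in eV. The helper name `energy_per_atom` and the records are hypothetical and not part of CHGNet.

```python
def energy_per_atom(total_energy_ev, n_atoms):
    """Convert a total cell energy (eV) into an energy per atom (eV/atom)."""
    if n_atoms <= 0:
        raise ValueError("n_atoms must be a positive integer")
    return total_energy_ev / n_atoms


# Made-up (total energy in eV, number of atoms) pairs, for illustration only
dft_records = [(-47.2, 8), (-95.1, 16)]

# Per-atom labels in the form expected for the `energies` argument above
list_of_energies = [energy_per_atom(e, n) for e, n in dft_records]
print(list_of_energies)
```

Any compatibility correction, such as the `MP2020` scheme mentioned in the notes, would need to be applied to the energies before this normalization is used for training labels.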

Link to our paper: https://doi.org/10.48550/arXiv.2302.14231

Please cite the following:

```bib
@article{deng_2023_chgnet,
    title={{CHGNet: Pretrained universal neural network potential for charge-informed atomistic modeling}},
    author={Deng, Bowen and Zhong, Peichen and Jun, KyuJung and Riebesell, Janosh and Han, Kevin and Bartel, Christopher J and Ceder, Gerbrand},
    journal={arXiv preprint arXiv:2302.14231},
    year={2023},
    url = {https://arxiv.org/abs/2302.14231}
}
```

CHGNet is under active development. If you encounter any bugs in installation or usage, please open an issue. We appreciate your contributions!