Contact us

Would you like to contact us about actuated for your team or organisation?

Fill out this form, and we'll get in touch shortly after with next steps.

Actuated™ is a trademark of OpenFaaS Ltd.

Keeping in touch

- Follow us on Twitter - @selfactuated
- GitHub - github.com/self-actuated
- Customer Slack - self-actuated.slack.com

Anything else?

Looking for technical details about actuated? Try the FAQ.

Are you running into a problem? Try the troubleshooting guide.

Actuated Dashboard

The actuated dashboard is available to customers for their enrolled organisations.

For each organisation, you can see:

- Today's builds so far - a quick picture of today's activity across all enrolled organisations
- Runners - your build servers and their status
- Build queue - all builds queued for processing and their status
- Insights - full build history and usage by organisation, repo and user
- Job Increases - a list of jobs that have increased in duration by more than 5 minutes, plus a list of the affected jobs that week

Plus:

- CLI - install the CLI for management via command line
- SSH Sessions - connect to a runner via SSH to debug issues or to explore - works on hosted and actuated runners

Today's builds so far

On this page, you'll get today's total builds, total build minutes and a breakdown of the statuses, to see if you have a majority of successful or unsuccessful builds.

Today's activity at a glance

Underneath this section, there are a number of tips for enabling a container cache, adjusting your subscription plan and for joining the actuated Slack.

More detailed reports are available on the insights page.

Runners

Here you'll see if any of your servers are offline, in a draining status due to a restart/update, or online and ready to process builds.

The Ping time is how long it takes for the control-plane to check the agent's capacity.

Build queue

Only builds that are queued (not yet in progress) or already in progress will be shown on this page.

Find out how many builds are pending or running across your organisation and on which servers.

Insights

Three sets of insights are offered, all at the organisation level, so every repository is taken into account.

You can also switch the time window between 28 days, 14 days, 7 days or today.

The data is contrasted with the previous period to help you identify spikes and potential issues.

The data for reports always starts from the last complete day of data, so the last 7 days will start from the previous day.

Build history and usage by organisation

Understand when your builds are running at a higher level, across all of your organisations, in one place.

You can click on Minutes to switch to total time instead of total builds, to see if the demand on your servers is increasing or decreasing over time.

Build history by repository

When viewing usage at a repository level, you can easily identify anomalies and hot spots, like mounting build times, high failure rates or lots of cancelled jobs, which imply a potentially faulty interaction or trigger.

Build history per user

This is where you get to learn who is triggering the most builds, who may be a little less active for this period, and where folks may benefit from additional training due to a high failure rate of builds.

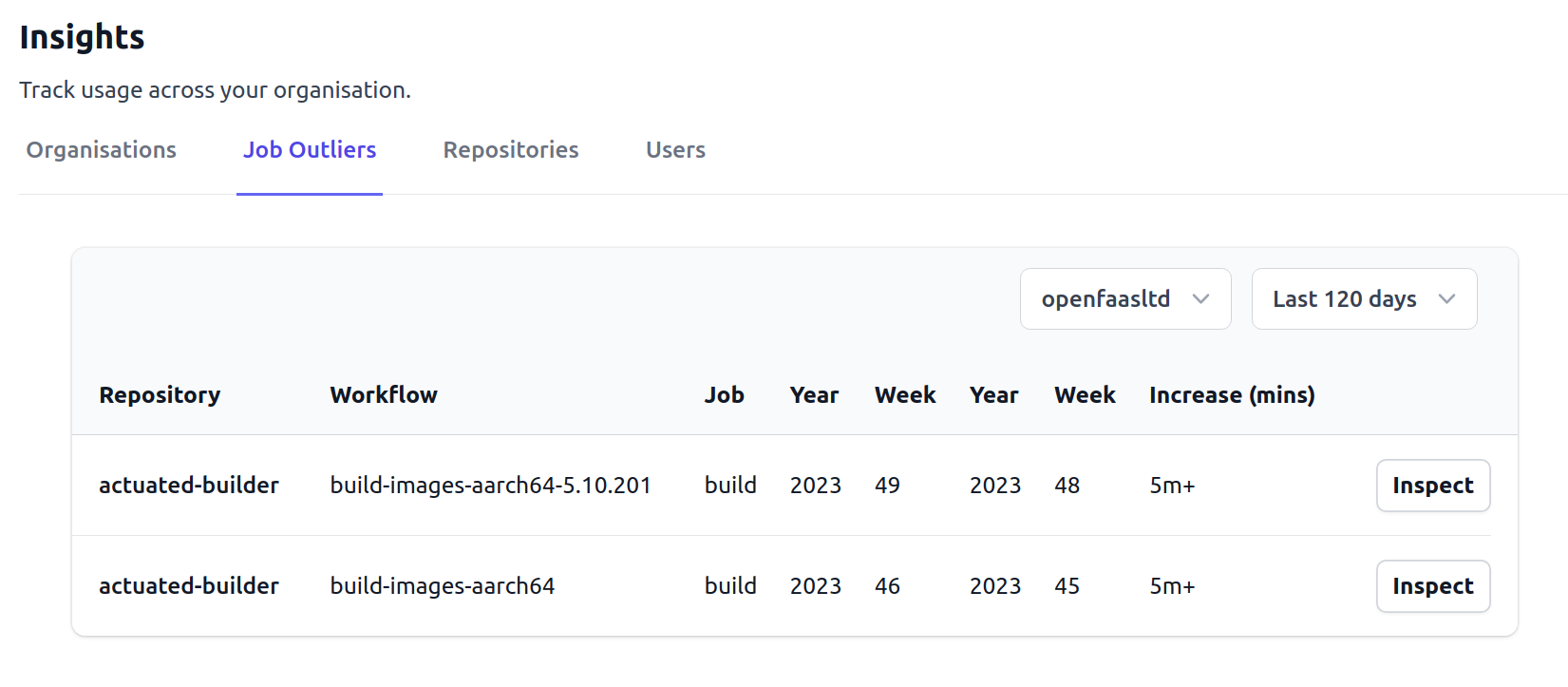

Job Increases

For up to 120 days of history, you can find jobs that have increased by more than 5 minutes in duration, week by week. This feature was requested by a team whose builds were roughly 60 minutes each on GitHub's hosted runners, and 20 minutes each on actuated. They didn't want those times to creep up without it being noticed and rectified.
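The week-over-week comparison can be sketched in a few lines of shell. This is only an illustration: the function name `job_increased` and its inputs (durations in seconds) are hypothetical, and it assumes "increased" means the duration grew by more than 5 minutes between two weeks. It is not how the dashboard is implemented.

```bash
# Hypothetical helper, not part of actuated: report whether a job's duration
# grew by more than 5 minutes (300 seconds) between two weeks.
# Usage: job_increased PREVIOUS_SECONDS CURRENT_SECONDS
job_increased() {
  previous="$1"
  current="$2"
  threshold=300   # 5 minutes, the threshold named in the text
  delta=$((current - previous))
  if [ "$delta" -gt "$threshold" ]; then
    echo "increased by ${delta}s"
    return 0
  fi
  echo "within threshold"
  return 1
}

# Example: a 20-minute job that now takes 27 minutes.
job_increased 1200 1620 || true
```

A job that moved from 20 to 23 minutes would be reported as within the threshold, so small fluctuations do not show up as outliers.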

Insights on outliers showing the time that the job increased by, and a button to drill down into the affected jobs that week.

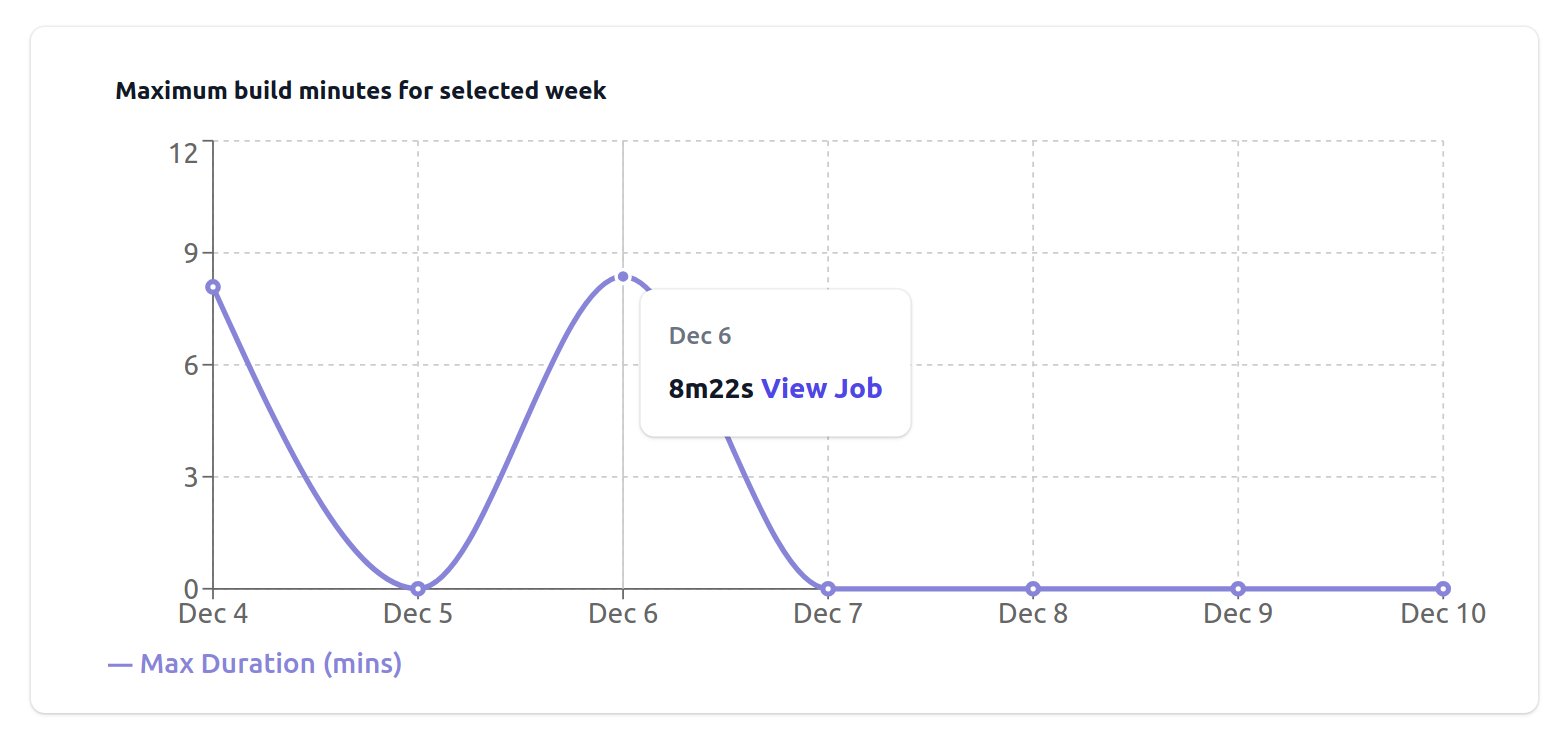

When you click "Inspect", a plot will be drawn with the maximum build time recorded on the days of the affected week. You can then click "View Job" to see what commit, Pull Request, or configuration change may have caused the increase.

A plot with the longest job run on each day of the affected week

SSH Sessions

Once you configure an action to pause at a set point by introducing our custom GitHub action step, you'll be able to copy and paste an SSH command and run it in your terminal.

Your SSH keys will be pre-installed and no password is required.

Viewing an SSH session to a hosted runner

See also: Example: Debug a job with SSH

CLI

The CLI page has download instructions. You can find the downloads for Linux, macOS and Windows here:

Example: Custom VM sizes

Our team will have configured your servers so that they always launch a pre-defined VM size. This keeps the user experience simple and predictable.

However, you can also request a specific VM size with up to 32 vCPUs and as much RAM as is available in the server. vCPU can be over-committed safely, but over-committing on RAM is not advised: if all of the RAM is required, one of the running VMs may exit or be terminated.
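To make the RAM advice concrete, here is a rough sketch that adds up the RAM requested by a set of VMs and compares it with the host's total. The function name `ram_fits` is hypothetical, and the check ignores any memory the host itself needs, which is an assumption made to keep the example short.

```bash
# Hypothetical check, not part of actuated: does the RAM requested by a set
# of VMs fit within the host's RAM?
# Usage: ram_fits HOST_GB VM_GB...
ram_fits() {
  host_gb="$1"
  shift
  total=0
  for gb in "$@"; do
    total=$((total + gb))
  done
  if [ "$total" -le "$host_gb" ]; then
    echo "ok: ${total}GB of ${host_gb}GB requested"
    return 0
  fi
  echo "over-committed: ${total}GB requested, ${host_gb}GB available"
  return 1
}

# Three 16GB VMs on a 64GB host fit; five would not.
ram_fits 64 16 16 16 || true
```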

Certified for:

- x86_64
- arm64 including Raspberry Pi 4

Request a custom VM size

For a custom size, just append -cpu- and -gb to the above labels, for example:

x86_64 example:

- actuated-1cpu-2gb
- actuated-4cpu-16gb

64-bit Arm example:

- actuated-arm64-4cpu-16gb
- actuated-arm64-32cpu-64gb

You can change vCPU and RAM independently. There are no set combinations, so you can customise both to whatever you like.

The upper limit for vCPU is 32.
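The label format can be expressed as a small helper. This is a sketch only: `actuated_label` is a hypothetical name, and the only facts taken from the text are the label pattern, the `actuated-arm64` prefix and the 32 vCPU limit.

```bash
# Hypothetical helper: build a runs-on label from an architecture, a vCPU
# count and a RAM size in GB. Only the label format comes from the docs.
# Usage: actuated_label ARCH VCPU RAM_GB
actuated_label() {
  arch="$1"
  vcpu="$2"
  ram_gb="$3"
  # The documented upper limit for vCPU is 32.
  if [ "$vcpu" -lt 1 ] || [ "$vcpu" -gt 32 ]; then
    echo "vCPU must be between 1 and 32" >&2
    return 1
  fi
  case "$arch" in
    x86_64) prefix="actuated" ;;
    arm64)  prefix="actuated-arm64" ;;
    *) echo "unknown architecture: $arch" >&2; return 1 ;;
  esac
  echo "${prefix}-${vcpu}cpu-${ram_gb}gb"
}

actuated_label x86_64 4 16   # prints actuated-4cpu-16gb
actuated_label arm64 32 64   # prints actuated-arm64-32cpu-64gb
```

The printed value is what you would place in the `runs-on` field of a workflow.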

Create a new file at: .github/workflows/build.yml and commit it to the repository.

name: specs

on: push
jobs:
  specs:
    runs-on: actuated-1cpu-2gb
    steps:
      - name: Print specs
        run: |
          nproc
          free -h

This will allocate 1x vCPU and 2GB of RAM to the VM. To run this same configuration for arm64, change runs-on to actuated-arm64-1cpu-2gb.

Example: Kubernetes with KinD

Docker CE is preinstalled in the actuated VM image, and will start upon boot-up.

Certified for:

- x86_64
- arm64 including Raspberry Pi 4

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new file at: .github/workflows/build.yml and commit it to the repository.

Try running a container to ping Google 3 times:

name: build

on: push
jobs:
  ping-google:
    runs-on: actuated-4cpu-16gb
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: Run a ping to Google with Docker
        run: |
          docker run --rm -i alpine:latest ping -c 3 google.com

Build a container with Docker:

name: build

on: push
jobs:
  build-in-docker:
    runs-on: actuated-4cpu-16gb
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: Build inlets-connect using Docker
        run: |
          git clone --depth=1 https://github.com/alexellis/inlets-connect
          cd inlets-connect
          docker build -t inlets-connect .
          docker images

To run this on ARM64, just change the actuated prefix from actuated- to actuated-arm64-.

Example: GitHub Actions cache

Jobs on Actuated runners start in a clean VM each time. This means dependencies need to be downloaded and build artifacts or caches rebuilt each time. Caching these files in the actions cache can improve workflow execution time.

A lot of the setup actions for package managers have support for caching built-in. See: setup-node, setup-python, etc. They require minimal configuration and will create and restore dependency caches for you.

If you have custom workflows that could benefit from caching, the cache can be configured manually using actions/cache.

Using the actions cache is not limited to GitHub hosted runners; it can also be used with self-hosted runners. Workflows using the cache action can be converted to run on Actuated runners. You only need to change runs-on: ubuntu-latest to runs-on: actuated.

Use the GitHub Actions cache

In this short example we will build alexellis/registry-creds. This is a Kubernetes operator that can be used to replicate Kubernetes ImagePullSecrets to all namespaces.

Enable caching on a supported action

Create a new file at: .github/workflows/build.yaml and commit it to the repository.

name: build

on: push

jobs:
  build:
    runs-on: actuated-4cpu-12gb
    steps:
      - uses: actions/checkout@v3
        with:
          repository: "alexellis/registry-creds"
      - name: Setup Golang
        uses: actions/setup-go@v3
        with:
          go-version: ~1.19
          cache: true
      - name: Build
        run: |
          CGO_ENABLED=0 GO111MODULE=on \
          go build -ldflags "-s -w -X main.Release=dev -X main.SHA=dev" -o controller

To configure caching with the setup-go action you only need to set the cache input parameter to true.

The cache is populated the first time this workflow runs. Running the workflow after this should be significantly faster because dependency files and build outputs are restored from the cache.

Manually configure caching

If there is no setup action for your language that supports caching, it can be configured manually.

Create a new file at: .github/workflows/build.yaml and commit it to the repository.

name: build

on: push

jobs:
  build:
    runs-on: actuated-4cpu-12gb
    steps:
      - uses: actions/checkout@v3
        with:
          repository: "alexellis/registry-creds"
      - name: Setup Golang
        uses: actions/setup-go@v3
        with:
          go-version: ~1.19
          cache: true
      - name: Setup Golang caches
        uses: actions/cache@v3
        with:
          path: |
            ~/.cache/go-build
            ~/go/pkg/mod
          key: ${{ runner.os }}-go-${{ hashFiles('**/go.sum') }}
          restore-keys: |
            ${{ runner.os }}-go-
      - name: Build
        run: |
          CGO_ENABLED=0 GO111MODULE=on \
          go build -ldflags "-s -w -X main.Release=dev -X main.SHA=dev" -o controller

The Setup Golang caches step uses the cache action to configure caching.

The path parameter is used to set the paths on the runner to cache or restore. The key parameter sets the key used when saving the cache. A hash of the go.sum file is used as part of the cache key.
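To see why hashing go.sum makes a useful cache key, the sketch below builds a key of the same shape locally. It is an approximation: `go_cache_key` is a hypothetical helper, and `sha256sum` stands in for the `hashFiles()` expression, so the digest will not match the one GitHub computes.

```bash
# Approximate the cache key "<os>-go-<hash of go.sum>" locally.
# Assumption: sha256sum stands in for hashFiles(); the real digest differs.
# Usage: go_cache_key OS_NAME PATH_TO_GO_SUM
go_cache_key() {
  os="$1"
  sum_file="$2"
  digest=$(sha256sum "$sum_file" | cut -d' ' -f1)
  echo "${os}-go-${digest}"
}

# Example (run from the root of a Go module):
# go_cache_key Linux go.sum
```

The key stays the same while go.sum is unchanged, so the cache is restored. Once a dependency changes, the digest changes and a new cache entry is saved, with restore-keys allowing the older entry to be used as a starting point.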

Further reading

- Check out the list of actions/cache examples to configure caching for different languages and frameworks.
- See our blog: Make your builds run faster with Caching for GitHub Actions

Example: Kubernetes with k3s

You may need to access Kubernetes within your build. K3s is a production-ready, lightweight distribution of Kubernetes that uses fewer resources than upstream. k3sup is a popular tool for installing k3s.

Certified for:

- x86_64
- arm64 including Raspberry Pi 4

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new file at: .github/workflows/build.yml and commit it to the repository.

Note that it's important to make sure Kubernetes is responsive before performing any commands like running a Pod or installing a helm chart.

name: k3sup-tester

on: push
jobs:
  k3sup-tester:
    runs-on: actuated-4cpu-16gb
    steps:
      - name: get arkade
        uses: alexellis/setup-arkade@v1
      - name: get k3sup and kubectl
        uses: alexellis/arkade-get@master
        with:
          kubectl: latest
          k3sup: latest
      - name: Install K3s with k3sup
        run: |
          mkdir -p $HOME/.kube/
          k3sup install --local --local-path $HOME/.kube/config
      - name: Wait until nodes ready
        run: |
          k3sup ready --quiet --kubeconfig $HOME/.kube/config --context default
      - name: Wait until CoreDNS is ready
        run: |
          kubectl rollout status deploy/coredns -n kube-system --timeout=300s
      - name: Explore nodes
        run: kubectl get nodes -o wide
      - name: Explore pods
        run: kubectl get pod -A -o wide

To run this on ARM64, just change the actuated prefix from actuated- to actuated-arm64-.

Example: Test compute time by compiling a Kernel

Use this sample to test the raw compute speed of your hosts by building a Kernel.

Certified for:

- x86_64

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new file at: .github/workflows/build.yml and commit it to the repository.

name: microvm-kernel

on: push
jobs:
  microvm-kernel:
    runs-on: actuated
    steps:
      - name: free RAM
        run: free -h
      - name: List CPUs
        run: nproc
      - name: get build toolchain
        run: |
          sudo apt update -qy
          sudo apt-get install -qy \
            git \
            build-essential \
            kernel-package \
            fakeroot \
            libncurses5-dev \
            libssl-dev \
            ccache \
            bison \
            flex \
            libelf-dev \
            dwarves
      - name: clone linux
        run: |
          time git clone https://github.com/torvalds/linux.git linux.git --depth=1 --branch v5.10
          cd linux.git
          curl -o .config -s -f https://raw.githubusercontent.com/firecracker-microvm/firecracker/main/resources/guest_configs/microvm-kernel-x86_64-5.10.config
          echo "# CONFIG_KASAN is not set" >> .config
      - name: make config
        run: |
          cd linux.git
          make oldconfig
      - name: Make vmlinux
        run: |
          cd linux.git
          time make vmlinux -j$(nproc)
          du -h ./vmlinux

When you have a build time, why not change runs-on: actuated to runs-on: ubuntu-latest to compare it to a hosted runner from GitHub?

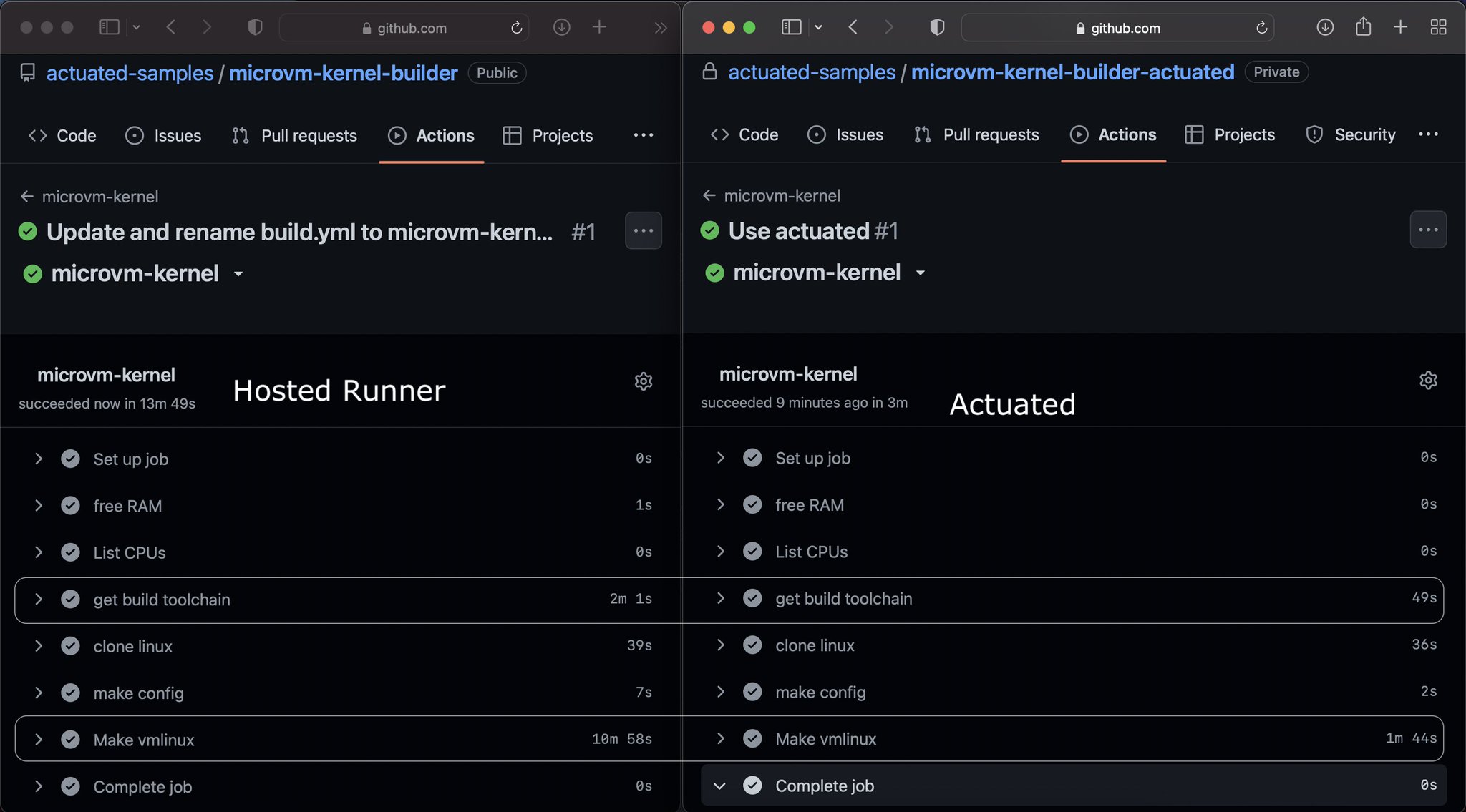

Here's our test, where our own machine built the Kernel 4x faster than a hosted runner:

Example: Kubernetes with KinD

You may need to access Kubernetes within your build. KinD is a popular option, and easy to run in an action.

Certified for:

- x86_64
- arm64 including Raspberry Pi 4

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new file at: .github/workflows/build.yml and commit it to the repository.

Note that it's important to make sure Kubernetes is responsive before performing any commands like running a Pod or installing a helm chart.

name: build

on: push
jobs:
  start-kind:
    runs-on: actuated-4cpu-16gb
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: get arkade
        uses: alexellis/setup-arkade@v1
      - name: get kubectl and kind
        uses: alexellis/arkade-get@master
        with:
          kubectl: latest
          kind: latest
      - name: Create a KinD cluster
        run: |
          mkdir -p $HOME/.kube/
          kind create cluster --wait 300s
      - name: Wait until CoreDNS is ready
        run: |
          kubectl rollout status deploy/coredns -n kube-system --timeout=300s
      - name: Explore nodes
        run: kubectl get nodes -o wide
      - name: Explore pods
        run: kubectl get pod -A -o wide
      - name: Show kubelet logs
        run: docker exec kind-control-plane journalctl -u kubelet

To run this on ARM64, just change the actuated prefix from actuated- to actuated-arm64-.

Using a registry mirror for KinD

Whilst the instructions for a registry mirror work for Docker and for buildkit, KinD uses its own containerd configuration, so it needs to be configured separately, as required.

When using KinD, if you're deploying images which are hosted on the Docker Hub, then you'll probably need to either authenticate to the Docker Hub, or configure the registry mirror running on your server.

Here's an example of how to create a KinD cluster, using a registry mirror for the Docker Hub:

#!/bin/bash

kind create cluster --wait 300s --config /dev/stdin <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
    endpoint = ["http://192.168.128.1:5000"]
EOF

With open source projects, you may need to run the build on GitHub's hosted runners some of the time, in which case you can check whether the mirror is available:

curl -f --connect-timeout 0.1 -s http://192.168.128.1:5000/v2/_catalog &> /dev/null

if [ "$?" == "0" ]
then
  echo "Mirror found, configure KinD for the mirror"
else
  echo "Mirror not found, use defaults"
fi
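The two snippets above can be combined so that one script serves both actuated and hosted runners. The sketch below is one way to do that, under the assumption that the result of the availability check is passed in as a URL (or an empty string when no mirror was found). The function name `render_kind_config` is hypothetical.

```bash
# Hypothetical helper: print a KinD cluster config, adding the containerd
# mirror patch only when a mirror URL is passed in.
# Usage: render_kind_config MIRROR_URL_OR_EMPTY
render_kind_config() {
  mirror="$1"
  echo "kind: Cluster"
  echo "apiVersion: kind.x-k8s.io/v1alpha4"
  if [ -n "$mirror" ]; then
    echo "containerdConfigPatches:"
    echo "- |-"
    echo "  [plugins.\"io.containerd.grpc.v1.cri\".registry.mirrors.\"docker.io\"]"
    echo "    endpoint = [\"$mirror\"]"
  fi
}

# Usage, assuming MIRROR holds the mirror URL when the curl check succeeded
# and is empty otherwise:
# render_kind_config "$MIRROR" | kind create cluster --wait 300s --config /dev/stdin
```

With an empty argument the output is a plain default cluster definition, so the same workflow keeps working on GitHub's hosted runners.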

To use authentication instead, create a Kubernetes secret of type docker-registry and then attach it to the default service account of each namespace within your cluster.

The OpenFaaS docs show how to do this for private registries, but the same applies for authenticating to the Docker Hub to raise rate-limits.

You may also like Alex's alexellis/registry-creds project which will replicate your Docker Hub credentials into each namespace within a cluster, to make sure images are pulled with the correct credentials.

Example: Run a KVM guest

It is possible to launch a Virtual Machine (VM) within a GitHub Action. Support for virtualization is not enabled by default for Actuated. The Agent has to be configured to use a custom kernel.

There are some prerequisites to enable KVM support:

- aarch64 runners are not supported at the moment.
- A bare-metal host for the Agent is required.

Nested virtualisation is a premium feature

This feature requires a plan size of 15 concurrent builds or greater. However, you can get a 14-day free trial by contacting our team directly through the actuated Slack.

Configure the Agent

1. Make sure nested virtualization is enabled on the Agent host.

2. Edit /etc/default/actuated on the Actuated Agent and add the kvm suffix to the AGENT_KERNEL_REF variable:

   - AGENT_KERNEL_REF="ghcr.io/openfaasltd/actuated-kernel:x86_64-latest"
   + AGENT_KERNEL_REF="ghcr.io/openfaasltd/actuated-kernel:x86_64-kvm-latest"

3. Also add it to the AGENT_IMAGE_REF line:

   - AGENT_IMAGE_REF="ghcr.io/openfaasltd/actuated-ubuntu22.04:x86_64-latest"
   + AGENT_IMAGE_REF="ghcr.io/openfaasltd/actuated-ubuntu22.04:x86_64-kvm-latest"

4. Restart the Agent to use the new kernel.

   sudo systemctl daemon-reload && \
     sudo systemctl restart actuated

5. Run a test build to verify KVM support is enabled in the runner. The specs script from the test build will report whether /dev/kvm is available.
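If you want to check for the device yourself inside a job, something along these lines would do. It is a minimal sketch and not the specs script mentioned above; the function name `kvm_available` is hypothetical, and the presence of the device node does not by itself prove that the current user may open it.

```bash
# Report whether a KVM device node is present.
# The device path is a parameter so the check can be exercised anywhere;
# it defaults to /dev/kvm.
kvm_available() {
  device="${1:-/dev/kvm}"
  # -c is true only for character devices, which is what /dev/kvm is.
  if [ -c "$device" ]; then
    echo "KVM available at $device"
    return 0
  fi
  echo "KVM not available at $device"
  return 1
}

kvm_available || true
```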

Run a Firecracker microVM

This example is an adaptation of the Firecracker quickstart guide that we run from within a GitHub Actions workflow.

The workflow installs Firecracker, configures and boots a guest VM and then waits 20 seconds before shutting down the VM and exiting the workflow.

1. Create a new repository and add a workflow file.

   The workflow file: ./.github/workflows/vm-run.yaml:

   name: vm-run

   on: push
   jobs:
     vm-run:
       runs-on: actuated-4cpu-8gb
       steps:
         - uses: actions/checkout@master
           with:
             fetch-depth: 1
         - name: Install arkade
           uses: alexellis/setup-arkade@v2
         - name: Install firecracker
           run: sudo arkade system install firecracker
         - name: Run microVM
           run: sudo ./run-vm.sh

2. Add the run-vm.sh script to the root of the repository.

   Running the script will:

   - Get the kernel and rootfs for the microVM
   - Start Firecracker and configure the guest kernel and rootfs
   - Start the guest machine
   - Wait for 20 seconds and kill the Firecracker process so the workflow finishes

   The run-vm.sh script:

   #!/bin/bash

   # Clone the example repo
   git clone https://github.com/skatolo/nested-firecracker.git

   # Run the VM script
   ./nested-firecracker/run-vm.sh

3. Hit commit and check the run logs of the workflow. You should find the login prompt of the running microVM in the logs.

The full example is available on GitHub

For more examples and use-cases see:

Example: Regression test against various Kubernetes versions

This example launches multiple Kubernetes clusters in parallel for regression and end-to-end testing.

In the example, we're testing the CRD for the inlets-operator on versions v1.16 through to v1.25. You could also switch out k3s for KinD, if you prefer.

See also: Actuated with KinD

Launching 10 Kubernetes clusters in parallel across your fleet of Actuated Servers.

Certified for:

- x86_64
- arm64 including Raspberry Pi 4

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new file at: .github/workflows/build.yml and commit it to the repository.

Customise the "k3s" array with the versions you need to test, and replace the "Test crds" step with whatever you need to install, such as Helm charts.

name: k3s-test-matrix

on:
  pull_request:
    branches:
      - '*'
  push:
    branches:
      - master
      - main

jobs:
  kubernetes:
    name: k3s-test-${{ matrix.k3s }}
    runs-on: actuated-4cpu-12gb
    strategy:
      matrix:
        k3s: [v1.16, v1.17, v1.18, v1.19, v1.20, v1.21, v1.22, v1.23, v1.24, v1.25]

    steps:
      - uses: actions/checkout@v1
      - uses: alexellis/setup-arkade@v2
      - uses: alexellis/arkade-get@master
        with:
          kubectl: latest
          k3sup: latest

      - name: Create Kubernetes ${{ matrix.k3s }} cluster
        run: |
          mkdir -p $HOME/.kube/
          k3sup install \
            --local \
            --k3s-channel ${{ matrix.k3s }} \
            --local-path $HOME/.kube/config \
            --merge \
            --context default
          cat $HOME/.kube/config

          k3sup ready --context default
          kubectl config use-context default

          # Just an extra test on top.
          echo "Waiting for nodes to be ready ..."
          kubectl wait --for=condition=Ready nodes --all --timeout=5m
          kubectl get nodes -o wide

      - name: Test crds
        run: |
          echo "Applying CRD"
          kubectl apply -f https://raw.githubusercontent.com/inlets/inlets-operator/master/artifacts/crds/inlets.inlets.dev_tunnels.yaml

The matrix will cause a new VM to be launched for each item in the "k3s" array.
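As a quick way to picture the fan-out, the loop below prints the job name that the `name: k3s-test-${{ matrix.k3s }}` expression would produce for each entry. It is purely illustrative: the function name `matrix_job_names` is hypothetical, and the version range is hard-coded to match the array in the workflow.

```bash
# Print one job name per matrix entry, mirroring "k3s-test-${{ matrix.k3s }}".
# seq generates the minor versions 16..25 used in the workflow.
matrix_job_names() {
  for minor in $(seq 16 25); do
    echo "k3s-test-v1.${minor}"
  done
}

matrix_job_names
```

Ten names are printed, one per job, and each job is scheduled into its own VM.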

Example: matrix-build - run a VM for each job in a matrix

Use this sample to test launching multiple VMs in parallel.

Certified for:

- x86_64
- arm64 including Raspberry Pi 4

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new file at: .github/workflows/build.yml and commit it to the repository.

name: CI

on:
  pull_request:
    branches:
      - '*'
  push:
    branches:
      - master
      - main

jobs:
  arkade-e2e:
    name: arkade-e2e
    runs-on: actuated-4cpu-12gb
    strategy:
      matrix:
        apps: [run-job,k3sup,arkade,kubectl,faas-cli]
    steps:
      - name: Get arkade
        run: |
          curl -sLS https://get.arkade.dev | sudo sh
      - name: Download app
        run: |
          echo ${{ matrix.apps }}
          arkade get ${{ matrix.apps }}
          file /home/runner/.arkade/bin/${{ matrix.apps }}

The matrix will cause a new VM to be launched for each item in the "apps" array.

Example: Multi-arch with buildx

A multi-arch or multi-platform container is effectively where you build the same container image for multiple different Operating Systems or CPU architectures, and link them together under a single name.

So you may publish an image named: ghcr.io/inlets/inlets-operator:latest, but when this image is fetched by a user, a manifest file is downloaded, which directs the user to the appropriate image for their architecture.

If you'd like to see what these look like, run the following with arkade:

arkade get crane

crane manifest ghcr.io/inlets/inlets-operator:latest

You'll see a manifests array, with a platform section for each image:

{
  "mediaType": "application/vnd.docker.distribution.manifest.list.v2+json",
  "manifests": [
    {
      "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
      "digest": "sha256:bae8025e080d05f1db0e337daae54016ada179152e44613bf3f8c4243ad939df",
      "platform": {
        "architecture": "amd64",
        "os": "linux"
      }
    },
    {
      "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
      "digest": "sha256:3ddc045e2655f06653fc36ac88d1d85e0f077c111a3d1abf01d05e6bbc79c89f",
      "platform": {
        "architecture": "arm64",
        "os": "linux"
      }
    }
  ]
}
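To pull just the platforms out of a manifest like the one shown above, a crude text filter is enough for a quick look. The sketch below assumes pretty-printed output in which "architecture" appears before "os" within each platform section, as in the sample. A JSON-aware tool would be more robust, and `list_platforms` is a hypothetical name.

```bash
# Print "os/architecture" for each platform entry read from stdin.
# Assumption: pretty-printed JSON with "architecture" listed before "os".
list_platforms() {
  awk -F'"' '
    /"architecture"/ { arch = $4 }
    /"os"/           { print $4 "/" arch }
  '
}

# Usage (requires crane and network access):
# crane manifest ghcr.io/inlets/inlets-operator:latest | list_platforms
```

For the sample manifest this gives one line per image, which makes it easy to confirm that every platform you intended to publish is actually present.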

Try an example

This example is taken from the Open Source inlets-operator.

It builds a container image containing a Go binary and uses a Dockerfile in the root of the repository. All of the images and corresponding manifest are published to GitHub's Container Registry (GHCR). The action itself is able to authenticate to GHCR using a built-in, short-lived token. This is dependent on the "permissions" section and "packages: write" being set.

View publish.yaml, adapted for actuated:

 name: publish

 on:
   push:
     tags:
       - '*'

 jobs:
   publish:
+    permissions:
+      packages: write

-    runs-on: ubuntu-latest
+    runs-on: actuated-4cpu-12gb
     steps:
       - uses: actions/checkout@master
         with:
           fetch-depth: 1

+      - name: Setup mirror
+        uses: self-actuated/hub-mirror@master
       - name: Get TAG
         id: get_tag
         run: echo TAG=${GITHUB_REF#refs/tags/} >> $GITHUB_ENV
       - name: Get Repo Owner
         id: get_repo_owner
         run: echo "REPO_OWNER=$(echo ${{ github.repository_owner }} | tr '[:upper:]' '[:lower:]')" >> $GITHUB_ENV

       - name: Set up QEMU
         uses: docker/setup-qemu-action@v3
       - name: Set up Docker Buildx
         uses: docker/setup-buildx-action@v3
       - name: Login to container Registry
         uses: docker/login-action@v3
         with:
           username: ${{ github.repository_owner }}
           password: ${{ secrets.GITHUB_TOKEN }}
           registry: ghcr.io

       - name: Release build
         id: release_build
         uses: docker/build-push-action@v5
         with:
           outputs: "type=registry,push=true"
           platforms: linux/amd64,linux/arm/v6,linux/arm64
           build-args: |
             Version=${{ env.TAG }}
             GitCommit=${{ github.sha }}
           tags: |
             ghcr.io/${{ env.REPO_OWNER }}/inlets-operator:${{ github.sha }}
             ghcr.io/${{ env.REPO_OWNER }}/inlets-operator:${{ env.TAG }}
             ghcr.io/${{ env.REPO_OWNER }}/inlets-operator:latest

You'll see that we added a Setup mirror step. This is explained in the Registry Mirror example.

The docker/setup-qemu-action@v3 step is responsible for setting up QEMU, which is used to emulate the different CPU architectures.

The docker/build-push-action@v5 step is responsible for passing in a number of platform combinations such as: linux/amd64 for cloud, linux/arm64 for Arm servers and linux/arm/v6 for Raspberry Pi.
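The Get TAG and Get Repo Owner steps in the workflow rely on two small shell idioms, shown here in isolation. The function names `tag_from_ref` and `lowercase_owner` are hypothetical wrappers added for illustration; the parameter expansion and the `tr` call are the same ones used in the workflow.

```bash
# Strip the "refs/tags/" prefix from a Git ref, as the "Get TAG" step does.
tag_from_ref() {
  ref="$1"
  echo "${ref#refs/tags/}"
}

# Lower-case an owner name, as the "Get Repo Owner" step does. Container
# image repository names must be lower-case.
lowercase_owner() {
  echo "$1" | tr '[:upper:]' '[:lower:]'
}

tag_from_ref "refs/tags/0.1.0"   # prints 0.1.0
lowercase_owner "OpenFaaS"       # prints openfaas
```

In the workflow, both values are written to $GITHUB_ENV so that later steps can read them as env.TAG and env.REPO_OWNER.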

Within the Dockerfile, we needed to make a couple of changes.

You can choose to run a build stage on either the BUILDPLATFORM or the TARGETPLATFORM. The BUILDPLATFORM is the native architecture and platform of the machine performing the build, which is usually amd64. The TARGETPLATFORM is important for the final step of the build, and will be injected for each of the platforms you have specified in the step.

- FROM golang:1.22 as builder
+ FROM --platform=${BUILDPLATFORM:-linux/amd64} golang:1.22 as builder

For Go specifically, we also updated the go build command to tell Go to use cross-compilation based upon the TARGETOS and TARGETARCH environment variables, which are populated by Docker.

GOOS=${TARGETOS} GOARCH=${TARGETARCH} go build -o inlets-operator
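To make the relationship between a platform string and these variables concrete, the sketch below splits a value such as linux/arm/v6 into its parts. Docker performs this split itself and also provides TARGETVARIANT for the third component; the function `split_platform` is a hypothetical illustration and is not something you need in a Dockerfile.

```bash
# Split a platform string such as "linux/arm/v6" into os, arch and variant,
# mirroring the TARGETOS, TARGETARCH and TARGETVARIANT build arguments.
split_platform() {
  platform="$1"
  os="${platform%%/*}"     # text before the first slash
  rest="${platform#*/}"    # text after the first slash
  arch="${rest%%/*}"
  variant=""
  case "$rest" in
    */*) variant="${rest#*/}" ;;
  esac
  echo "TARGETOS=$os TARGETARCH=$arch TARGETVARIANT=$variant"
}

split_platform linux/arm64    # prints TARGETOS=linux TARGETARCH=arm64 TARGETVARIANT=
split_platform linux/arm/v6   # prints TARGETOS=linux TARGETARCH=arm TARGETVARIANT=v6
```

For linux/arm/v6 the Go cross-compile would therefore run with GOOS=linux and GOARCH=arm.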

Learn more in the Docker Documentation: Multi-platform images

Is it slow to build for Arm?

Using QEMU can be slow at times, especially when building an image for Arm using a hosted GitHub Runner.

We found that we could reduce an Open Source project's build time by 22x, from ~36 minutes to 1 minute 26 seconds.

See also How to make GitHub Actions 22x faster with bare-metal Arm

To build a separate image for Arm on an Arm runner, and one for amd64, you could use a matrix build.

Need a hand with GitHub Actions?

Check your plan to see if access to Slack is included. If so, you can contact us on Slack for help and guidance.

Example: Publish an OpenFaaS function

This example will create a Kubernetes cluster using KinD, deploy OpenFaaS using Helm, deploy a function, then invoke the function. There are some additional checks for readiness for Kubernetes and the OpenFaaS gateway.

You can adapt this example for any other Helm charts you may have for E2E testing.

We also recommend considering arkade for installing CLIs and common Helm charts for testing.

Docker CE is preinstalled in the actuated VM image, and will start upon boot-up.

Certified for:

- x86_64
- arm64

Use a private repository if you're not using actuated yet

GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using Actions Runner Controller. Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

Try out the action on your agent

Create a new GitHub repository in your organisation.

Add: .github/workflows/e2e.yaml

name: e2e

on:
  push:
    branches:
      - '*'
  pull_request:
    branches:
      - '*'

permissions:
  actions: read
  contents: read

jobs:
  e2e:
    runs-on: actuated-4cpu-12gb
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: get arkade
        uses: alexellis/setup-arkade@v1
      - name: get kubectl, kind and faas-cli
        uses: alexellis/arkade-get@master
        with:
          kubectl: latest
          kind: latest
          faas-cli: latest
      - name: Install Kubernetes KinD
        run: |
          mkdir -p $HOME/.kube/
          kind create cluster --wait 300s
      - name: Add Helm chart, update repos and apply namespaces
        run: |
          kubectl apply -f https://raw.githubusercontent.com/openfaas/faas-netes/master/namespaces.yml
          helm repo add openfaas https://openfaas.github.io/faas-netes/
          helm repo update
      - name: Install the Community Edition (CE)
        run: |
          helm repo update \
            && helm upgrade openfaas --install openfaas/openfaas \
            --namespace openfaas \
            --set functionNamespace=openfaas-fn \
            --set generateBasicAuth=true
      - name: Wait until OpenFaaS is ready
        run: |
          kubectl rollout status -n openfaas deploy/prometheus --timeout 5m
          kubectl rollout status -n openfaas deploy/gateway --timeout 5m
      - name: Port forward the gateway
        run: |
          kubectl port-forward -n openfaas svc/gateway 8080:8080 &

          attempts=0
          max=10

          until $(curl --output /dev/null --silent --fail http://127.0.0.1:8080/healthz ); do
            if [ ${attempts} -eq ${max} ]; then
              echo "Max attempts reached $max waiting for gateway's health endpoint"
              exit 1
            fi

            printf '.'
            attempts=$(($attempts+1))
            sleep 1
          done
      - name: Login to OpenFaaS gateway and deploy a function
        run: |
          PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)
          echo -n $PASSWORD | faas-cli login --username admin --password-stdin

          faas-cli store deploy env

          faas-cli invoke env <<< ""

          curl -s -f -i http://127.0.0.1:8080/function/env

          faas-cli invoke --async env <<< ""

          kubectl logs -n openfaas deploy/queue-worker

          faas-cli describe env

+If you'd like to deploy the function, check out a more comprehensive example of how to log in and deploy in Serverless For Everyone Else
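The "Port forward the gateway" step in the workflow above polls the health endpoint in an inline loop. If you repeat that pattern across several E2E jobs, it could be factored into a small function. The sketch below is only an illustration: the name `wait_for` and its arguments are hypothetical and not part of actuated or OpenFaaS.

```bash
# Hypothetical helper: retry a command until it succeeds or attempts run out.
# usage: wait_for <max_attempts> <delay_seconds> <command> [args...]
wait_for() {
  local max="$1" delay="$2"
  shift 2
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "Gave up after ${max} attempts: $*" >&2
      return 1
    fi
    attempt=$(( attempt + 1 ))
    sleep "$delay"
  done
}

# Example, mirroring the gateway check in the workflow:
# wait_for 10 1 curl --output /dev/null --silent --fail http://127.0.0.1:8080/healthz
```

Passing the command as arguments keeps the function independent of curl, so the same helper could wait on kubectl or faas-cli commands too.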


+ Example: Publish an OpenFaaS function¶

+This example will publish an OpenFaaS function to GitHub's Container Registry (GHCR).

+-

+

- The example uses Docker's buildx and QEMU for a multi-arch build +

- Dynamic variables to inject the SHA and OWNER name from the repo +

- Uses the token that GitHub assigns to the action to publish the containers. +

You can also run this example on GitHub's own hosted runners.

+Docker CE is preinstalled in the actuated VM image, and will start upon boot-up.

+Certified for:

- x86_64

Use a private repository if you're not using actuated yet

+GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using actions-runner-controller (ARC). Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

+Try out the action on your agent¶

+In alexellis' repository, alexellis/autoscaling-functions, check out the .github/workflows/publish.yml file:

-

+

- The "Set up QEMU" and "Set up Docker Buildx" steps configure the builder to produce a multi-arch image. +

- The "OWNER" variable means this action can be run on any organisation without having to hard-code a username for GHCR. +

- Only the bcrypt function is being built because the --filter flag was added; remove it to build all functions in the stack.yml. +

- --platforms linux/amd64,linux/arm64,linux/arm/v7 will build for regular Intel/AMD machines, 64-bit Arm and 32-bit Arm, i.e. Raspberry Pi. Most users can reduce this list to just "linux/amd64" for a speed improvement.

+

Make sure you edit runs-on: and set it to runs-on: actuated-4cpu-12gb

name: publish

+

+on:

+ push:

+ branches:

+ - '*'

+ pull_request:

+ branches:

+ - '*'

+

+permissions:

+ actions: read

+ checks: write

+ contents: read

+ packages: write

+

+jobs:

+ publish:

+ runs-on: actuated-4cpu-12gb

+ steps:

+ - uses: actions/checkout@master

+ with:

+ fetch-depth: 1

+ - name: Get faas-cli

+ run: curl -sLSf https://cli.openfaas.com | sudo sh

+ - name: Pull custom templates from stack.yml

+ run: faas-cli template pull stack

+ - name: Set up QEMU

+ uses: docker/setup-qemu-action@v3

+ - name: Set up Docker Buildx

+ uses: docker/setup-buildx-action@v3

+ - name: Get TAG

+ id: get_tag

+ run: echo "TAG=latest-dev" >> "$GITHUB_OUTPUT"

+ - name: Get Repo Owner

+ id: get_repo_owner

+ run: >

+ echo "repo_owner=$(echo ${{ github.repository_owner }} |

+ tr '[:upper:]' '[:lower:]')" >> "$GITHUB_OUTPUT"

+ - name: Docker Login

+ run: >

+ echo ${{secrets.GITHUB_TOKEN}} |

+ docker login ghcr.io --username

+ ${{ steps.get_repo_owner.outputs.repo_owner }}

+ --password-stdin

+ - name: Publish functions

+ run: >

+ OWNER="${{ steps.get_repo_owner.outputs.repo_owner }}"

+ TAG="latest"

+ faas-cli publish

+ --extra-tag ${{ github.sha }}

+ --build-arg GO111MODULE=on

+ --platforms linux/amd64,linux/arm64,linux/arm/v7

+ --filter bcrypt

+If you'd like to deploy the function, check out a more comprehensive example of how to log in and deploy in Serverless For Everyone Else


+ Example: Get system information about your microVM¶

+This sample reveals system information about your runner.

+Certified for:

- x86_64

- arm64

Use a private repository if you're not using actuated yet

+GitHub recommends using a private repository with self-hosted runners because changes can be left over from a previous run, even when using actions-runner-controller (ARC). Actuated uses an ephemeral VM with an immutable image, so it can be used on both public and private repos. Learn why in the FAQ.

+Try out the action on your agent¶

+Create a specs.sh file:

+#!/bin/bash

+

+echo Hostname: $(hostname)

+

+echo whoami: $(whoami)

+

+echo Information on main disk

+df -h /

+

+echo Memory info

+free -h

+

+echo Total CPUs:

+echo CPUs: $(nproc)

+

+echo CPU Model

+cat /proc/cpuinfo |grep "model name"

+

+echo Kernel and OS info

+uname -a

+

+if ! [ -e /dev/kvm ]; then

+ echo "/dev/kvm does not exist"

+else

+ echo "/dev/kvm exists"

+fi

+

+echo OS info: $(cat /etc/os-release)

+

+echo PATH: ${PATH}

+

+echo Egress IP:

+curl -s -L -S https://checkip.amazonaws.com

+Create a new file at: .github/workflows/build.yml and commit it to the repository.

name: CI

+

+on:

+ pull_request:

+ branches:

+ - '*'

+ push:

+ branches:

+ - master

+

+jobs:

+ specs:

+ name: specs

+ runs-on: actuated-4cpu-12gb

+ steps:

+ - uses: actions/checkout@v1

+ - name: Check specs

+ run: |

+ ./specs.sh

+Note how the hostname changes every time the job is run.

+Perform a basic benchmark¶

+Update the specs.sh file to include benchmarks for disk and network connection:

+echo Installing hdparm

+

+sudo apt update -qqqqy && sudo apt install -qqqqy hdparm

+

+echo Read speed

+

+sudo hdparm -t $(mount |grep "/ "|cut -d " " -f1)

+

+echo Write speed

+

+sync; dd if=/dev/zero of=./tempfile bs=1M count=1024; sync

+

+echo Where is this runner?

+

+curl -s http://ip-api.com/json|jq

+

+echo Information on main disk

+

+df -h /

+

+echo Public IP:

+

+curl -s -L -S https://checkip.amazonaws.com

+

+echo Checking speed

+sudo pip install speedtest-cli

+speedtest-cli

+For the fastest servers backed by NVMes, with VMs running on a dedicated drive, we tend to see:

+-

+

- Read speeds of 1000+ MB/s. +

- Write speeds of 1000+ MB/s. +

The Internet speed test will give you a good idea of how quickly large artifacts can be uploaded or downloaded during jobs.

+The instructions for a Docker registry cache on the server can make using container images from public registries much quicker.


+ Expose agent

+ +Expose the agent's API over HTTPS¶

+The actuated agent serves HTTP, and is accessed by the Actuated control plane.

+We expect most of our customers to be using hosts with public IP addresses, where an API token plus TLS is a battle-tested combination.

+For anyone running with private hosts within a firewall, a private peering option is available for enterprise companies, or our inlets network tunnel can be used with an IP allow list.

+For a host on a public cloud¶

+If you're running the agent on a host with a public IP, you can use the built-in TLS mechanism in the actuated agent to receive a certificate from Let's Encrypt, valid for 90 days. The certificate will be renewed by the actuated agent, so there are no additional administration tasks required.

+The installation will automatically configure the below settings. They are included just for reference, so you can understand what's involved or tweak the settings if necessary.

+

++Pictured: Accessing the agent's endpoint using built-in TLS and Let's Encrypt

+

Determine the public IP of your instance:

+# curl -s http://checkip.amazonaws.com

+

+141.73.80.100

+Now imagine that your sub-domain is agent.example.com. You need to create a DNS A record pointing agent.example.com to 141.73.80.100 (or a CNAME record pointing to a hostname that resolves to it), changing both the sub-domain and IP to your own.

Once the agent is installed, edit /etc/default/actuated on the agent and set the following two variables:

+AGENT_LETSENCRYPT_DOMAIN="agent.example.com"

+AGENT_LETSENCRYPT_EMAIL="webmaster@agent.example.com"
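Before restarting, it may help to confirm that both settings are present and non-empty. The following is a minimal sketch; the function name `check_letsencrypt_env` is hypothetical and not part of the actuated agent, and it only inspects the file rather than validating DNS or the certificate.

```bash
# Hypothetical pre-flight check: confirm both Let's Encrypt settings are
# present and non-empty in an env file such as /etc/default/actuated.
check_letsencrypt_env() {
  local file="$1" key missing=0
  for key in AGENT_LETSENCRYPT_DOMAIN AGENT_LETSENCRYPT_EMAIL; do
    # Match KEY="value" or KEY=value with at least one character in the value.
    if ! grep -Eq "^${key}=\"?[^\"[:space:]]+" "$file"; then
      echo "missing or empty: ${key}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example:
# check_letsencrypt_env /etc/default/actuated && echo "settings look complete"
```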

+Restart the agent:

+sudo systemctl daemon-reload

+sudo systemctl restart actuated

+Your agent's endpoint URL is going to be: https://agent.example.com on port 443

Private hosts - private peering for enterprises¶

+For enterprise customers, we can offer private peering of the actuated agent for when your servers are behind a corporate firewall, or have no inbound Internet access.

+

++Peering example for an enterprise with two agents within their own private firewalls.

+

This option is built into the actuated agent, and requires no additional setup, firewall or routing rules. It's similar to how the GitHub Actions agent works: it creates an outbound connection, without relying on any inbound data path.

+-

+

- The client makes an outbound connection to the Actuated control-plane. +

- If for any reason, the connection gets closed or severed, it will reconnect automatically. +

- All traffic is encrypted with HTTPS. +

- Only the Actuated control-plane will be able to communicate with the agent, privately. +

Private hosts - behind NAT or at the office¶

+The default way to configure a server for actuated is to have its HTTPS endpoint available on the public Internet. A quick and easy way to do that is with our inlets network tunnel tool. This works by creating a VM with a public IP address, then connecting a client from your private network to the VM. The port on the private machine then becomes available on the public VM for the Actuated control-plane to access as required.

+An IP allow-list can also be configured with the egress IP address of the Actuated control-plane. We will provide the egress IP address upon request to customers.

+

++Pictured: Accessing the agent's private endpoint using an inlets-pro tunnel

+

Reach out to us if you'd like us to host a tunnel server for you, alternatively, you can follow the instructions below to set up your own.

+The inletsctl tool will create an HTTPS tunnel server for you on your favourite cloud, with an HTTPS certificate obtained from Let's Encrypt.

+If you have just the one Actuated Agent:

+export AGENT_DOMAIN=agent1.example.com

+export LE_EMAIL=webmaster@agent1.example.com

+

+arkade get inletsctl

+sudo mv $HOME/.arkade/bin/inletsctl /usr/local/bin/

+

+inletsctl create \

+ --provider digitalocean \

+ --region lon1 \

+ --token-file $HOME/do-token \

+ --letsencrypt-email $LE_EMAIL \

+ --letsencrypt-domain $AGENT_DOMAIN

+Then note down the tunnel's wss:// URL and token.

+If you wish to configure an IP allow list, log into the VM with SSH and then edit the systemd unit file for inlets-pro. Add the actuated controller egress IP as per these instructions.

Then run an HTTPS client to expose your agent, with WSS_URL and TOKEN set to the values you noted down:

+inlets-pro http client \

+ --url $WSS_URL \

+ --token $TOKEN \

+ --upstream http://127.0.0.1:8081

+For two or more Actuated Servers:

+export AGENT_DOMAIN1=agent1.example.com

+export AGENT_DOMAIN2=agent2.example.com

+export LE_EMAIL=webmaster@agent1.example.com

+

+arkade get inletsctl

+sudo mv $HOME/.arkade/bin/inletsctl /usr/local/bin/

+

+inletsctl create \

+ --provider digitalocean \

+ --region lon1 \

+ --token-file $HOME/do-token \

+ --letsencrypt-email $LE_EMAIL \

+ --letsencrypt-domain $AGENT_DOMAIN1 \

+ --letsencrypt-domain $AGENT_DOMAIN2

+Then note down the tunnel's wss:// URL and token.

+Then run an HTTPS client on each Actuated Server to expose its agent, using that server's unique agent domain:

+export AGENT_DOMAIN1=agent1.example.com

+inlets-pro http client \

+ --url $WSS_URL \

+ --token $TOKEN \

+ --upstream $AGENT_DOMAIN1=http://127.0.0.1:8081

+export AGENT_DOMAIN2=agent2.example.com

+inlets-pro http client \

+ --url $WSS_URL \

+ --token $TOKEN \

+ --upstream $AGENT_DOMAIN2=http://127.0.0.1:8081

+You can generate a systemd service (so that inlets restarts upon disconnection and after a reboot) by adding --generate=systemd > inlets.service to the inlets-pro command, then running:

sudo cp inlets.service /etc/systemd/system/

+sudo systemctl daemon-reload

+sudo systemctl enable inlets.service

+sudo systemctl start inlets

+

+# Check status with:

+sudo systemctl status inlets

+Your agent's endpoint URL is going to be: https://$AGENT_DOMAIN.

Preventing the runner from accessing your local network¶

+Network segmentation

+Proper network segmentation of hosts running the actuated agent is required. This is to prevent runners from making outbound connections to other hosts on your local network. We will not accept any responsibility for your configuration.

+If hardware isolation is not available, iptables rules may provide an alternative for isolating the runners from your network.

+Imagine you were using a LAN range of 192.168.0.0/24, with a router of 192.168.0.1, then the following probes and tests show that the runner cannot access the host 192.168.0.101, and that nmap's scan will come up dry.

We add a rule to allow access to the router, but reject packets going via TCP or UDP to any other hosts on the network.

+sudo iptables --insert CNI-ADMIN \

+ --destination 192.168.0.1 --jump ACCEPT

+sudo iptables --insert CNI-ADMIN \

+ --destination 192.168.0.0/24 --jump REJECT -p tcp --reject-with tcp-reset

+sudo iptables --insert CNI-ADMIN \

+ --destination 192.168.0.0/24 --jump REJECT -p udp --reject-with icmp-port-unreachable

+You can test the efficacy of these rules by running nmap, mtr, ping and any other probing utilities within a GitHub workflow.

+name: CI

+

+on:

+ pull_request:

+ branches:

+ - '*'

+ push:

+ branches:

+ - master

+ - main

+

+jobs:

+ specs:

+ name: specs

+ runs-on: actuated-4cpu-12gb

+ steps:

+ - uses: actions/checkout@v1

+ - name: addr

+ run: ip addr

+ - name: route

+ run: ip route

+ - name: pkgs

+ run: |

+ sudo apt-get update && \

+ sudo apt-get install traceroute mtr nmap netcat -qy

+ - name: traceroute

+ run: traceroute 192.168.0.101

+ - name: Connect to ssh

+ run: echo | nc 192.168.0.101 22

+ - name: mtr

+ run: mtr -rw -c 1 192.168.0.101

+ - name: nmap for SSH

+ run: nmap -p 22 192.168.0.0/24

+ - name: Ping router

+ run: |

+ ping -c 1 192.168.0.1

+ - name: Ping 101

+ run: |

+ ping -c 1 192.168.0.101


+ Frequently Asked Questions (FAQ)¶

+How does it work?¶

+Actuated has three main parts:

+-

+

- an agent which knows how to run VMs, you install this on your hosts +

- a VM image and Kernel that we build which has everything required for Docker, KinD and K3s +

- a multi-tenant control plane that we host, which tells your agents to start VMs and register a runner on your GitHub organisation +

The multi-tenant control plane is run and operated by OpenFaaS Ltd as a SaaS.

+

++The conceptual overview showing how a MicroVM is requested by the control plane.

+

MicroVMs are only started when needed, and are registered with GitHub by the official GitHub Actions runner, using a short-lived registration token. The token is encrypted with the public key of the agent. This ensures no other agent could use the token to bootstrap a runner into the wrong organisation.

+Learn more: Self-hosted GitHub Actions API

+Glossary¶

+-

+

MicroVM - a lightweight, single-use VM that is created by the Actuated Agent, and is destroyed after the build is complete. Common examples include firecracker by AWS and Cloud Hypervisor

+Guest Kernel - a Linux kernel that is used together with a Root filesystem to boot a MicroVM and run your CI workloads

+Root filesystem - an immutable image maintained by the actuated team containing all necessary software to perform a build

+Actuated ('Control Plane') - a multi-tenant SaaS run by the actuated team responsible for scheduling MicroVMs to the Actuated Agent

+Actuated Agent - the software component installed on your Server which runs a MicroVM when instructed by Actuated

+Actuated Server ('Server') - a server on which the Actuated Agent has been installed, where your builds will execute.

+

How does actuated compare to a self-hosted runner?¶

+A self-hosted runner is a machine on which you've installed and registered a GitHub runner.

+Quite often these machines suffer from some, if not all of the following issues:

+-

+

- They require several hours to get all the required packages correctly installed to mirror a hosted runner +

- You never update them out of fear of wasting time or breaking something which is working, meaning your supply chain is at risk +

- Builds clash: if you're building a container image or running a KinD cluster, names will collide and dirty state will be left over +

We've heard in user interviews that the final point of dirty state can cause engineers to waste several days of effort chasing down problems.

+Actuated uses a one-shot VM that is destroyed immediately after a build is completed.

+Who is actuated for?¶

+actuated is primarily for software engineering teams who are currently using GitHub Actions or GitLab CI.

+-

+

- You can outsource your CI infrastructure to the actuated team +

- You'll get VM-level isolation, with no risks of side-effects between builds +

- You can run on much faster hardware +

- You'll get insights on how to fine-tune the performance of your builds +

- And save a significant amount of money vs. larger hosted runners if you use 10s or 100s of thousands of minutes per month +

For GitHub users, a GitHub organisation is required for installation, and runners are attached to individual repositories as required to execute builds.

+Is there a sponsored subscription for Open Source projects?¶

+We have a sponsored program with the CNCF and Ampere for various Open Source projects, you can find out more here: Announcing managed Arm CI for CNCF projects.

+Sponsored projects are required to add our GitHub badge to the top of their README file for each repository where actuated is being used, along with any other GitHub badges such as build status, code coverage, etc.

+[![Arm CI sponsored by Actuated](https://docs.actuated.dev/images/actuated-badge.png)](https://actuated.dev/)

+or

+<a href="https://actuated.dev/"><img alt="Arm CI sponsored by Actuated" src="https://docs.actuated.dev/images/actuated-badge.png" width="120px"></img></a>

+For an example of what this would look like, see the inletsctl project README.

+What kind of machines do I need for the agent?¶

+You'll need either: a bare-metal host (your own machine, Hetzner Dedicated, Equinix Metal, etc), or a VM that supports nested virtualisation such as those provided by OpenStack, GCP, DigitalOcean, Azure, or VMware.

+See also: Provision a Server section

+When will Jenkins, GitLab CI, BitBucket Pipeline Runners, Drone or Azure DevOps be supported?¶

+Support for GitHub Actions and GitLab CI is available.

+Unfortunately, other CI systems tend to expect runners to be available indefinitely, which is an anti-pattern. Why? They gather side-effects and often rely on the insecure use of Docker in Docker, privileged containers, or mounting the Docker socket.

+If you'd like to migrate to GitHub Actions, or GitLab CI, feel free to reach out to us for help.

+Is GitHub Enterprise supported?¶

+GitHub.com's Pro, Team and Enterprise Cloud plans are supported.

+GitHub Enterprise Server (GHES) is a self-hosted version of GitHub and requires additional onboarding steps. Please reach out to us if you're interested in using actuated with your installation of GHES.

+What kind of access is required to my GitHub Organisation?¶

+GitHub Apps provide fine-grained privileges, access control, and event data.

+Actuated integrates with GitHub using a GitHub App.

+The actuated GitHub App will request:

+-

+

- Administrative access to add/remove GitHub Actions Runners to individual repositories +

- Events via webhook for Workflow Runs and Workflow Jobs +

Did you know? The actuated service does not have any access to your code or private or public repositories.

+Can GitHub's self-hosted runner be used on public repos?¶

+Actuated VMs can be used with public repositories, however the standard self-hosted runner when used stand-alone, with Docker, or with Kubernetes cannot.

+The GitHub team recommends only running their self-hosted runners on private repositories.

+Why?

+I took some time to ask one of the engineers on the GitHub Actions team.

+++With the standard self-hosted runner, a bad actor could compromise the system or install malware leaving side-effects for future builds.

+

He replied that it's difficult for maintainers to secure their repos and workflows, and that bad actors could compromise a runner host due to the way they run multiple jobs, and are not a fresh environment for each build. It may even be because a bad actor could scan the local network of the runner and attempt to gain access to other systems.

+If you're wondering whether containers and Pods are a suitable isolation level, we would recommend against this since it usually involves one of either: mounting a docker socket (which can lead to escalation to root on the host) or running Docker In Docker (DIND) which requires a privileged container (which can lead to escalation to root on the host).

+So, can you use actuated on a public repo?

+Our contact at GitHub stated that through VM-level isolation and an immutable VM image, the primary concern is resolved, because there is no way to have state left over or side effects from previous builds.

+Actuated fixes the isolation problem, and prevents side-effects between builds. We also have specific iptables rules in the troubleshooting guide which will isolate your runners from the rest of the network.

+Can I use the containers feature of GitHub Actions?¶

+Yes, it is supported, however it is not required, and may make it harder to debug your builds. We prefer and recommend running on the host directly, which gives better performance and a simpler experience. Common software and packages are already within the root filesystem, and can be added with setup-X actions, or arkade get or arkade system install.

GitHub Actions' "Running jobs in a container" feature is supported, as are Docker, Buildx, Kubernetes, KinD, K3s, eBPF, etc.

+Example of running commands with the docker.io/node:latest image.

jobs:

+ specs:

+ name: test

+ runs-on: actuated-4cpu-12gb

+ container:

+ image: docker.io/node:latest

+ env:

+ NODE_ENV: development

+ ports:

+ - 3000

+ options: --cpus 1

+ steps:

+ - name: Check Node.js version

+ run: node --version

+How many builds does a single actuated VM run?¶

+When a VM starts up, it runs the GitHub Actions Runner in ephemeral (aka one-shot) mode, so it can run at most one build. After that, the VM will be destroyed.

+See also: GitHub: ephemeral runners

+How are VMs scheduled?¶

+VMs are placed efficiently across your Actuated Servers using a scheduling algorithm based upon the amount of RAM reserved for the VM.

+Autoscaling of VMs is automatic. Let's say that you had 10 jobs pending, but given the RAM configuration, only enough capacity to run 8 of them. The remaining two would be queued until one or more of those 8 jobs completed.
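To make the arithmetic concrete, here is a rough sketch of RAM-based capacity. It is not actuated's actual scheduling algorithm: the function name `jobs_that_fit` is hypothetical, and the figures (two servers with 64 GB free, jobs reserving 16 GB each) are invented for the example.

```bash
# Illustrative only: estimate how many jobs can start at once when placement
# is limited by RAM. This is NOT actuated's real scheduler.
# usage: jobs_that_fit <job_ram_gb> <free_ram_gb_server1> [<free_ram_gb_server2> ...]
jobs_that_fit() {
  local job_ram="$1"
  shift
  local total=0 free
  for free in "$@"; do
    # Whole jobs per server; a single job cannot span two servers.
    total=$(( total + free / job_ram ))
  done
  echo "$total"
}

pending=10
running=$(jobs_that_fit 16 64 64)
queued=$(( pending > running ? pending - running : 0 ))
echo "running=${running} queued=${queued}"   # prints: running=8 queued=2
```

Re-running the estimate with a smaller per-job reservation, or with a larger server, shows how each of those changes affects the queue.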

+If you find yourself regularly getting into a queued state, there are three potential changes to consider:

+-

+

- Using Actuated Servers with more RAM +

- Allocating less RAM to each job +

- Adding more Actuated Servers +

The plan you select will determine how many Actuated Servers you can run, so consider the first two options before the third.

+Do I need to auto-scale the Actuated Servers?¶

+Please read the section "How are VMs scheduled".

+Auto-scaling Pods or VMs is a quick, painless operation that makes sense for customer traffic, which is generally unpredictable and can be very bursty.

+GitHub Actions tends to be driven by your internal development team, with a predictable pattern of activity. It's unlikely to vary massively day by day, which means autoscaling is less important than with a user-facing website.

+In addition to that, bare-metal servers can take 5-10 minutes to provision and may even include a setup fee or monthly commitment, meaning that what you're used to seeing with Kubernetes or AWS Autoscaling Groups may not translate well, or even be required for CI.

+If you are cost sensitive, you should review the options under Provision a Server section.

+Depending on your provider, you may also be able to hibernate or suspend servers on a cron schedule to save a few dollars. Actuated will hold jobs in a queue until a server is ready to take them again.

+What do I need to change in my workflows to use actuated?¶

+The changes to your workflow YAML file are minimal.

+Just set runs-on to the actuated label plus the number of vCPUs and the amount of RAM you'd like. The order is fixed, but the values for vCPU/RAM are flexible and can be set as required.

You can set something like: runs-on: actuated-4cpu-16gb or runs-on: actuated-arm64-8cpu-32gb.
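The fixed ordering of the label can be illustrated with a small parser. This is a sketch based only on the two example labels above: the function name `parse_label` is hypothetical, and treating a label without an architecture segment as x86_64 is an assumption.

```bash
# Hypothetical helper: split an actuated runs-on label into its parts.
# Assumes the form actuated[-arm64]-<N>cpu-<M>gb, as in the examples above.
parse_label() {
  local label="$1"
  local re='^actuated(-arm64)?-([0-9]+)cpu-([0-9]+)gb$'
  if [[ "$label" =~ $re ]]; then
    local arch="x86_64"   # assumption: no arch segment means Intel/AMD
    if [[ -n "${BASH_REMATCH[1]:-}" ]]; then
      arch="arm64"
    fi
    echo "arch=${arch} cpus=${BASH_REMATCH[2]} ram_gb=${BASH_REMATCH[3]}"
  else
    echo "unrecognised label: ${label}" >&2
    return 1
  fi
}

parse_label actuated-arm64-8cpu-32gb   # prints: arch=arm64 cpus=8 ram_gb=32
```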

Is 64-bit Arm supported?¶

+Yes, actuated is built to run on both Intel/AMD and 64-bit Arm hosts, check your subscription plan to see if 64-bit Arm is included. This includes a Raspberry Pi 4B, AWS Graviton, Oracle Cloud Arm instances and potentially any other 64-bit Arm instances which support virtualisation.

+What's in the VM image and how is it built?¶

+The VM image contains similar software to the hosted runner image: ubuntu-latest offered by GitHub. Unfortunately, GitHub does not publish this image, so we've done our best through user-testing to reconstruct it, including all the Kernel modules required to run Kubernetes and Docker.

The image is built automatically using GitHub Actions and is available on a container registry.

+The primary guest OS version is Ubuntu 22.04. Ubuntu 20.04 is available on request.

+What Kernel version is being used?¶

+The Firecracker team provides guest configurations. These may not be LTS, or the latest version available, however they are fully functional for CI/CD use-cases and are known to work with Firecracker.

+Stable Kernel version:

+-

+

- x86_64 - Linux Kernel 5.10.201 +

- aarch64 - Linux Kernel 5.10.201 +

Experimental Kernel version:

+-

+

- aarch64 - Linux Kernel 6.1.90 +

Where are the Kernel headers / includes?¶

+Warning

+The following command is only designed for off the shelf cloud image builds of Ubuntu server, and will not work on actuated.

+apt-get install linux-headers-$(uname -r)

+For actuated, you'll need to take a different approach to build a DKMS or kmod module for your Kernel.

+Add self-actuated/get-kernel-sources to your workflow and run it before your build step.

+ - name: Install Kernel headers

+ uses: self-actuated/get-kernel-sources@v1

+An if statement can be added to the block, if you also run the same job on various other types of runners outside of actuated.

Where is the Kernel configuration?¶

+You can run a job to print out or dump the configuration from proc, or from /boot/.

+Just create a new job, or an SSH debug session and run:

+sudo modprobe configs

+cat /proc/config.gz | gunzip > /tmp/config

+

+# Look for a specific config option

+cat /tmp/config | grep "CONFIG_DEBUG_INFO_BTF"
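If a job needs to fail early when an option is missing, the grep above can be wrapped in a function. This is a minimal sketch: the name `kernel_option_enabled` is hypothetical, and it assumes the config has already been dumped to a file as shown above.

```bash
# Hypothetical helper: report whether a Kernel option is enabled in a dumped
# config file (e.g. /tmp/config from the commands above).
# Returns 0 when the option is set to y (built in) or m (module), 1 otherwise.
kernel_option_enabled() {
  local option="$1" file="${2:-/tmp/config}"
  grep -Eq "^${option}=(y|m)$" "$file"
}

# Example:
# kernel_option_enabled CONFIG_DEBUG_INFO_BTF || { echo "BTF not enabled" >&2; exit 1; }
```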

+How easy is it to debug a runner?¶

+OpenSSH is pre-installed, but it will be inaccessible from your workstation by default.

+To connect, you can use an inlets tunnel, Wireguard VPN or Tailscale ephemeral token (remember: Tailscale is not free for your commercial use) to log into any agent.

+We also offer a SSH gateway in some of our tiers, tell us if this is important to you in your initial contact, or reach out to us via email if you're already a customer.

+See also: Debug a GitHub Action with SSH

+How can an actuated runner get IAM permissions for AWS?¶

+If you need to publish images to Amazon Elastic Container Registry (ECR), you can either assign a role to any EC2 bare-metal instances that you're using with actuated, or use GitHub's built-in OpenID Connect support.

+Web Identity Federation means that a job can assume a role within AWS using Secure Token Service (STS) without needing any long-lived credentials.

+Read more: Configuring OpenID Connect in Amazon Web Services

+Comparison to other solutions¶

+Feel free to book a call with us if you'd like to understand this comparison in more detail.

| Solution | Isolated VM | Speed | Efficient spread of jobs | Safely build public repos? | 64-bit Arm support | Maintenance required | Cost |
|---|---|---|---|---|---|---|---|
| Hosted runners | | Poor | | | None | Free minutes in plan (2) | Per build minute |
| actuated | | Bare-metal | | | Yes | Very little | Fixed monthly cost |
| Standard self-hosted runners | | Good | | | DIY | Manual setup and updates | OSS plus management costs |
| actions-runner-controller | | Varies (1) | | | DIY | Very involved | OSS plus management costs |

(1) actions-runner-controller requires use of separate build tools such as Kaniko, which break the developer experience of using docker or docker-compose. If Docker in Docker (DinD) is used, then there is a severe performance penalty and security risk.

(2) Builds on public GitHub repositories are free with the standard hosted runners, however private repositories require billing information after the initial included minutes are consumed.

You can only get VM-level isolation from either GitHub hosted runners or Actuated. Standard self-hosted runners have no isolation between builds, and actions-runner-controller requires either a Docker socket to be mounted or Docker In Docker (a privileged container) to build and run containers.

+How does actuated compare to actions-runner-controller (ARC)?¶

+actions-runner-controller (ARC) describes itself as "still in its early stage of development". It was created by an individual developer called Yusuke Kuoka, and now receives updates from GitHub's team, after having been adopted into the actions GitHub Organisation.

+Its primary use-case is to scale GitHub's self-hosted actions runner using Pods in a Kubernetes cluster. ARC is self-hosted software, which means its setup and operation are complex, requiring you to create and properly configure a GitHub App along with its keys. For actuated, you only need to run a single binary on each of your runner hosts and send us an encrypted bootstrap token.

+If you're running npm install or maven, then this may be a suitable isolation boundary for you.

The default mode for ARC is a reusable runner, which can run many jobs, and each job could leave side-effects or poison the runner for future job runs.

+If you need to build a container, doing so in a container on a Kubernetes node offers little isolation or security boundary.

+What if ARC is configured to use "rootless" containers? With a rootless container, you lose access to "root" and sudo, both of which are essential in any kind of CI job. Actuated users get full access to root, and can run docker build without any tricks or losing access to sudo. That's the same experience you get from a hosted runner by GitHub, but it's faster because it's on your own hardware.

You can even run minikube, KinD, K3s and OpenShift with actuated without any changes.

+ARC runs a container, so that should work on any machine with a modern Kernel, however actuated runs a VM, in order to provide proper isolation.

+That means ARC runners can run pretty much anywhere, but actuated runners need to be on a bare-metal machine, or a VM that supports nested virtualisation.

+See also: Where can I run my agents?

+Doesn't Kaniko fix all this for ARC?¶

+Kaniko, by Google, is an open source project for building containers. It's usually run as a container itself, and will usually require root privileges in order to mount the various filesystem layers required.

+See also: Root user inside a container is root on the host

If you're an ARC user and, for various reasons, cannot migrate to a more secure solution like actuated, Kaniko may be a step in the right direction. Google Cloud users could also create a dedicated node pool with gVisor enabled, for some additional isolation.

+However, it can only build containers, and still requires root, and itself is often run in Docker, so we're getting back to the same problems that actuated set out to solve.

In addition, Kaniko cannot and will not help you to run the container that you've just built, whether to validate it or to run end-to-end tests. Neither can it run a KinD cluster or a Minikube cluster.

Do we need to run our Actuated Servers 24/7?¶

+Let's say that you wanted to access a single 64-bit Arm runner to speed up your Arm builds from 33 minutes to < 2 minutes like in this example.

+The two cheapest options for 64-bit Arm hardware would be:

- Buy a Mac Mini M1 and host it in your office or a co-lo with Asahi Linux installed. That's a one-time cost and will last for several years.

- Or you could rent an a1.metal instance from AWS by the hour, with very little up front cost, and pay for the time you use it.

In both cases, we're not talking about a significant amount of money, however we are sometimes asked about whether Actuated Servers need to be running 24/7.

The answer is that it's a trade-off between cost and convenience. We recommend running them continually, however you can turn them off when you're not using them if you think it is worth your time to do so.

If you only needed to run Arm builds from 9am to 5pm, you could absolutely delete the VM and re-create it with a cron job. Just make sure you restore the required files from the original registration of the agent. You may also be able to "suspend" or "hibernate" the host at a reduced cost, depending on the hosting provider. Feel free to reach out to us if you need help with this.
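As a rough illustration of the 9am to 5pm idea, the sketch below decides whether a server should be running at a given moment. The function name, the weekday-only rule and the default window are assumptions made for this example; they are not part of actuated, and creating or deleting the VM itself would still be handled by your hosting provider's tooling.

```python
from datetime import datetime, time


def should_be_running(
    now: datetime,
    start: time = time(9, 0),
    stop: time = time(17, 0),
) -> bool:
    """Return True on weekdays between start (inclusive) and stop (exclusive).

    The weekday-only rule is an assumption for this sketch.
    """
    is_weekday = now.weekday() < 5  # Monday is 0, Friday is 4
    return is_weekday and start <= now.time() < stop


if __name__ == "__main__":
    # A cron job could run this every few minutes and act on the result.
    print("run" if should_be_running(datetime.now()) else "stop")
```

A script like this could be called from cron and its result used to decide whether to create or delete the host.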

+Is there GPU support?¶

+Yes, both for GitHub and GitLab CI.

+See also: Accelerate GitHub Actions with dedicated GPUs

+Can Virtual Machines be launched within a GitHub Action?¶

+It is possible to launch a Virtual Machine (VM) with KVM from within a Firecracker MicroVM.

+Use-cases may include: building and snapshotting VM images, running Packer, launching VirtualBox and Vagrant, accelerating the Android emulator, building packages for NixOS and other testing which requires KVM.

+It's disabled by default, but you can opt-in to the feature by following the steps in this article:

+How to run a KVM guest in your GitHub Actions

+At time of writing, only Intel and AMD CPUs support nested virtualisation.

+What about Arm? According to our contacts at Ampere, the latest versions of Arm hardware have some support for nested virtualisation, but the patches for the Linux Kernel are not ready.

+Can I use a VM for an actuated server instead of bare-metal?¶

+If /dev/kvm is available within the VM, or the VM can be configured so that nested virtualisation is available, then you can use a VM as an actuated server. Any VMs that are launched for CI jobs will be launched with nested virtualisation, and will have some additional overheads compared to a bare-metal server.

See also: Provision a server
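Before provisioning, a quick pre-check for /dev/kvm inside a VM can be sketched as below. This is a minimal illustration and not the check the actuated agent performs; the function name `kvm_available` is made up for this example.

```python
import os


def kvm_available(device: str = "/dev/kvm") -> bool:
    """Return True if the KVM device exists and the current user can read and write it."""
    return os.path.exists(device) and os.access(device, os.R_OK | os.W_OK)


if __name__ == "__main__":
    if kvm_available():
        print("/dev/kvm is present and accessible")
    else:
        print("/dev/kvm is missing or not accessible; check whether nested virtualisation is enabled")
```

If the device is missing, nested virtualisation is probably not enabled for the VM. If it exists but is not accessible, the current user may lack permission to open it.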

+Is Windows or MacOS supported?¶

Linux is the only supported platform for actuated at this time, on an AMD64 or 64-bit Arm architecture. We may consider other operating systems in the future, so feel free to reach out to us.

+Is Actuated free and open-source?¶

+Actuated currently uses the Firecracker project to launch MicroVMs to isolate jobs during CI. Firecracker is an open source Virtual Machine Manager used by Amazon Web Services (AWS) to run serverless-style workloads for AWS Lambda.

+Actuated is a commercial B2B product and service created and operated by OpenFaaS Ltd.

+Read the End User License Agreement (EULA)

+The website and documentation are available on GitHub and we plan to release some open source tools in the future to improve customer experience.

+Is there a risk that we could get "locked-in" to actuated?¶

+No, you can move back to either hosted runners (pay per minute from GitHub) or self-managed self-hosted runners at any time. Bear in mind that actuated solves painful issues with both hosted runners and self-managed self-hosted runners.

+Why is the brand called "actuated" and "selfactuated"?¶

+The name of the software, product and brand is: "actuated". In some places "actuated" is not available, and we liked "selfactuated" more than "actuatedhq" or "actuatedio" because it refers to the hybrid experience of self-hosted runners.

+Privacy policy & data security¶

+Actuated is a managed service operated by OpenFaaS Ltd, registered company number: 11076587.

+It has both a Software as a Service (SaaS) component ("control plane") aka ("Actuated") and an agent ("Actuated Agent"), which runs on a Server supplied by the customer ("Customer Server").

+Data storage¶

+The control-plane of actuated collects and stores:

- Job events for the organisation where a label of "actuated*" is found, including:

    - Organisation name

    - Repository name

    - Actor name for each job

    - Build name

    - Build start / stop time

    - Build status

The following is collected from agents:

- Agent version

- Hostname & uptime

- Platform information - Operating System and architecture

- System capacity - total and available RAM & CPU

In addition, for support requests, we may need to collect the logs of the actuated agent process remotely from:

- VMs launched for jobs, stored at /var/log/actuated/

This information is required to operate the control plane including scheduling of VMs and for technical support.

+Upon cancelling a subscription, a customer may request that their data is deleted. In addition, they can uninstall the GitHub App from their organisation, and deactivate the GitHub OAuth application used to authenticate to the Actuated Dashboard.

+Data security & encryption¶

TLS is enabled on the actuated control plane, the dashboard and on each agent. The TLS certificates have not expired and have no known issues.

+Each customer is responsible for hosting their own Servers and installing appropriate firewalls or access control.

+Each Customer Server requires a unique token which is encrypted using public key cryptography, before being shared with OpenFaaS Ltd. This token is used to authenticate the agent to the control plane.

+Traffic between the control plane and Customer Server is only made over HTTPS, using TLS encryption and API tokens. In addition, the token required for GitHub Actions is double encrypted with an RSA key pair, so that only the intended agent can decrypt and use it. These tokens are short-lived and expire after 59 minutes.
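The 59-minute lifetime can be illustrated with a small expiry check. This sketch only shows the arithmetic; how actuated tracks token age internally is not described here, and the names `TOKEN_LIFETIME` and `token_expired` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Lifetime stated in the text above; the constant name is an assumption.
TOKEN_LIFETIME = timedelta(minutes=59)


def token_expired(issued_at: datetime, now: datetime) -> bool:
    """Return True once the token has reached or passed its 59-minute lifetime."""
    return now - issued_at >= TOKEN_LIFETIME


if __name__ == "__main__":
    issued = datetime.now(timezone.utc) - timedelta(minutes=30)
    print(token_expired(issued, datetime.now(timezone.utc)))
```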

+Event data recorded from GitHub Actions is stored and used to deliver quality of service and scheduling. This data is stored on a server managed by DigitalOcean LLC in the United Kingdom. The control plane is hosted with Linode LLC in the United Kingdom.

+No data is shared with third parties.

+Software Development Life Cycle¶

- A Version Control System (VCS) is being used - GitHub is used by all employees to store code

- Only Authorized Employees Access Version Control - multi-factor authentication (MFA) is required for all employees

- Only Authorized Employees Change Code - no changes can be pushed to production without having a pull request approval from senior management

- Production Code Changes Restricted - only authorized employees can push or make changes to production code

- All changes are documented through pull requests, tickets and commit messages

- Vulnerability management - vulnerability management is provided by GitHub.com. Critical vulnerabilities are remediated in a timely manner