diff --git a/.github/workflows/llm_unit_tests.yml b/.github/workflows/llm_unit_tests.yml

index 0590531a372..583cbf2c31e 100644

--- a/.github/workflows/llm_unit_tests.yml

+++ b/.github/workflows/llm_unit_tests.yml

@@ -375,7 +375,7 @@ jobs:

shell: bash

run: |

python -m pip uninstall datasets -y

- python -m pip install transformers==4.34.0 datasets peft==0.5.0 accelerate==0.23.0

+ python -m pip install transformers==4.36.0 datasets peft==0.10.0 accelerate==0.23.0

python -m pip install bitsandbytes scipy

# Specific oneapi position on arc ut test machines

if [[ '${{ matrix.pytorch-version }}' == '2.1' ]]; then

@@ -422,4 +422,4 @@ jobs:

if: ${{ always() }}

shell: bash

run: |

- pip uninstall sentence-transformers -y || true

\ No newline at end of file

+ pip uninstall sentence-transformers -y || true

diff --git a/.github/workflows/manually_build_for_testing.yml b/.github/workflows/manually_build_for_testing.yml

index 5150bada7b5..00eeb7a9d58 100644

--- a/.github/workflows/manually_build_for_testing.yml

+++ b/.github/workflows/manually_build_for_testing.yml

@@ -18,6 +18,7 @@ on:

- ipex-llm-finetune-qlora-cpu

- ipex-llm-finetune-qlora-xpu

- ipex-llm-xpu

+ - ipex-llm-cpp-xpu

- ipex-llm-cpu

- ipex-llm-serving-xpu

- ipex-llm-serving-cpu

@@ -150,6 +151,35 @@ jobs:

sudo docker push 10.239.45.10/arda/${image}:${TAG}

sudo docker rmi -f ${image}:${TAG} 10.239.45.10/arda/${image}:${TAG}

+ ipex-llm-cpp-xpu:

+ if: ${{ github.event.inputs.artifact == 'ipex-llm-cpp-xpu' || github.event.inputs.artifact == 'all' }}

+ runs-on: [self-hosted, Shire]

+

+ steps:

+ - uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

+ with:

+ ref: ${{ github.event.inputs.sha }}

+ - name: docker login

+ run: |

+ docker login -u ${DOCKERHUB_USERNAME} -p ${DOCKERHUB_PASSWORD}

+ - name: ipex-llm-cpp-xpu

+ run: |

+ echo "##############################################################"

+ echo "####### ipex-llm-cpp-xpu ########"

+ echo "##############################################################"

+ export image=intelanalytics/ipex-llm-cpp-xpu

+ cd docker/llm/cpp/

+ sudo docker build \

+ --no-cache=true \

+ --build-arg http_proxy=${HTTP_PROXY} \

+ --build-arg https_proxy=${HTTPS_PROXY} \

+ --build-arg no_proxy=${NO_PROXY} \

+ -t ${image}:${TAG} -f ./Dockerfile .

+ sudo docker push ${image}:${TAG}

+ sudo docker tag ${image}:${TAG} 10.239.45.10/arda/${image}:${TAG}

+ sudo docker push 10.239.45.10/arda/${image}:${TAG}

+ sudo docker rmi -f ${image}:${TAG} 10.239.45.10/arda/${image}:${TAG}

+

ipex-llm-cpu:

if: ${{ github.event.inputs.artifact == 'ipex-llm-cpu' || github.event.inputs.artifact == 'all' }}

runs-on: [self-hosted, Shire]

diff --git a/README.md b/README.md

index 61eef9117e0..ab44c7a3edd 100644

--- a/README.md

+++ b/README.md

@@ -183,6 +183,8 @@ Over 50 models have been optimized/verified on `ipex-llm`, including *LLaMA/LLaM

| DeciLM-7B | [link](python/llm/example/CPU/HF-Transformers-AutoModels/Model/deciLM-7b) | [link](python/llm/example/GPU/HF-Transformers-AutoModels/Model/deciLM-7b) |

| Deepseek | [link](python/llm/example/CPU/HF-Transformers-AutoModels/Model/deepseek) | [link](python/llm/example/GPU/HF-Transformers-AutoModels/Model/deepseek) |

| StableLM | [link](python/llm/example/CPU/HF-Transformers-AutoModels/Model/stablelm) | [link](python/llm/example/GPU/HF-Transformers-AutoModels/Model/stablelm) |

+| CodeGemma | [link](python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma) | [link](python/llm/example/GPU/HF-Transformers-AutoModels/Model/codegemma) |

+| Command-R/cohere | [link](python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere) | [link](python/llm/example/GPU/HF-Transformers-AutoModels/Model/cohere) |

## Get Support

- Please report a bug or raise a feature request by opening a [Github Issue](https://github.com/intel-analytics/ipex-llm/issues)

diff --git a/docker/llm/README.md b/docker/llm/README.md

index 47a2fac7f7a..52cc64c6fa7 100644

--- a/docker/llm/README.md

+++ b/docker/llm/README.md

@@ -28,7 +28,7 @@ This guide provides step-by-step instructions for installing and using IPEX-LLM

### 1. Prepare ipex-llm-cpu Docker Image

-Run the following command to pull image from dockerhub:

+Run the following command to pull image:

```bash

docker pull intelanalytics/ipex-llm-cpu:2.1.0-SNAPSHOT

```

diff --git a/docker/llm/finetune/qlora/cpu/docker/Dockerfile b/docker/llm/finetune/qlora/cpu/docker/Dockerfile

index 15b96d6c371..553c4d3ac7a 100644

--- a/docker/llm/finetune/qlora/cpu/docker/Dockerfile

+++ b/docker/llm/finetune/qlora/cpu/docker/Dockerfile

@@ -20,7 +20,8 @@ RUN echo "deb [signed-by=/usr/share/keyrings/intel-oneapi-archive-keyring.gpg] h

RUN mkdir -p /ipex_llm/data && mkdir -p /ipex_llm/model && \

# Install python 3.11.1

- apt-get update && apt-get install -y curl wget gpg gpg-agent software-properties-common git gcc g++ make libunwind8-dev zlib1g-dev libssl-dev libffi-dev && \

+ apt-get update && \

+ apt-get install -y curl wget gpg gpg-agent software-properties-common git gcc g++ make libunwind8-dev libbz2-dev zlib1g-dev libssl-dev libffi-dev && \

mkdir -p /opt/python && \

cd /opt/python && \

wget https://www.python.org/ftp/python/3.11.1/Python-3.11.1.tar.xz && \

@@ -39,15 +40,16 @@ RUN mkdir -p /ipex_llm/data && mkdir -p /ipex_llm/model && \

rm -rf /var/lib/apt/lists/* && \

pip install --upgrade pip && \

export PIP_DEFAULT_TIMEOUT=100 && \

- pip install --upgrade torch==2.1.0 && \

+ # install torch CPU version

+ pip install --upgrade torch==2.1.0 --index-url https://download.pytorch.org/whl/cpu && \

# install CPU ipex-llm

pip install --pre --upgrade ipex-llm[all] && \

# install ipex and oneccl

pip install https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/cpu/intel_extension_for_pytorch-2.1.0%2Bcpu-cp311-cp311-linux_x86_64.whl && \

pip install oneccl_bind_pt -f https://developer.intel.com/ipex-whl-stable && \

# install huggingface dependencies

- pip install datasets transformers==4.35.0 && \

- pip install fire peft==0.5.0 && \

+ pip install datasets transformers==4.36.0 && \

+ pip install fire peft==0.10.0 && \

pip install accelerate==0.23.0 && \

pip install bitsandbytes && \

# get qlora example code

diff --git a/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s b/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s

index 7757a23162a..469a1bbf631 100644

--- a/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s

+++ b/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s

@@ -61,8 +61,8 @@ RUN mkdir -p /ipex_llm/data && mkdir -p /ipex_llm/model && \

pip install intel_extension_for_pytorch==2.0.100 && \

pip install oneccl_bind_pt -f https://developer.intel.com/ipex-whl-stable && \

# install huggingface dependencies

- pip install datasets transformers==4.35.0 && \

- pip install fire peft==0.5.0 && \

+ pip install datasets transformers==4.36.0 && \

+ pip install fire peft==0.10.0 && \

pip install accelerate==0.23.0 && \

# install basic dependencies

apt-get update && apt-get install -y curl wget gpg gpg-agent && \

diff --git a/docker/llm/finetune/qlora/xpu/docker/Dockerfile b/docker/llm/finetune/qlora/xpu/docker/Dockerfile

index e53d4ec9394..8123b057381 100644

--- a/docker/llm/finetune/qlora/xpu/docker/Dockerfile

+++ b/docker/llm/finetune/qlora/xpu/docker/Dockerfile

@@ -3,7 +3,7 @@ ARG http_proxy

ARG https_proxy

ENV TZ=Asia/Shanghai

ARG PIP_NO_CACHE_DIR=false

-ENV TRANSFORMERS_COMMIT_ID=95fe0f5

+ENV TRANSFORMERS_COMMIT_ID=1466677

# retrive oneapi repo public key

RUN curl -fsSL https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS-2023.PUB | gpg --dearmor | tee /usr/share/keyrings/intel-oneapi-archive-keyring.gpg && \

@@ -33,7 +33,7 @@ RUN curl -fsSL https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-P

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/ && \

# install huggingface dependencies

pip install git+https://github.com/huggingface/transformers.git@${TRANSFORMERS_COMMIT_ID} && \

- pip install peft==0.5.0 datasets accelerate==0.23.0 && \

+ pip install peft==0.6.0 datasets accelerate==0.23.0 && \

pip install bitsandbytes scipy && \

git clone https://github.com/intel-analytics/IPEX-LLM.git && \

mv IPEX-LLM/python/llm/example/GPU/LLM-Finetuning/common /common && \

diff --git a/docs/readthedocs/source/_templates/sidebar_quicklinks.html b/docs/readthedocs/source/_templates/sidebar_quicklinks.html

index d821185ab70..b720c4f7e49 100644

--- a/docs/readthedocs/source/_templates/sidebar_quicklinks.html

+++ b/docs/readthedocs/source/_templates/sidebar_quicklinks.html

@@ -37,6 +37,9 @@

Run Coding Copilot (Continue) in VSCode with Intel GPU

+

+ Run Dify on Intel GPU

+

Run Open WebUI with IPEX-LLM on Intel GPU

@@ -58,6 +61,13 @@

Finetune LLM with Axolotl on Intel GPU

+

+ Run PrivateGPT with IPEX-LLM on Intel GPU

+

+

+ Run IPEX-LLM serving on Multiple Intel GPUs

+ using DeepSpeed AutoTP and FastApi

+

diff --git a/docs/readthedocs/source/_toc.yml b/docs/readthedocs/source/_toc.yml

index 482210e6442..2e408b1a0e9 100644

--- a/docs/readthedocs/source/_toc.yml

+++ b/docs/readthedocs/source/_toc.yml

@@ -26,13 +26,16 @@ subtrees:

- file: doc/LLM/Quickstart/chatchat_quickstart

- file: doc/LLM/Quickstart/webui_quickstart

- file: doc/LLM/Quickstart/open_webui_with_ollama_quickstart

+ - file: doc/LLM/Quickstart/privateGPT_quickstart

- file: doc/LLM/Quickstart/continue_quickstart

+ - file: doc/LLM/Quickstart/dify_quickstart

- file: doc/LLM/Quickstart/benchmark_quickstart

- file: doc/LLM/Quickstart/llama_cpp_quickstart

- file: doc/LLM/Quickstart/ollama_quickstart

- file: doc/LLM/Quickstart/llama3_llamacpp_ollama_quickstart

- file: doc/LLM/Quickstart/fastchat_quickstart

- file: doc/LLM/Quickstart/axolotl_quickstart

+ - file: doc/LLM/Quickstart/deepspeed_autotp_fastapi_quickstart

- file: doc/LLM/Overview/KeyFeatures/index

title: "Key Features"

subtrees:

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/axolotl_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/axolotl_quickstart.md

index 615f104d3a4..afbdc7c9234 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/axolotl_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/axolotl_quickstart.md

@@ -33,7 +33,7 @@ git clone https://github.com/OpenAccess-AI-Collective/axolotl/tree/v0.4.0

cd axolotl

# replace requirements.txt

remove requirements.txt

-wget -O requirements.txt https://github.com/intel-analytics/ipex-llm/blob/main/python/llm/example/GPU/LLM-Finetuning/axolotl/requirements-xpu.txt

+wget -O requirements.txt https://raw.githubusercontent.com/intel-analytics/ipex-llm/main/python/llm/example/GPU/LLM-Finetuning/axolotl/requirements-xpu.txt

pip install -e .

pip install transformers==4.36.0

# to avoid https://github.com/OpenAccess-AI-Collective/axolotl/issues/1544

@@ -92,7 +92,14 @@ Configure oneAPI variables by running the following command:

```

-Configure accelerate to avoid training with CPU

+Configure accelerate to avoid training with CPU. You can download a default `default_config.yaml` with `use_cpu: false`.

+

+```cmd

+mkdir -p ~/.cache/huggingface/accelerate/

+wget -O ~/.cache/huggingface/accelerate/default_config.yaml https://raw.githubusercontent.com/intel-analytics/ipex-llm/main/python/llm/example/GPU/LLM-Finetuning/axolotl/default_config.yaml

+```

+

+As an alternative, you can config accelerate based on your requirements.

```cmd

accelerate config

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

index 1d465f3ef8f..b43b8cbd3c0 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

@@ -39,17 +39,30 @@ Follow the guide that corresponds to your specific system and device from the li

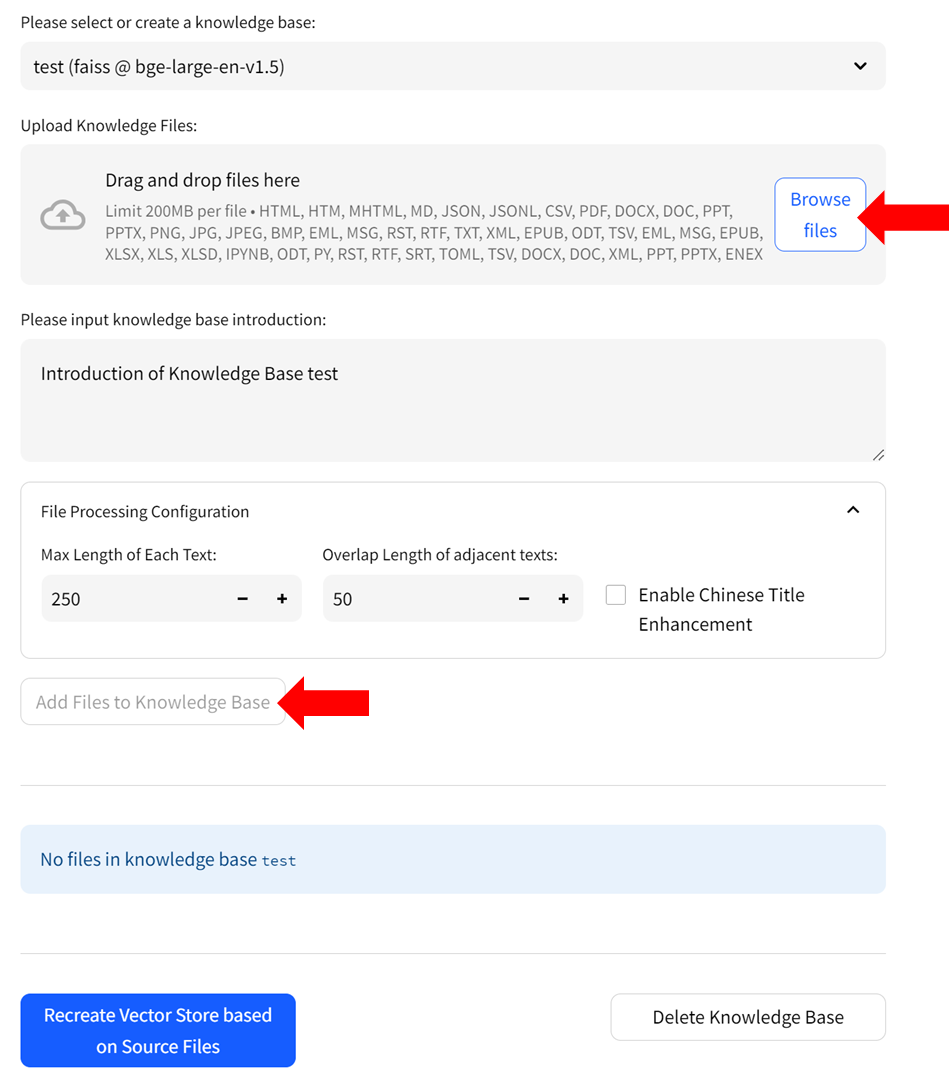

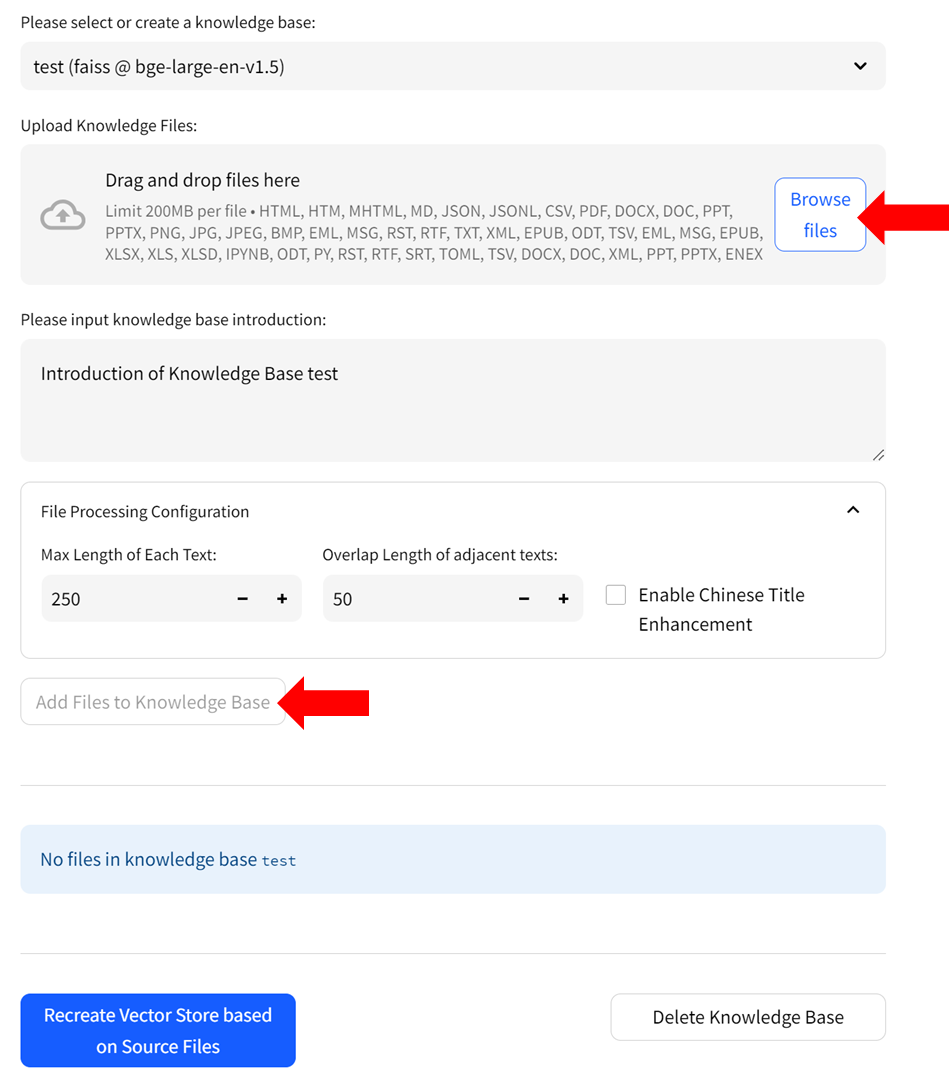

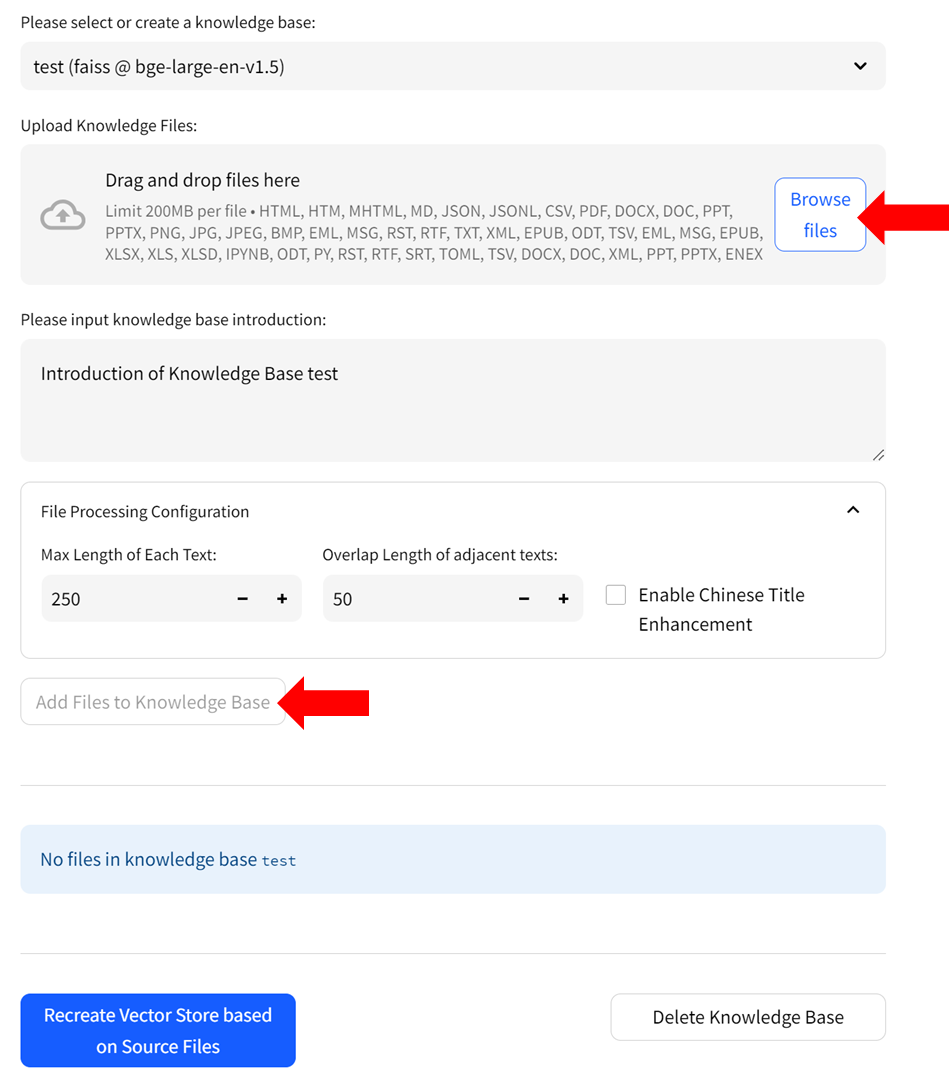

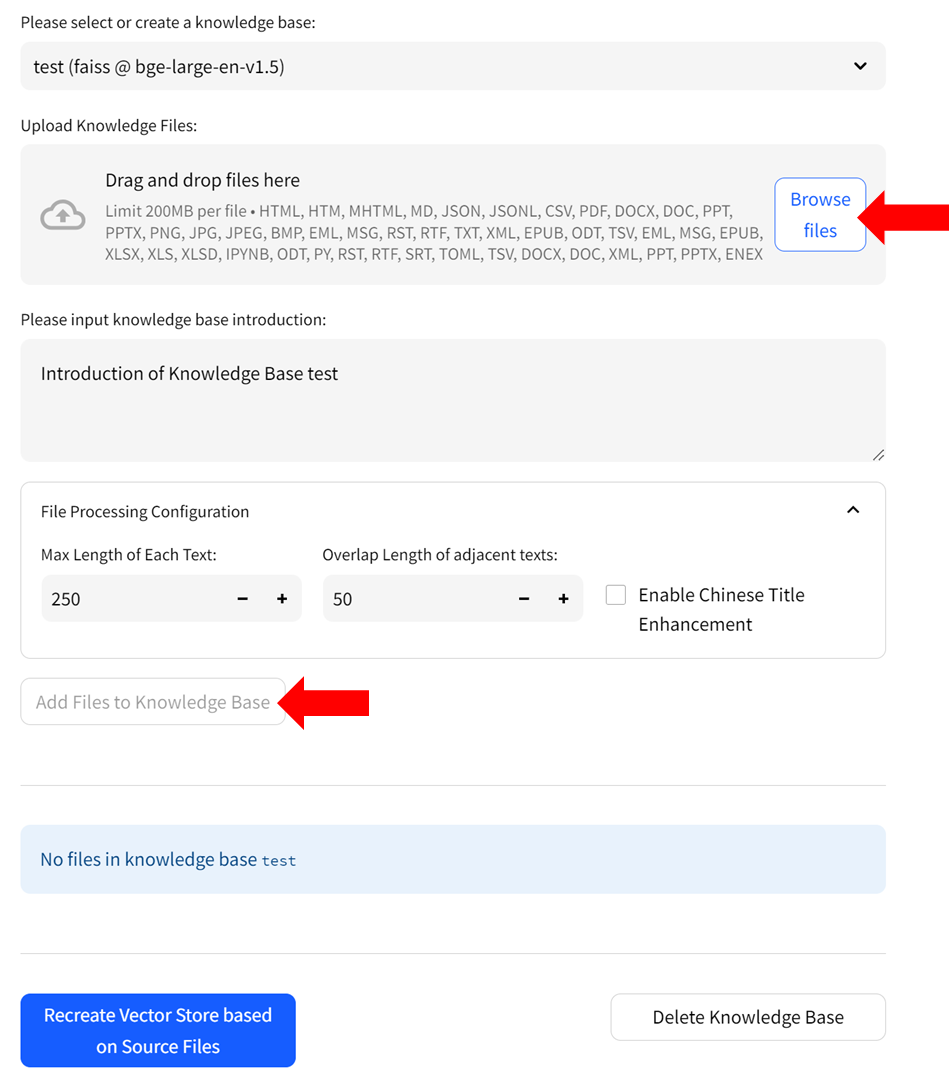

#### Step 1: Create Knowledge Base

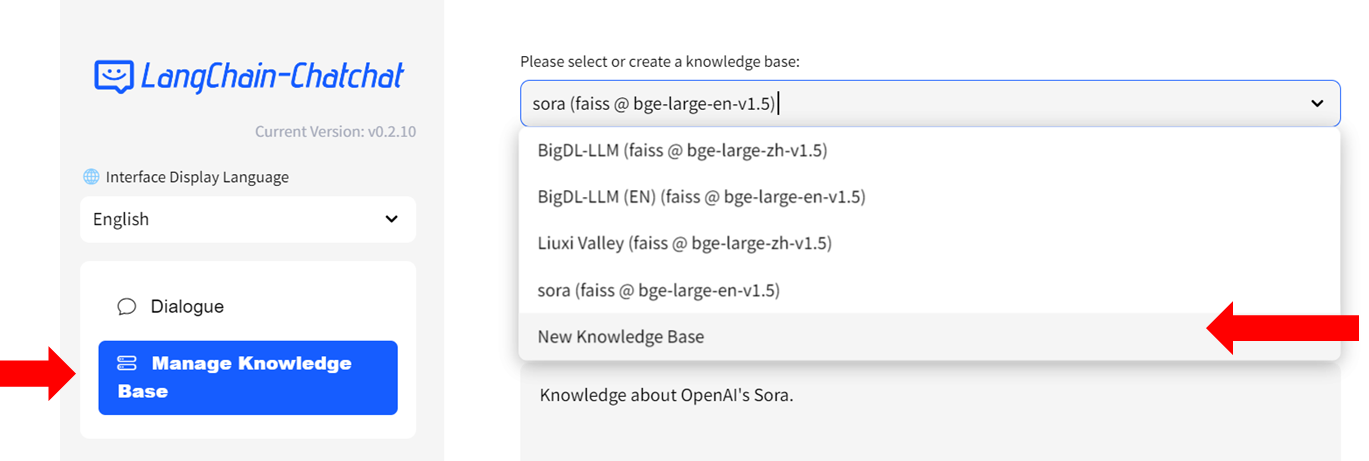

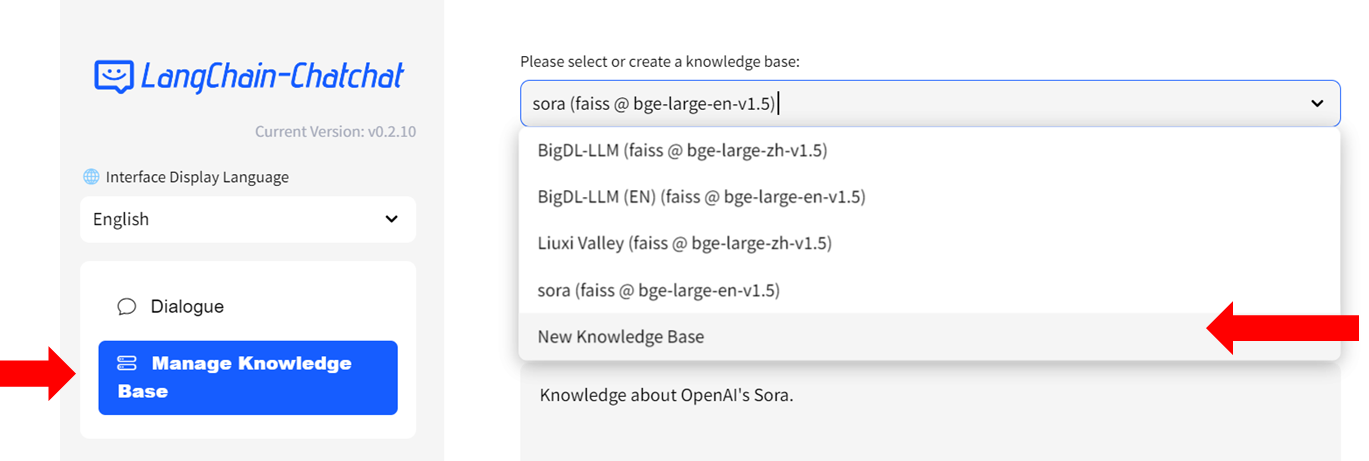

- Select `Manage Knowledge Base` from the menu on the left, then choose `New Knowledge Base` from the dropdown menu on the right side.

-

+

+

+  +

+

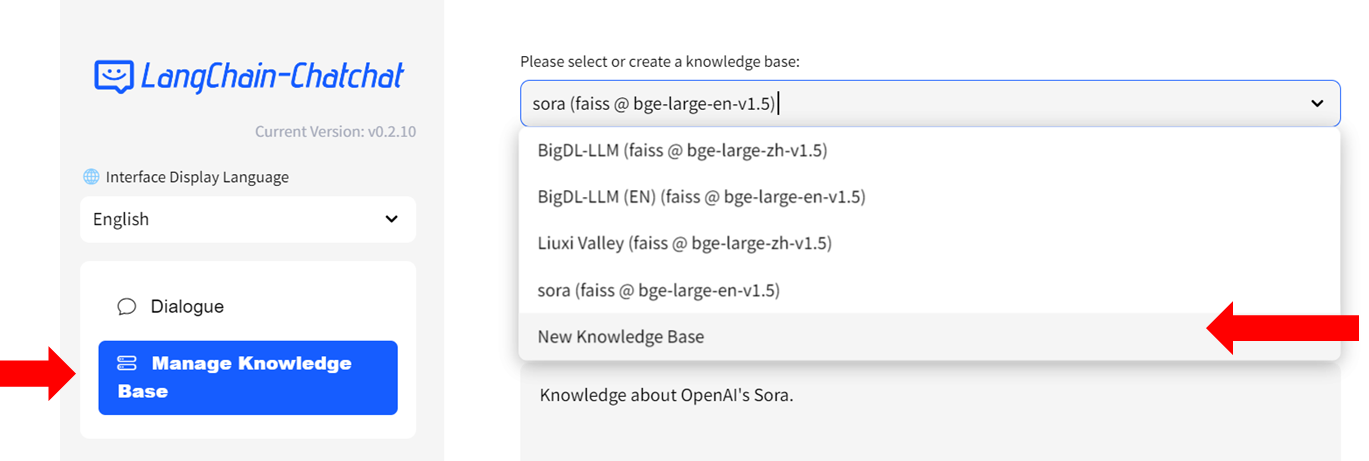

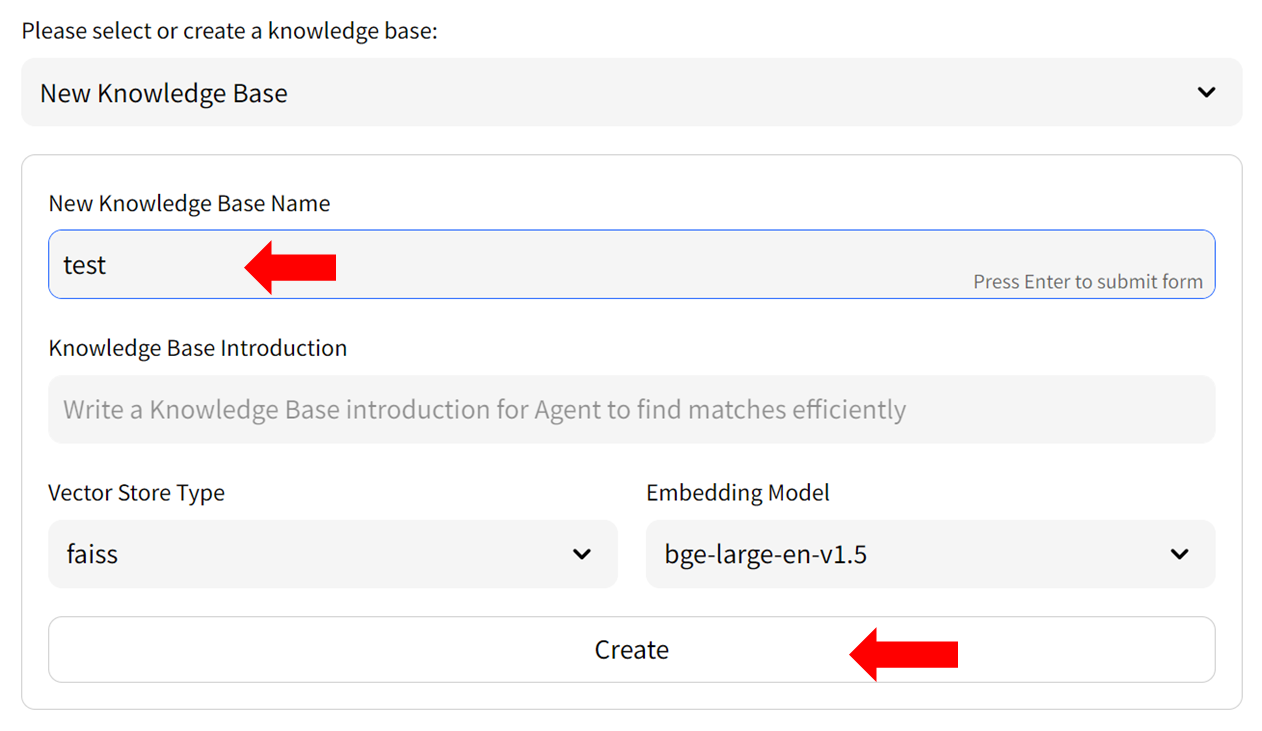

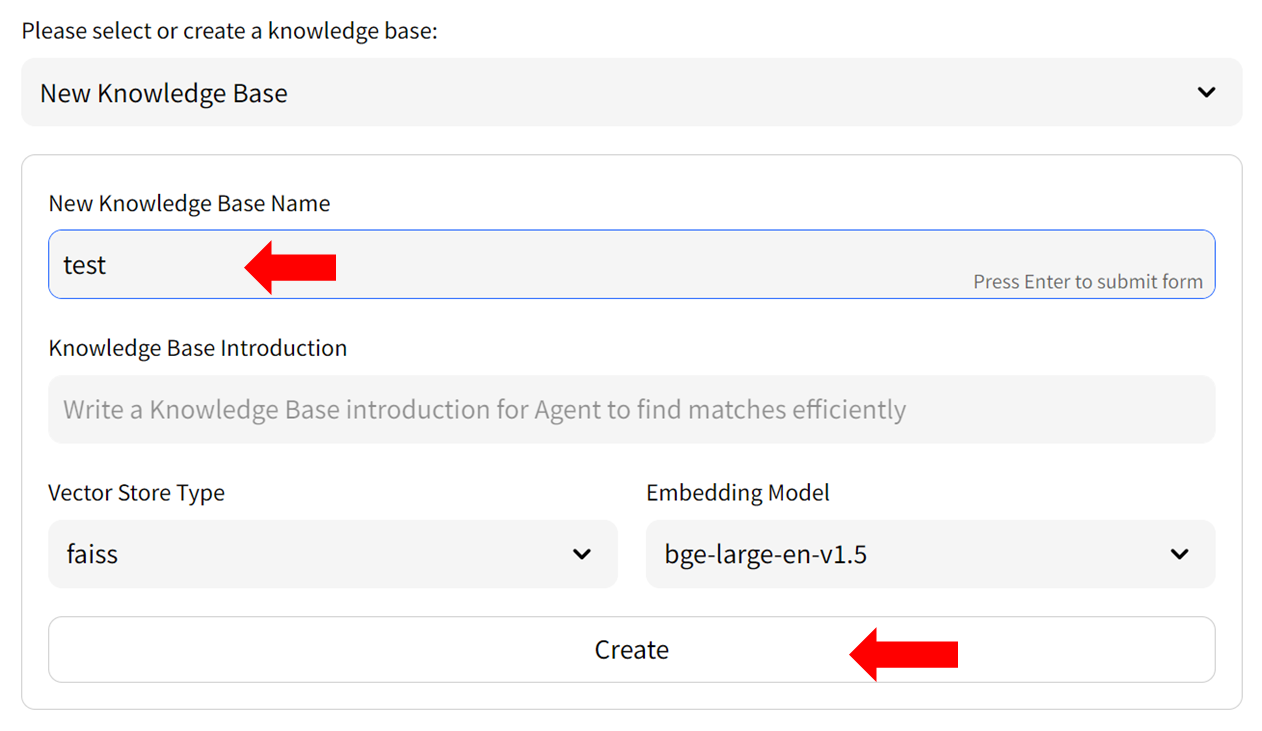

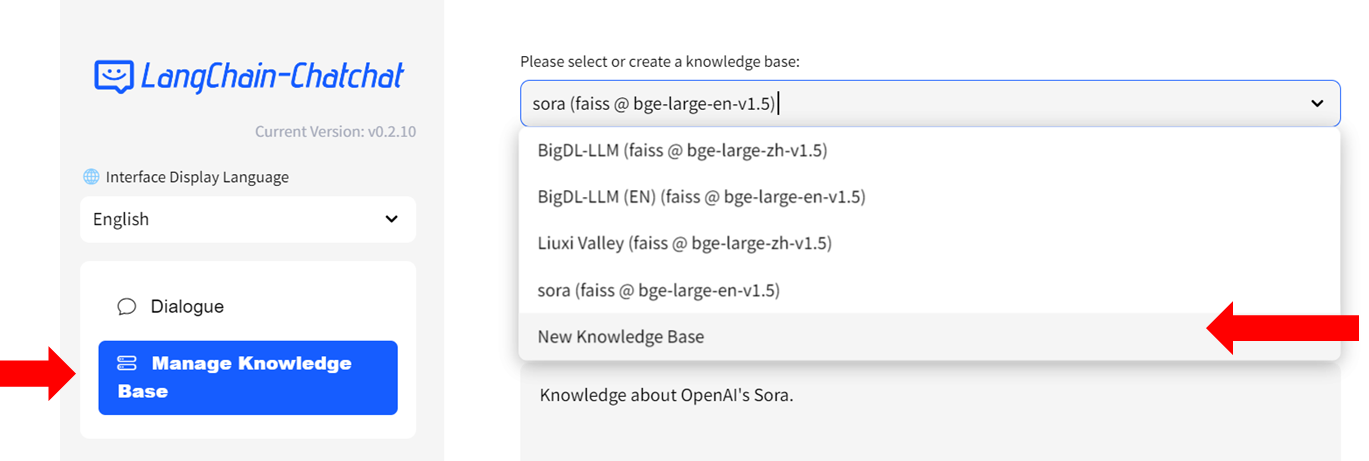

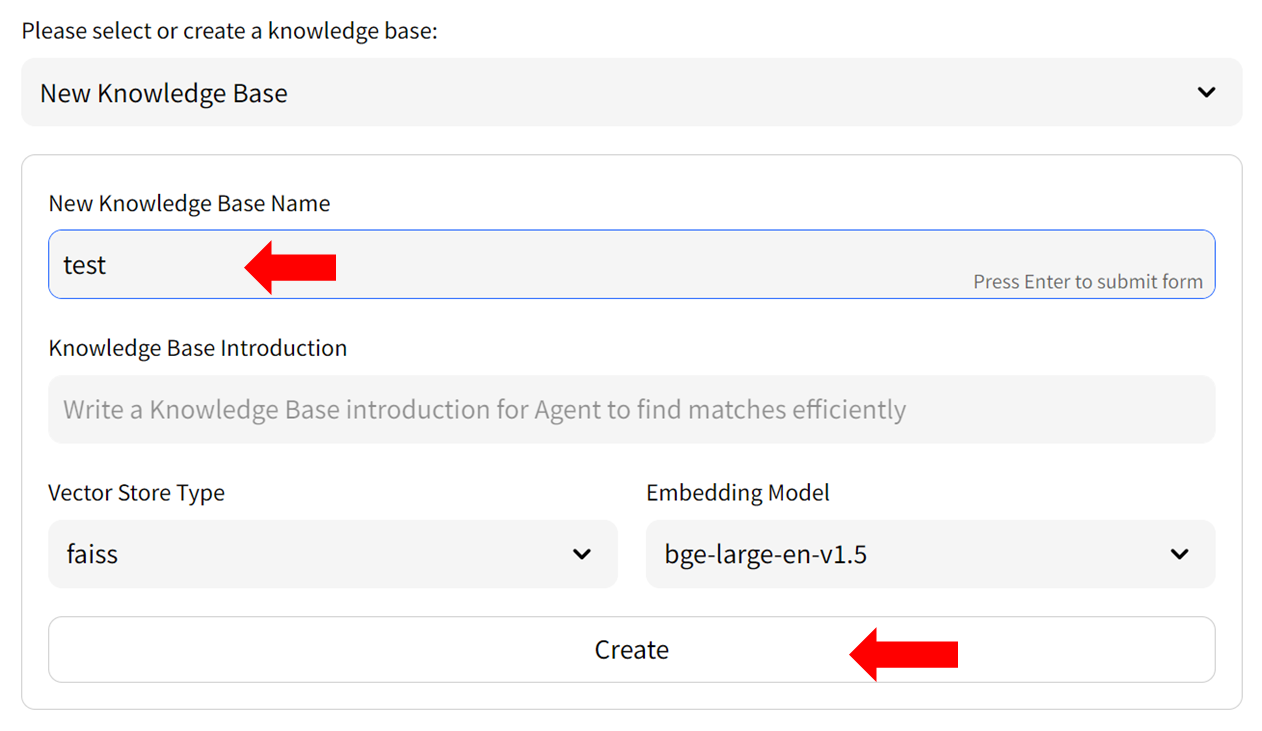

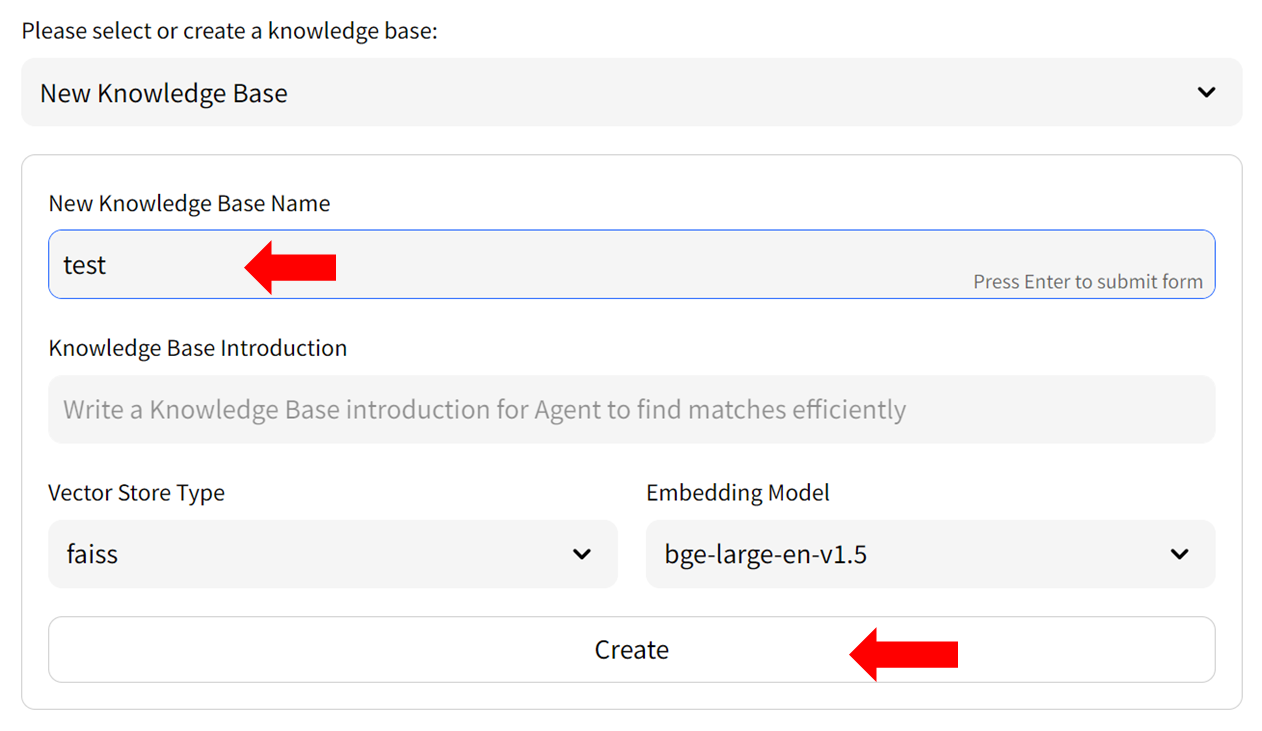

- Fill in the name of your new knowledge base (example: "test") and press the `Create` button. Adjust any other settings as needed.

-

+

+

- Fill in the name of your new knowledge base (example: "test") and press the `Create` button. Adjust any other settings as needed.

-

+

+

+  +

+

- Upload knowledge files from your computer and allow some time for the upload to complete. Once finished, click on `Add files to Knowledge Base` button to build the vector store. Note: this process may take several minutes.

-

+

+

- Upload knowledge files from your computer and allow some time for the upload to complete. Once finished, click on `Add files to Knowledge Base` button to build the vector store. Note: this process may take several minutes.

-

+

+

+  +

#### Step 2: Chat with RAG

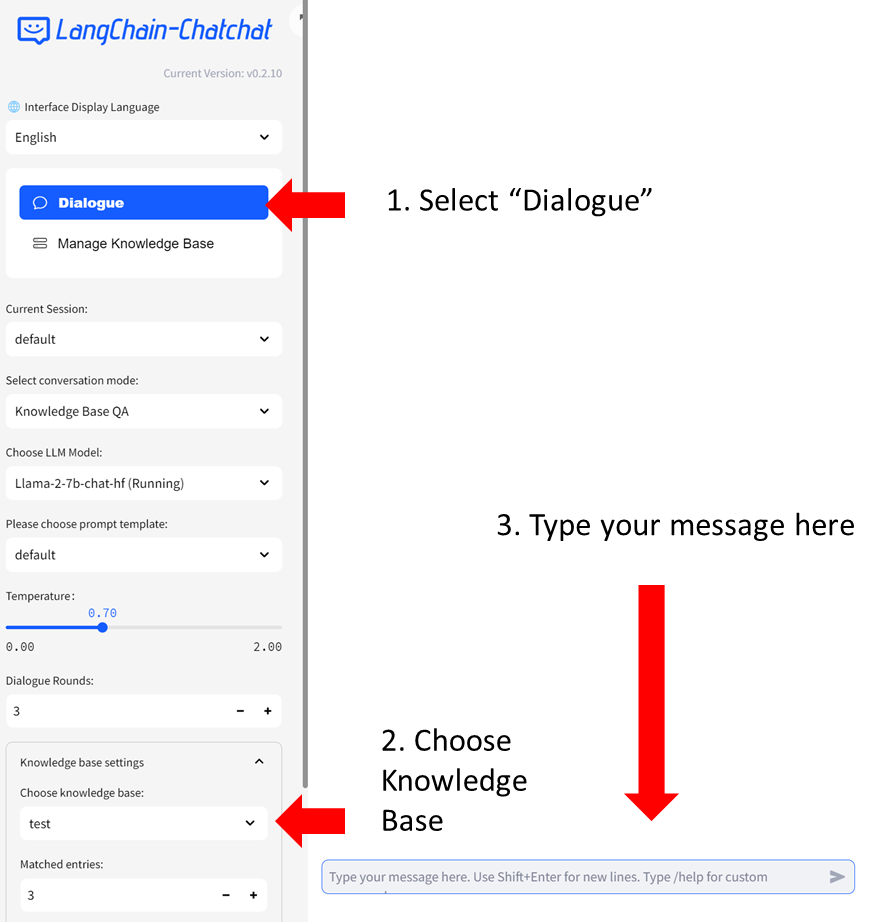

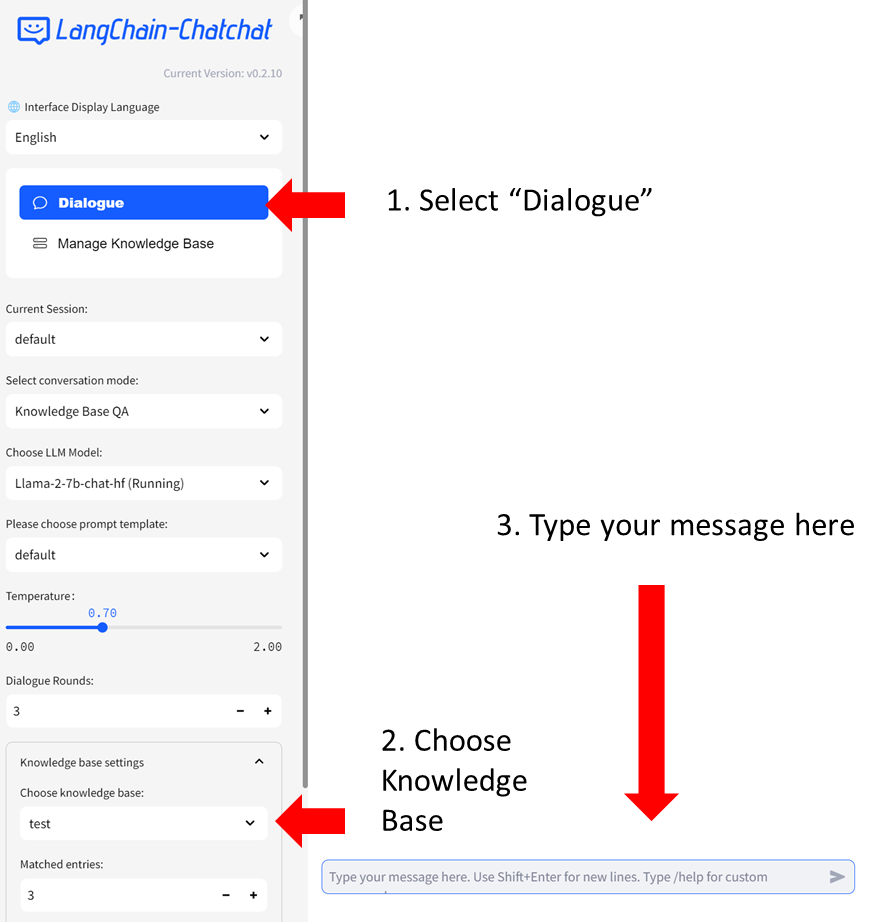

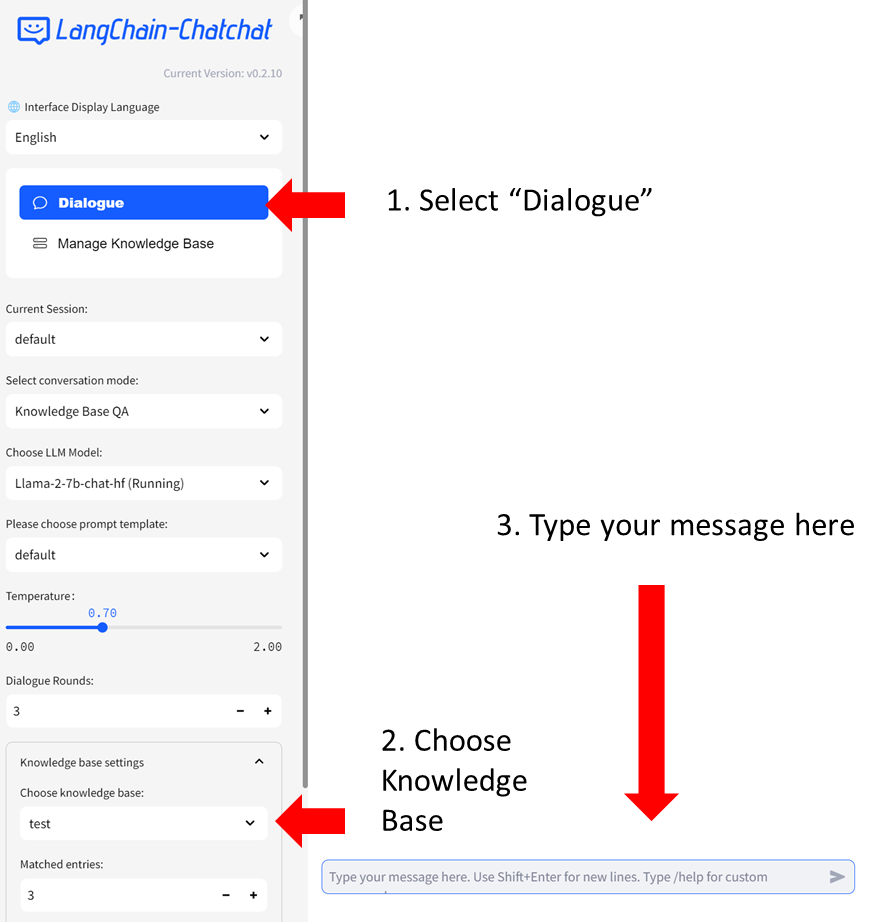

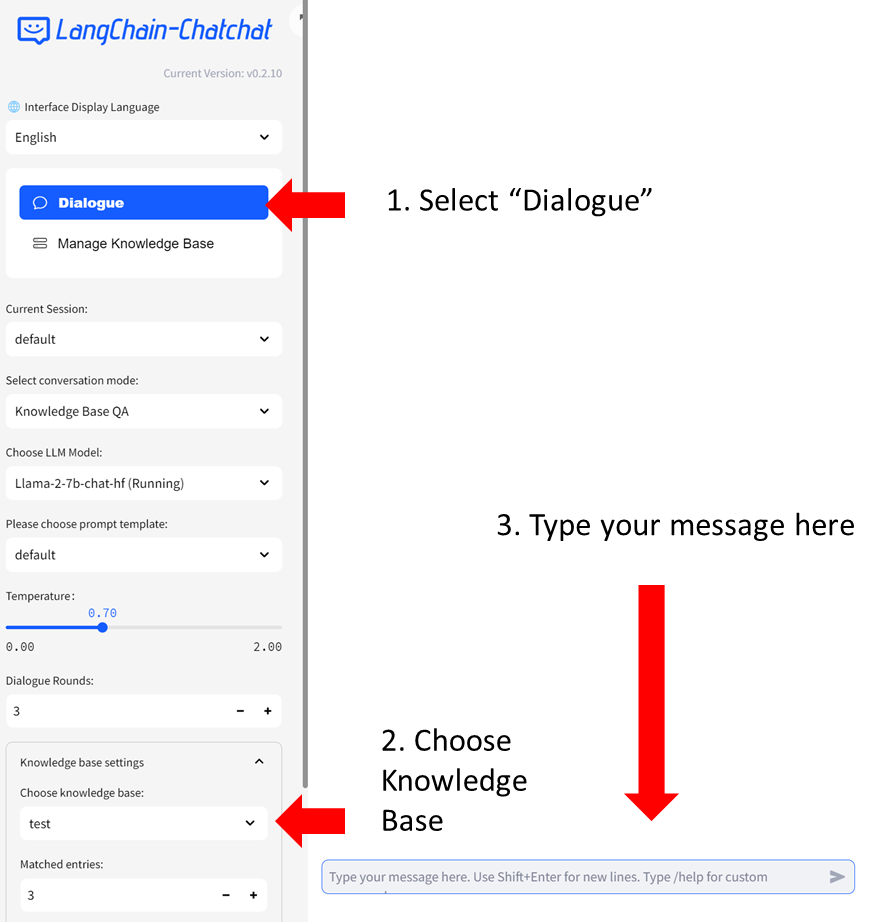

You can now click `Dialogue` on the left-side menu to return to the chat UI. Then in `Knowledge base settings` menu, choose the Knowledge Base you just created, e.g, "test". Now you can start chatting.

-

+

#### Step 2: Chat with RAG

You can now click `Dialogue` on the left-side menu to return to the chat UI. Then in `Knowledge base settings` menu, choose the Knowledge Base you just created, e.g, "test". Now you can start chatting.

-

+

+  +

+

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/deepspeed_autotp_fastapi_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/deepspeed_autotp_fastapi_quickstart.md

new file mode 100644

index 00000000000..f99c673190f

--- /dev/null

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/deepspeed_autotp_fastapi_quickstart.md

@@ -0,0 +1,102 @@

+# Run IPEX-LLM serving on Multiple Intel GPUs using DeepSpeed AutoTP and FastApi

+

+This example demonstrates how to run IPEX-LLM serving on multiple [Intel GPUs](../README.md) by leveraging DeepSpeed AutoTP.

+

+## Requirements

+

+To run this example with IPEX-LLM on Intel GPUs, we have some recommended requirements for your machine, please refer to [here](../README.md#recommended-requirements) for more information. For this particular example, you will need at least two GPUs on your machine.

+

+## Example

+

+### 1. Install

+

+```bash

+conda create -n llm python=3.11

+conda activate llm

+# below command will install intel_extension_for_pytorch==2.1.10+xpu as default

+pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

+pip install oneccl_bind_pt==2.1.100 --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

+# configures OneAPI environment variables

+source /opt/intel/oneapi/setvars.sh

+pip install git+https://github.com/microsoft/DeepSpeed.git@ed8aed5

+pip install git+https://github.com/intel/intel-extension-for-deepspeed.git@0eb734b

+pip install mpi4py fastapi uvicorn

+conda install -c conda-forge -y gperftools=2.10 # to enable tcmalloc

+```

+

+> **Important**: IPEX 2.1.10+xpu requires Intel® oneAPI Base Toolkit's version == 2024.0. Please make sure you have installed the correct version.

+

+### 2. Run tensor parallel inference on multiple GPUs

+

+When we run the model in a distributed manner across two GPUs, the memory consumption of each GPU is only half of what it was originally, and the GPUs can work simultaneously during inference computation.

+

+We provide example usage for `Llama-2-7b-chat-hf` model running on Arc A770

+

+Run Llama-2-7b-chat-hf on two Intel Arc A770:

+

+```bash

+

+# Before run this script, you should adjust the YOUR_REPO_ID_OR_MODEL_PATH in last line

+# If you want to change server port, you can set port parameter in last line

+

+# To avoid GPU OOM, you could adjust --max-num-seqs and --max-num-batched-tokens parameters in below script

+bash run_llama2_7b_chat_hf_arc_2_card.sh

+```

+

+If you successfully run the serving, you can get output like this:

+

+```bash

+[0] INFO: Started server process [120071]

+[0] INFO: Waiting for application startup.

+[0] INFO: Application startup complete.

+[0] INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

+```

+

+> **Note**: You could change `NUM_GPUS` to the number of GPUs you have on your machine. And you could also specify other low bit optimizations through `--low-bit`.

+

+### 3. Sample Input and Output

+

+We can use `curl` to test serving api

+

+```bash

+# Set http_proxy and https_proxy to null to ensure that requests are not forwarded by a proxy.

+export http_proxy=

+export https_proxy=

+

+curl -X 'POST' \

+ 'http://127.0.0.1:8000/generate/' \

+ -H 'accept: application/json' \

+ -H 'Content-Type: application/json' \

+ -d '{

+ "prompt": "What is AI?",

+ "n_predict": 32

+}'

+```

+

+And you should get output like this:

+

+```json

+{

+ "generated_text": "What is AI? Artificial intelligence (AI) refers to the development of computer systems able to perform tasks that would normally require human intelligence, such as visual perception, speech",

+ "generate_time": "0.45149803161621094s"

+}

+

+```

+

+**Important**: The first token latency is much larger than rest token latency, you could use [our benchmark tool](https://github.com/intel-analytics/ipex-llm/blob/main/python/llm/dev/benchmark/README.md) to obtain more details about first and rest token latency.

+

+### 4. Benchmark with wrk

+

+We use wrk for testing end-to-end throughput, check [here](https://github.com/wg/wrk).

+

+You can install by:

+```bash

+sudo apt install wrk

+```

+

+Please change the test url accordingly.

+

+```bash

+# set t/c to the number of concurrencies to test full throughput.

+wrk -t1 -c1 -d5m -s ./wrk_script_1024.lua http://127.0.0.1:8000/generate/ --timeout 1m

+```

\ No newline at end of file

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md

index 7d4af4c83be..f1d444b9132 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md

@@ -1,39 +1,73 @@

# Run Dify on Intel GPU

-We recommend start the project following [Dify docs](https://docs.dify.ai/getting-started/install-self-hosted/local-source-code)

-## Server Deployment

-### Clone code

+

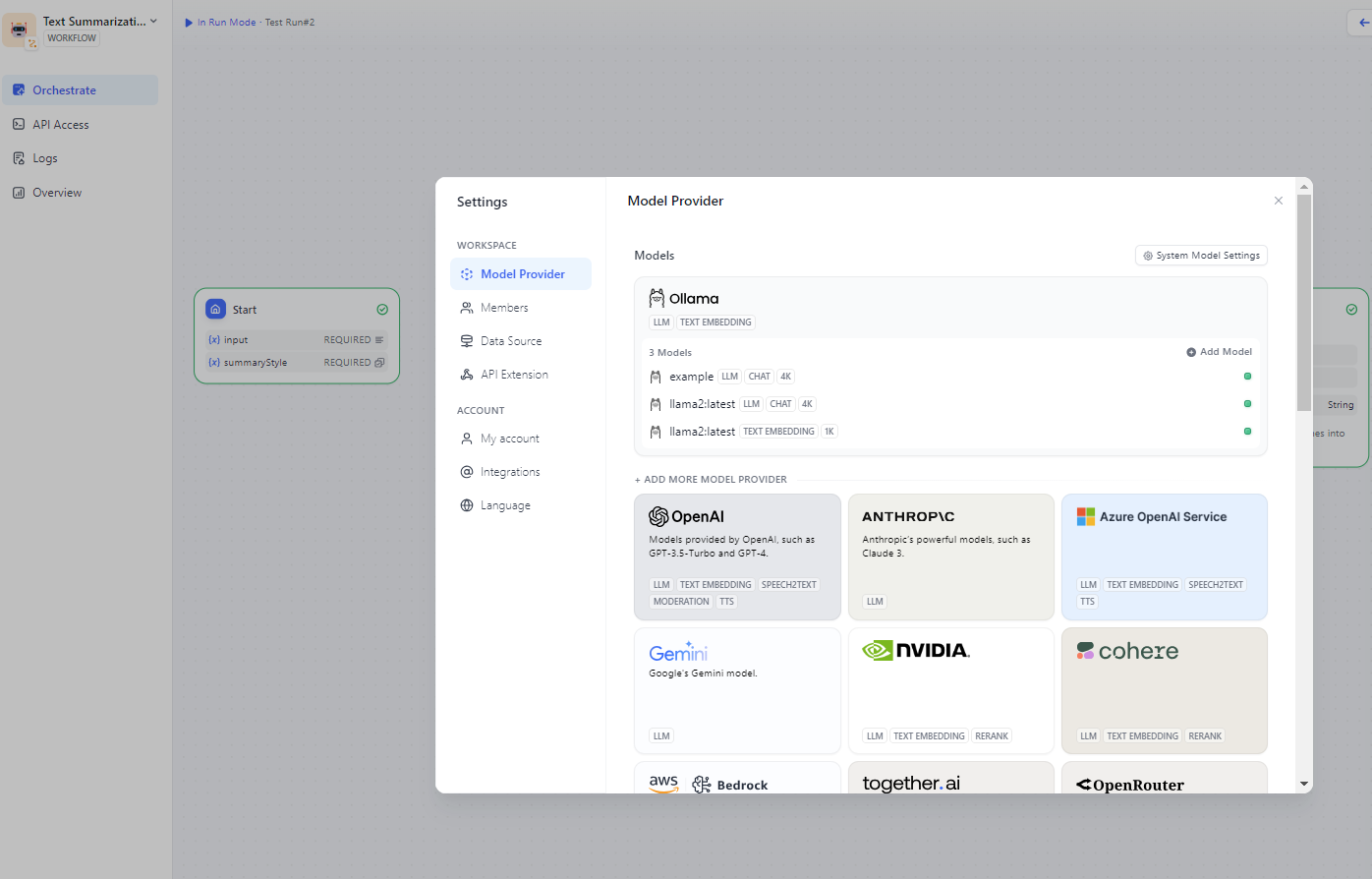

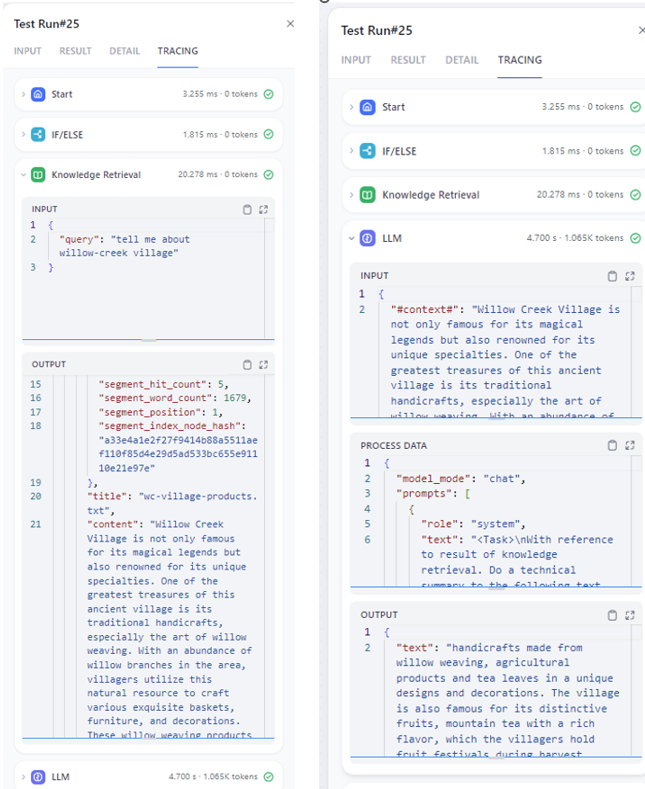

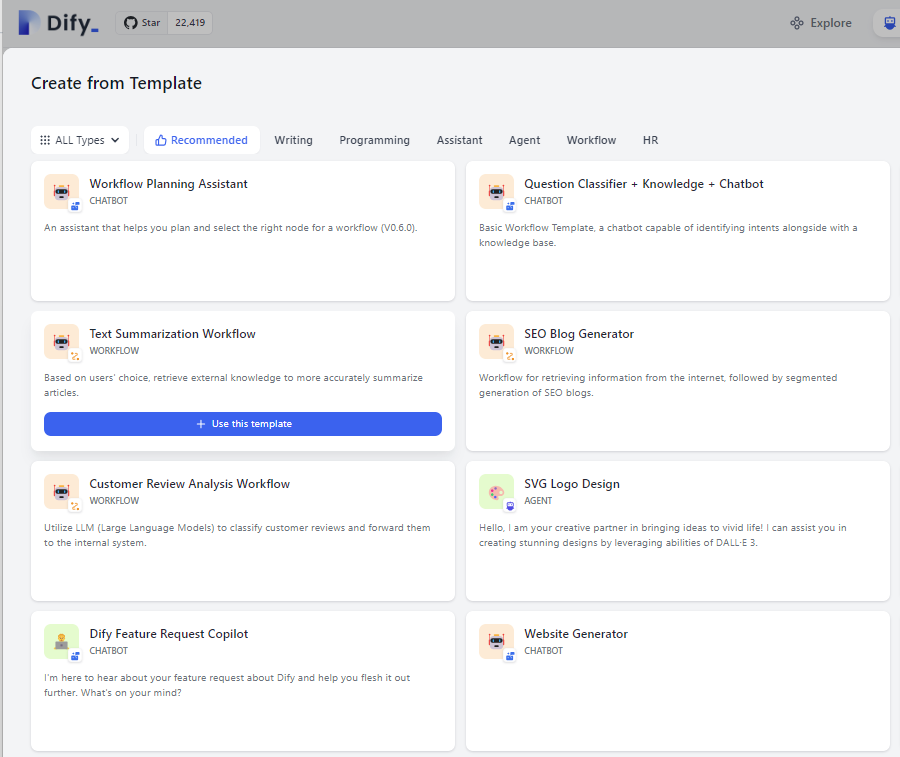

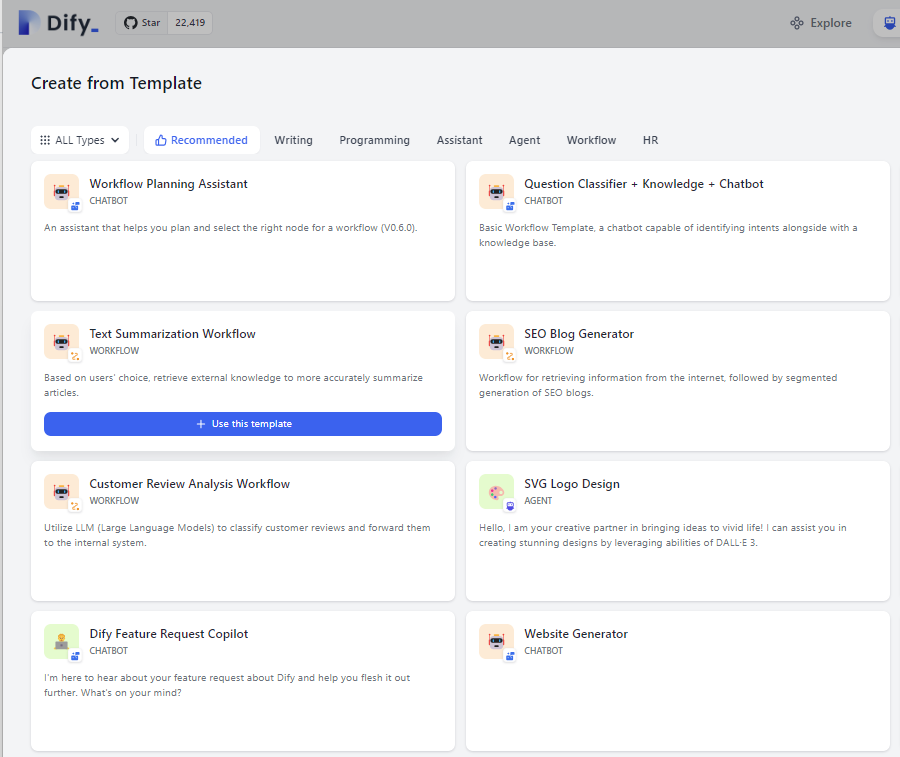

+[**Dify**](https://dify.ai/) is an open-source production-ready LLM app development platform; by integrating it with [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily leverage local LLMs running on Intel GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max) for building complex AI workflows (e.g. RAG).

+

+

+*See the demo of a RAG workflow in Dify running LLaMA2-7B on Intel A770 GPU below.*

+

+

+

+

+## Quickstart

+

+### 1. Install and Start `Ollama` Service on Intel GPU

+

+Follow the steps in [Run Ollama on Intel GPU Guide](./ollama_quickstart.md) to install and run Ollama on Intel GPU. Ensure that `ollama serve` is running correctly and can be accessed through a local URL (e.g., `https://127.0.0.1:11434`) or a remote URL (e.g., `http://your_ip:11434`).

+

+

+

+### 2. Install and Start `Dify`

+

+

+#### 2.1 Download `Dify`

+

+You can either clone the repository or download the source zip from [github](https://github.com/langgenius/dify/archive/refs/heads/main.zip):

```bash

git clone https://github.com/langgenius/dify.git

```

-### Installation of the basic environment

-Server startup requires Python 3.10.x. Anaconda is recommended to create and manage python environment.

-```bash

-conda create -n dify python=3.10

-conda activate dify

-cd api

-cp .env.example .env

-openssl rand -base64 42

-sed -i 's/SECRET_KEY=.*/SECRET_KEY=/' .env

-pip install -r requirements.txt

+

+#### 2.2 Setup Redis and PostgreSQL

+

+Next, deploy PostgreSQL and Redis. You can choose to utilize Docker, following the steps in the [Local Source Code Start Guide](https://docs.dify.ai/getting-started/install-self-hosted/local-source-code#clone-dify), or proceed without Docker using the following instructions:

+

+

+- Install Redis by executing `sudo apt-get install redis-server`. Refer to [this guide](https://www.hostinger.com/tutorials/how-to-install-and-setup-redis-on-ubuntu/) for Redis environment setup, including password configuration and daemon settings.

+

+- Install PostgreSQL by following either [the Official PostgreSQL Tutorial](https://www.postgresql.org/docs/current/tutorial.html) or [a PostgreSQL Quickstart Guide](https://www.digitalocean.com/community/tutorials/how-to-install-postgresql-on-ubuntu-20-04-quickstart). After installation, proceed with the following PostgreSQL commands for setting up Dify. These commands create a username/password for Dify (e.g., `dify_user`, change `'your_password'` as desired), create a new database named `dify`, and grant privileges:

+ ```sql

+ CREATE USER dify_user WITH PASSWORD 'your_password';

+ CREATE DATABASE dify;

+ GRANT ALL PRIVILEGES ON DATABASE dify TO dify_user;

+ ```

+

+Configure Redis and PostgreSQL settings in the `.env` file located under dify source folder `dify/api/`:

+

+```bash dify/api/.env

+### Example dify/api/.env

+## Redis settings

+REDIS_HOST=localhost

+REDIS_PORT=6379

+REDIS_USERNAME=your_redis_user_name # change if needed

+REDIS_PASSWORD=your_redis_password # change if needed

+REDIS_DB=0

+

+## postgreSQL settings

+DB_USERNAME=dify_user # change if needed

+DB_PASSWORD=your_dify_password # change if needed

+DB_HOST=localhost

+DB_PORT=5432

+DB_DATABASE=dify # change if needed

```

-### Prepare for redis, postgres, node and npm.

-* Install Redis by `sudo apt-get install redis-server`. Refer to [page](https://www.hostinger.com/tutorials/how-to-install-and-setup-redis-on-ubuntu/) to setup the Redis environment, including password, demon, etc.

-* install postgres by `sudo apt-get install postgres` and `sudo apt-get install postgres-client`. Setup username, create a database and grant previlidge according to [page](https://www.ruanyifeng.com/blog/2013/12/getting_started_with_postgresql.html)

-* install npm and node by `brew install node@20` according to [nodejs page](https://nodejs.org/en/download/package-manager)

-> Note that set redis and postgres related environment in .env under dify/api/ and set web related environment variable in .env.local under dify/web

-### Install Ollama

-Please install ollama refer to [ollama quick start](./ollama_quickstart.md). Ensure that ollama could run successfully on Intel GPU.

+#### 2.3 Server Deployment

+

+Follow the steps in the [`Server Deployment` section in Local Source Code Start Guide](https://docs.dify.ai/getting-started/install-self-hosted/local-source-code#server-deployment) to deploy and start the Dify Server.

+

+Upon successful deployment, you will see logs in the terminal similar to the following:

+

-### Start service

-1. Open the terminal and set `export no_proxy=localhost,127.0.0.1`

```bash

-flask db upgrade

-flask run --host 0.0.0.0 --port=5001 --debug

-```

-You will see log like below if successfully start the service.

-```

INFO:werkzeug:

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5001

@@ -43,51 +77,70 @@ INFO:werkzeug: * Restarting with stat

WARNING:werkzeug: * Debugger is active!

INFO:werkzeug: * Debugger PIN: 227-697-894

```

-2. Open another terminal and also set `export no_proxy=localhost,127.0.0.1`.

-If Linux system, use the command below.

-```bash

-celery -A app.celery worker -P gevent -c 1 -Q dataset,generation,mail --loglevel INFO

-```

-If windows system, use the command below.

+

+

+#### 2.4 Deploy the frontend page

+

+Refer to the instructions provided in the [`Deploy the frontend page` section in Local Source Code Start Guide](https://docs.dify.ai/getting-started/install-self-hosted/local-source-code#deploy-the-frontend-page) to deploy the frontend page.

+

+Below is an example of environment variable configuration found in `dify/web/.env.local`:

+

+

```bash

-celery -A app.celery worker -P solo --without-gossip --without-mingle -Q dataset,generation,mail --loglevel INFO

+# For production release, change this to PRODUCTION

+NEXT_PUBLIC_DEPLOY_ENV=DEVELOPMENT

+NEXT_PUBLIC_EDITION=SELF_HOSTED

+NEXT_PUBLIC_API_PREFIX=http://localhost:5001/console/api

+NEXT_PUBLIC_PUBLIC_API_PREFIX=http://localhost:5001/api

+NEXT_PUBLIC_SENTRY_DSN=

```

-3. Open another terminal and also set `export no_proxy=localhost,127.0.0.1`. Run the commands below to start the front-end service.

-```bash

-cd web

-npm install

-npm run build

-npm run start

+

+```eval_rst

+

+.. note::

+

+ If you encounter connection problems, you may run `export no_proxy=localhost,127.0.0.1` before starting API servcie, Worker service and frontend.

+

```

-## Example: RAG

-See the demo of running dify with Ollama on an Intel Core Ultra laptop below.

-

+### 3. How to Use `Dify`

+

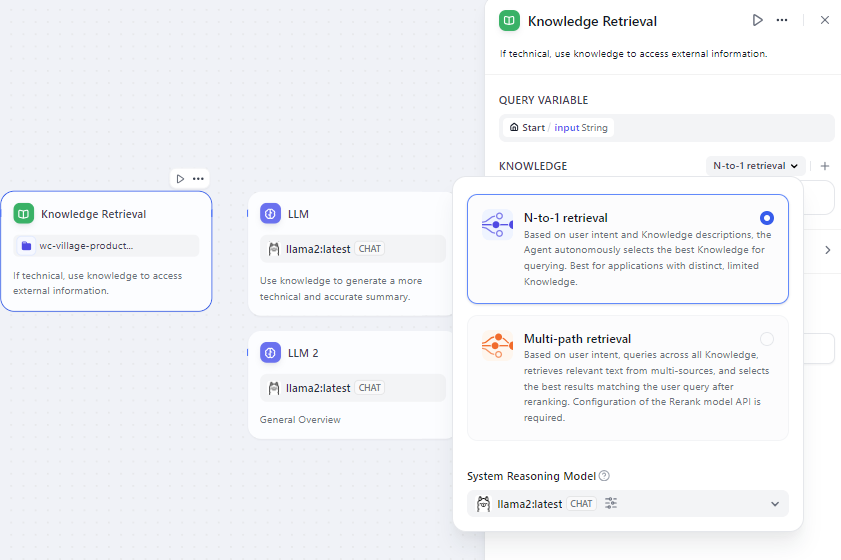

+For comprehensive usage instructions of Dify, please refer to the [Dify Documentation](https://docs.dify.ai/). In this section, we'll only highlight a few key steps for local LLM setup.

+

+

+#### Setup Ollama

+

+Open your browser and access the Dify UI at `http://localhost:3000`.

+

+

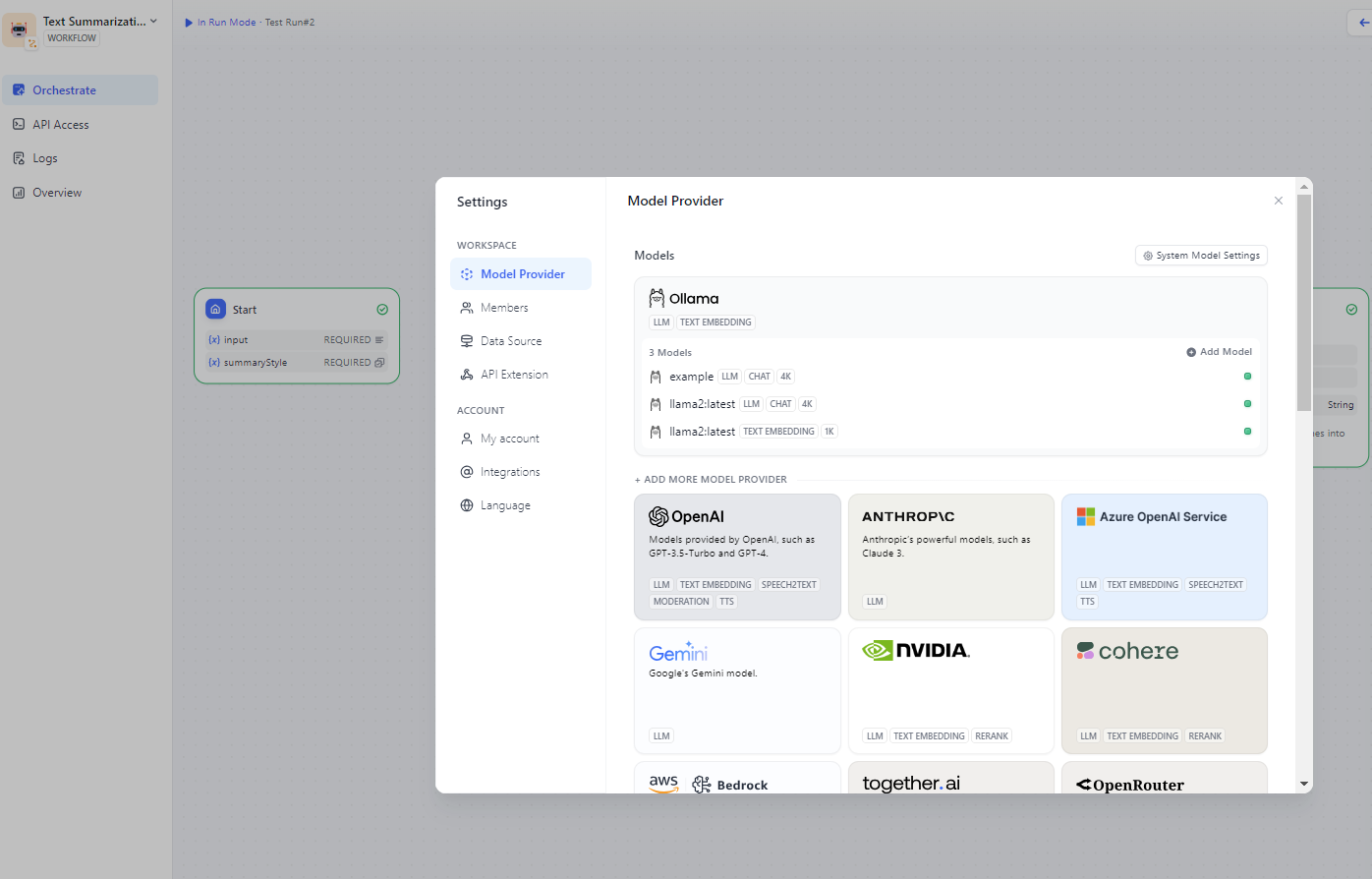

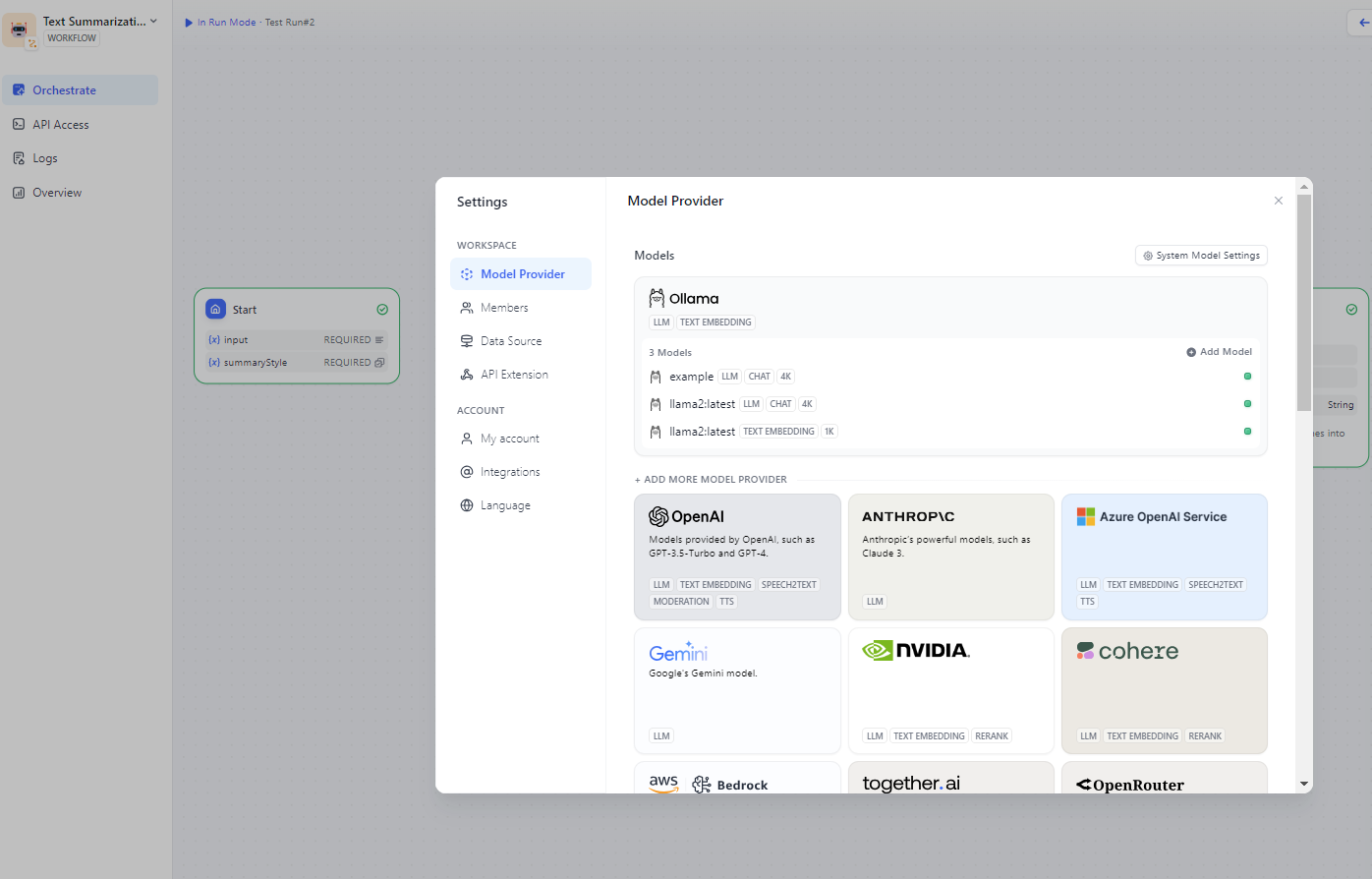

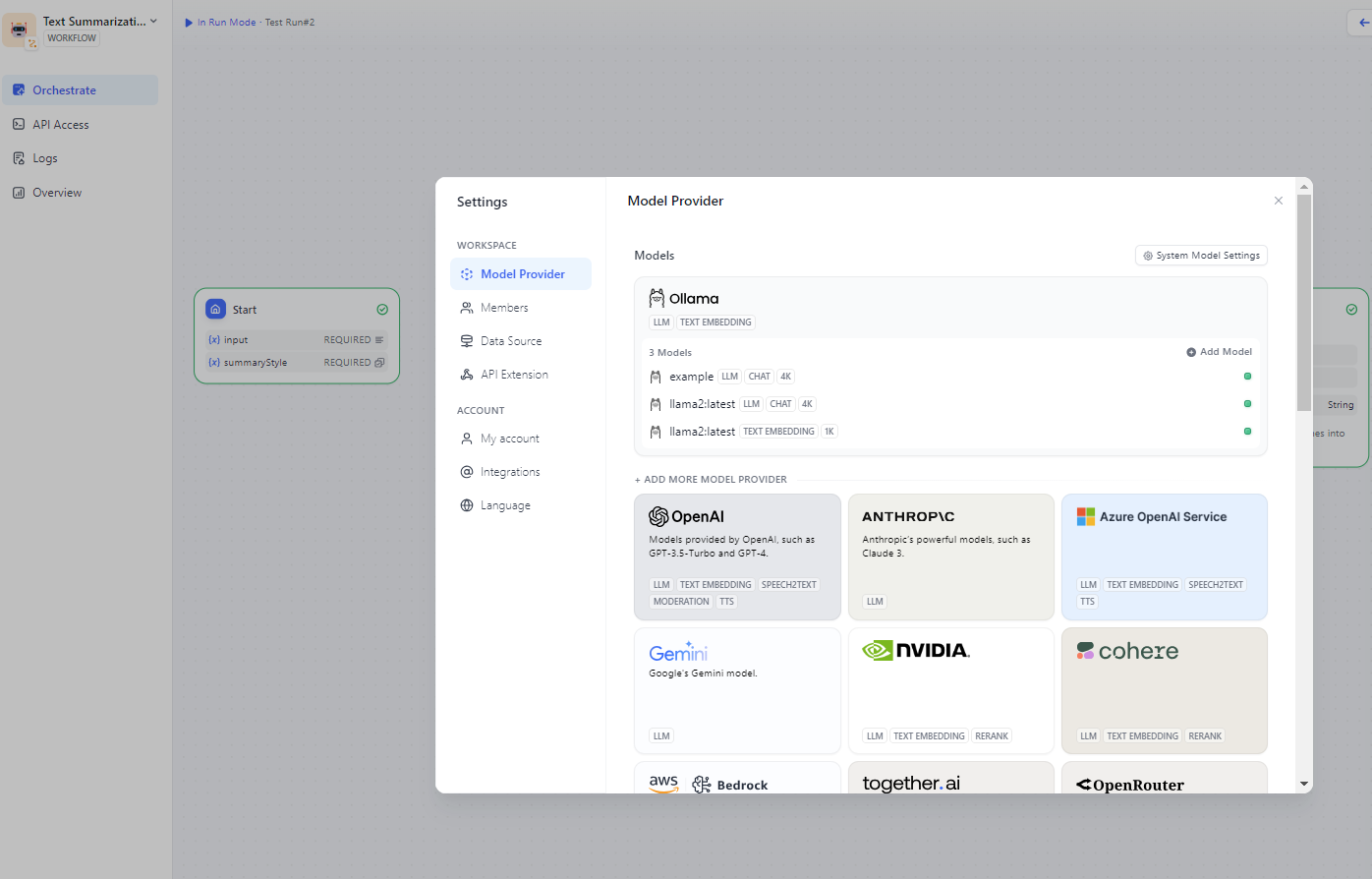

+Configure the Ollama URL in `Settings > Model Providers > Ollama`. For detailed instructions on how to do this, see the [Ollama Guide in the Dify Documentation](https://docs.dify.ai/tutorials/model-configuration/ollama).

+

+

+

+Once Ollama is successfully connected, you will see a list of Ollama models similar to the following:

+

+  +

+

-1. Set up the environment `export no_proxy=localhost,127.0.0.1` and start Ollama locally by `ollama serve`.

-2. Open http://localhost:3000 to view dify and change the model provider in setting including both LLM and embedding. For example, choose ollama.

-

-

-

-

-

-

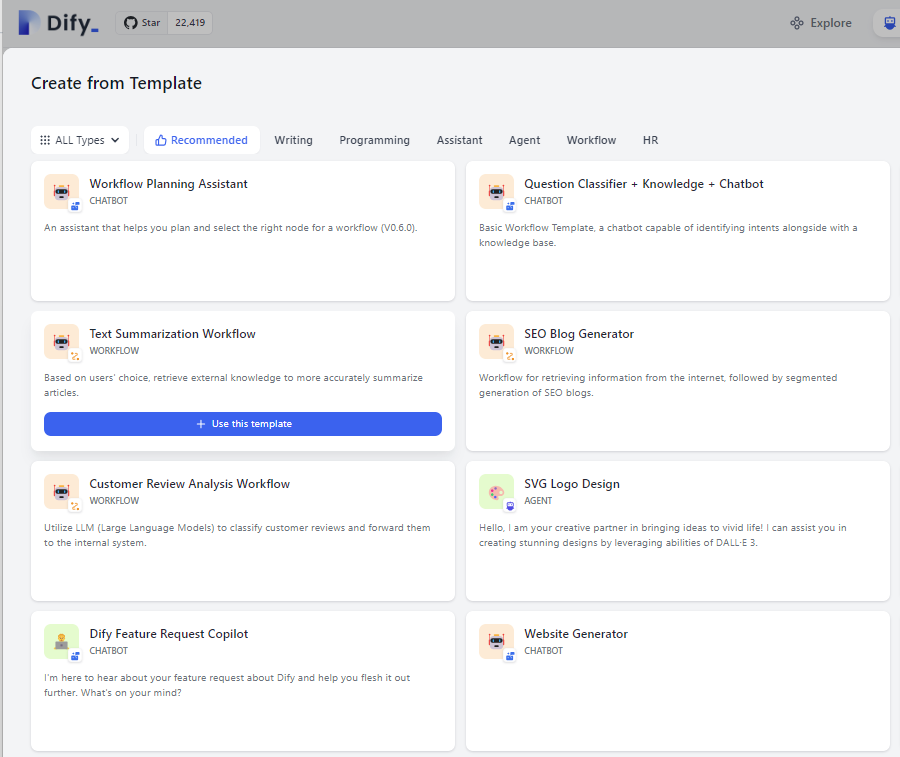

-

+  +

+

-5. Enter input and start to generate. You could find retrieval results and answers generated on the right.

-4. Add knowledge base and specify which type of embedding model to use.

-

-

-

+  +

+

+- Enter your input in the workflow and execute it. You'll find retrieval results and generated answers on the right.

+

+ +

+

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/fastchat_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/fastchat_quickstart.md

index 726b7684a72..2eb44aced0c 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/fastchat_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/fastchat_quickstart.md

@@ -61,6 +61,23 @@ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

python3 -m ipex_llm.serving.fastchat.ipex_llm_worker --model-path REPO_ID_OR_YOUR_MODEL_PATH --low-bit "sym_int4" --trust-remote-code --device "xpu"

```

+#### For self-speculative decoding example:

+

+You can use IPEX-LLM to run `self-speculative decoding` example. Refer to [here](https://github.com/intel-analytics/ipex-llm/tree/c9fac8c26bf1e1e8f7376fa9a62b32951dd9e85d/python/llm/example/GPU/Speculative-Decoding) for more details on intel MAX GPUs. Refer to [here](https://github.com/intel-analytics/ipex-llm/tree/c9fac8c26bf1e1e8f7376fa9a62b32951dd9e85d/python/llm/example/GPU/Speculative-Decoding) for more details on intel CPUs.

+

+```bash

+# Available low_bit format only including bf16 on CPU.

+source ipex-llm-init -t

+python3 -m ipex_llm.serving.fastchat.ipex_llm_worker --model-path lmsys/vicuna-7b-v1.5 --low-bit "bf16" --trust-remote-code --device "cpu" --speculative

+

+# Available low_bit format only including fp16 on GPU.

+source /opt/intel/oneapi/setvars.sh

+export ENABLE_SDP_FUSION=1

+export SYCL_CACHE_PERSISTENT=1

+export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+python3 -m ipex_llm.serving.fastchat.ipex_llm_worker --model-path lmsys/vicuna-7b-v1.5 --low-bit "fp16" --trust-remote-code --device "xpu" --speculative

+```

+

You can get output like this:

```bash

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/index.rst b/docs/readthedocs/source/doc/LLM/Quickstart/index.rst

index 750f84af166..a5c5877117e 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/index.rst

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/index.rst

@@ -16,12 +16,16 @@ This section includes efficient guide to show you how to:

* `Run Local RAG using Langchain-Chatchat on Intel GPU <./chatchat_quickstart.html>`_

* `Run Text Generation WebUI on Intel GPU <./webui_quickstart.html>`_

* `Run Open WebUI on Intel GPU <./open_webui_with_ollama_quickstart.html>`_

+* `Run PrivateGPT with IPEX-LLM on Intel GPU <./privateGPT_quickstart.html>`_

* `Run Coding Copilot (Continue) in VSCode with Intel GPU <./continue_quickstart.html>`_

+* `Run Dify on Intel GPU <./dify_quickstart.html>`_

* `Run llama.cpp with IPEX-LLM on Intel GPU <./llama_cpp_quickstart.html>`_

* `Run Ollama with IPEX-LLM on Intel GPU <./ollama_quickstart.html>`_

* `Run Llama 3 on Intel GPU using llama.cpp and ollama with IPEX-LLM <./llama3_llamacpp_ollama_quickstart.html>`_

* `Run IPEX-LLM Serving with FastChat <./fastchat_quickstart.html>`_

* `Finetune LLM with Axolotl on Intel GPU <./axolotl_quickstart.html>`_

+* `Run IPEX-LLM serving on Multiple Intel GPUs using DeepSpeed AutoTP and FastApi <./deepspeed_autotp_fastapi_quickstart.html>`

+

.. |bigdl_llm_migration_guide| replace:: ``bigdl-llm`` Migration Guide

.. _bigdl_llm_migration_guide: bigdl_llm_migration.html

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/llama3_llamacpp_ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/llama3_llamacpp_ollama_quickstart.md

index 2003360e942..cedb256fea2 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/llama3_llamacpp_ollama_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/llama3_llamacpp_ollama_quickstart.md

@@ -29,9 +29,9 @@ Suppose you have downloaded a [Meta-Llama-3-8B-Instruct-Q4_K_M.gguf](https://hug

#### 1.3 Run Llama3 on Intel GPU using llama.cpp

-##### Set Environment Variables

+#### Runtime Configuration

-Configure oneAPI variables by running the following command:

+To use GPU acceleration, several environment variables are required or recommended before running `llama.cpp`.

```eval_rst

.. tabs::

@@ -40,16 +40,24 @@ Configure oneAPI variables by running the following command:

.. code-block:: bash

source /opt/intel/oneapi/setvars.sh

+ export SYCL_CACHE_PERSISTENT=1

.. tab:: Windows

+

+ .. code-block:: bash

- .. note::

+ set SYCL_CACHE_PERSISTENT=1

- This is a required step for APT or offline installed oneAPI. Skip this step for PIP-installed oneAPI.

+```

- .. code-block:: bash

+```eval_rst

+.. tip::

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ If your local LLM is running on Intel Arc™ A-Series Graphics with Linux OS (Kernel 6.2), it is recommended to additionaly set the following environment variable for optimal performance:

+

+ .. code-block:: bash

+

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

```

@@ -122,9 +130,9 @@ Launch the Ollama service:

export no_proxy=localhost,127.0.0.1

export ZES_ENABLE_SYSMAN=1

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

export OLLAMA_NUM_GPU=999

source /opt/intel/oneapi/setvars.sh

+ export SYCL_CACHE_PERSISTENT=1

./ollama serve

@@ -137,13 +145,23 @@ Launch the Ollama service:

set no_proxy=localhost,127.0.0.1

set ZES_ENABLE_SYSMAN=1

set OLLAMA_NUM_GPU=999

- # Below is a required step for APT or offline installed oneAPI. Skip below step for PIP-installed oneAPI.

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ set SYCL_CACHE_PERSISTENT=1

ollama serve

```

+```eval_rst

+.. tip::

+

+ If your local LLM is running on Intel Arc™ A-Series Graphics with Linux OS (Kernel 6.2), it is recommended to additionaly set the following environment variable for optimal performance before executing `ollama serve`:

+

+ .. code-block:: bash

+

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+

+```

+

```eval_rst

.. note::

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md

index 9fc7e02b130..5b681babd37 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md

@@ -18,17 +18,37 @@ For Linux system, we recommend Ubuntu 20.04 or later (Ubuntu 22.04 is preferred)

Visit the [Install IPEX-LLM on Linux with Intel GPU](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html), follow [Install Intel GPU Driver](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#install-intel-gpu-driver) and [Install oneAPI](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#install-oneapi) to install GPU driver and Intel® oneAPI Base Toolkit 2024.0.

#### Windows

-Visit the [Install IPEX-LLM on Windows with Intel GPU Guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_windows_gpu.html), and follow [Install Prerequisites](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_windows_gpu.html#install-prerequisites) to install [Visual Studio 2022](https://visualstudio.microsoft.com/downloads/) Community Edition, latest [GPU driver](https://www.intel.com/content/www/us/en/download/785597/intel-arc-iris-xe-graphics-windows.html) and Intel® oneAPI Base Toolkit 2024.0.

+Visit the [Install IPEX-LLM on Windows with Intel GPU Guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_windows_gpu.html), and follow [Install Prerequisites](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_windows_gpu.html#install-prerequisites) to install [Visual Studio 2022](https://visualstudio.microsoft.com/downloads/) Community Edition and latest [GPU driver](https://www.intel.com/content/www/us/en/download/785597/intel-arc-iris-xe-graphics-windows.html).

**Note**: IPEX-LLM backend only supports the more recent GPU drivers. Please make sure your GPU driver version is equal or newer than `31.0.101.5333`, otherwise you might find gibberish output.

### 1 Install IPEX-LLM for llama.cpp

To use `llama.cpp` with IPEX-LLM, first ensure that `ipex-llm[cpp]` is installed.

-```cmd

-conda create -n llm-cpp python=3.11

-conda activate llm-cpp

-pip install --pre --upgrade ipex-llm[cpp]

+

+```eval_rst

+.. tabs::

+ .. tab:: Linux

+

+ .. code-block:: bash

+

+ conda create -n llm-cpp python=3.11

+ conda activate llm-cpp

+ pip install --pre --upgrade ipex-llm[cpp]

+

+ .. tab:: Windows

+

+ .. note::

+

+ for Windows, we use pip to install oneAPI.

+

+ .. code-block:: cmd

+

+ conda create -n llm-cpp python=3.11

+ conda activate llm-cpp

+ pip install dpcpp-cpp-rt==2024.0.2 mkl-dpcpp==2024.0.0 onednn==2024.0.0 # install oneapi

+ pip install --pre --upgrade ipex-llm[cpp]

+

```

**After the installation, you should have created a conda environment, named `llm-cpp` for instance, for running `llama.cpp` commands with IPEX-LLM.**

@@ -78,13 +98,9 @@ Then you can use following command to initialize `llama.cpp` with IPEX-LLM:

**Now you can use these executable files by standard llama.cpp's usage.**

-### 3 Example: Running community GGUF models with IPEX-LLM

-

-Here we provide a simple example to show how to run a community GGUF model with IPEX-LLM.

-

-#### Set Environment Variables

+#### Runtime Configuration

-Configure oneAPI variables by running the following command:

+To use GPU acceleration, several environment variables are required or recommended before running `llama.cpp`.

```eval_rst

.. tabs::

@@ -93,19 +109,31 @@ Configure oneAPI variables by running the following command:

.. code-block:: bash

source /opt/intel/oneapi/setvars.sh

+ export SYCL_CACHE_PERSISTENT=1

.. tab:: Windows

+

+ .. code-block:: bash

- .. note::

+ set SYCL_CACHE_PERSISTENT=1

- This is a required step for APT or offline installed oneAPI. Skip this step for PIP-installed oneAPI.

+```

- .. code-block:: bash

+```eval_rst

+.. tip::

+

+ If your local LLM is running on Intel Arc™ A-Series Graphics with Linux OS (Kernel 6.2), it is recommended to additionaly set the following environment variable for optimal performance:

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ .. code-block:: bash

+

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

```

+### 3 Example: Running community GGUF models with IPEX-LLM

+

+Here we provide a simple example to show how to run a community GGUF model with IPEX-LLM.

+

#### Model Download

Before running, you should download or copy community GGUF model to your current directory. For instance, `mistral-7b-instruct-v0.1.Q4_K_M.gguf` of [Mistral-7B-Instruct-v0.1-GGUF](https://huggingface.co/TheBloke/Mistral-7B-Instruct-v0.1-GGUF/tree/main).

@@ -273,3 +301,6 @@ If your program hang after `llm_load_tensors: SYCL_Host buffer size = xx.xx

#### How to set `-ngl` parameter

`-ngl` means the number of layers to store in VRAM. If your VRAM is enough, we recommend putting all layers on GPU, you can just set `-ngl` to a large number like 999 to achieve this goal.

+

+#### How to specificy GPU

+If your machine has multi GPUs, `llama.cpp` will default use all GPUs which may slow down your inference for model which can run on single GPU. You can add `-sm none` in your command to use one GPU only. Also, you can use `ONEAPI_DEVICE_SELECTOR=level_zero:[gpu_id]` to select device before excuting your command, more details can refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/KeyFeatures/multi_gpus_selection.html#oneapi-device-selector).

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md

index 695a41dfef0..96d63ed82a4 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md

@@ -56,6 +56,7 @@ You may launch the Ollama service as below:

export no_proxy=localhost,127.0.0.1

export ZES_ENABLE_SYSMAN=1

source /opt/intel/oneapi/setvars.sh

+ export SYCL_CACHE_PERSISTENT=1

./ollama serve

@@ -68,8 +69,7 @@ You may launch the Ollama service as below:

set OLLAMA_NUM_GPU=999

set no_proxy=localhost,127.0.0.1

set ZES_ENABLE_SYSMAN=1

- # Below is a required step for APT or offline installed oneAPI. Skip below step for PIP-installed oneAPI.

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ set SYCL_CACHE_PERSISTENT=1

ollama serve

@@ -81,6 +81,17 @@ You may launch the Ollama service as below:

Please set environment variable ``OLLAMA_NUM_GPU`` to ``999`` to make sure all layers of your model are running on Intel GPU, otherwise, some layers may run on CPU.

```

+```eval_rst

+.. tip::

+

+ If your local LLM is running on Intel Arc™ A-Series Graphics with Linux OS (Kernel 6.2), it is recommended to additionaly set the following environment variable for optimal performance before executing `ollama serve`:

+

+ .. code-block:: bash

+

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+

+```

+

```eval_rst

.. note::

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

index ed45d582d16..1eb2ec05418 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

@@ -2,7 +2,7 @@

[Open WebUI](https://github.com/open-webui/open-webui) is a user friendly GUI for running LLM locally; by porting it to [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily run LLM in [Open WebUI](https://github.com/open-webui/open-webui) on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

-See the demo of running Mistral:7B on Intel Arc A770 below.

+*See the demo of running Mistral:7B on Intel Arc A770 below.*

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/privateGPT_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/privateGPT_quickstart.md

new file mode 100644

index 00000000000..b8d662ab691

--- /dev/null

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/privateGPT_quickstart.md

@@ -0,0 +1,102 @@

+# Run PrivateGPT with IPEX-LLM on Intel GPU

+

+[PrivateGPT](https://github.com/zylon-ai/private-gpt) is a production-ready AI project that allows users to chat over documents, etc.; by integrating it with [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily leverage local LLMs running on Intel GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max).

+

+*See the demo of privateGPT running Mistral:7B on Intel Arc A770 below.*

+

+

+

+

+## Quickstart

+

+

+### 1. Install and Start `Ollama` Service on Intel GPU

+

+Follow the steps in [Run Ollama on Intel GPU Guide](./ollama_quickstart.md) to install and run Ollama on Intel GPU. Ensure that `ollama serve` is running correctly and can be accessed through a local URL (e.g., `https://127.0.0.1:11434`) or a remote URL (e.g., `http://your_ip:11434`).

+

+

+### 2. Install PrivateGPT

+

+#### Download PrivateGPT

+

+You can either clone the repository or download the source zip from [github](https://github.com/zylon-ai/private-gpt/archive/refs/heads/main.zip):

+```bash

+git clone https://github.com/zylon-ai/private-gpt

+```

+

+#### Install Dependencies

+

+Execute the following commands in a terminal to install the dependencies of PrivateGPT:

+

+```cmd

+cd private-gpt

+pip install poetry

+pip install ffmpy==0.3.1

+poetry install --extras "ui llms-ollama embeddings-ollama vector-stores-qdrant"

+```

+For more details, refer to the [PrivateGPT installation Guide](https://docs.privategpt.dev/installation/getting-started/main-concepts).

+

+

+### 3. Start PrivateGPT

+

+#### Configure PrivateGPT

+

+Change PrivateGPT settings by modifying `settings.yaml` and `settings-ollama.yaml`.

+

+* `settings.yaml` is always loaded and contains the default configuration. In order to run PrivateGPT locally, you need to replace the tokenizer path under the `llm` option with your local path.

+* `settings-ollama.yaml` is loaded if the ollama profile is specified in the PGPT_PROFILES environment variable. It can override configuration from the default `settings.yaml`. You can modify the settings in this file according to your preference. It is worth noting that to use the options `llm_model: ` and `embedding_model: `, you need to first use `ollama pull` to fetch the models locally.

+

+To learn more about the configuration of PrivatePGT, please refer to [PrivateGPT Main Concepts](https://docs.privategpt.dev/installation/getting-started/main-concepts).

+

+

+#### Start the service

+Please ensure that the Ollama server continues to run in a terminal while you're using the PrivateGPT.

+

+Run below commands to start the service in another terminal:

+

+```eval_rst

+.. tabs::

+ .. tab:: Linux

+

+ .. code-block:: bash

+

+ export no_proxy=localhost,127.0.0.1

+ PGPT_PROFILES=ollama make run

+

+ .. note:

+

+ Setting ``PGPT_PROFILES=ollama`` will load the configuration from ``settings.yaml`` and ``settings-ollama.yaml``.

+

+ .. tab:: Windows

+

+ .. code-block:: bash

+

+ set no_proxy=localhost,127.0.0.1

+ set PGPT_PROFILES=ollama

+ make run

+

+ .. note:

+

+ Setting ``PGPT_PROFILES=ollama`` will load the configuration from ``settings.yaml`` and ``settings-ollama.yaml``.

+```

+

+### 4. Using PrivateGPT

+

+#### Chat with the Model

+

+To chat with the LLM, select the "LLM Chat" option located in the upper left corner of the page. Type your messages at the bottom of the page and click the "Submit" button to receive responses from the model.

+

+

+

+  +

+

+

+

+

+#### Chat over Documents (RAG)

+

+To interact with documents, select the "Query Files" option in the upper left corner of the page. Click the "Upload File(s)" button to upload documents. After the documents have been vectorized, you can type your messages at the bottom of the page and click the "Submit" button to receive responses from the model based on the uploaded content.

+

+

+  +

diff --git a/docs/readthedocs/source/index.rst b/docs/readthedocs/source/index.rst

index 5a307f3f8b1..53b33be96c8 100644

--- a/docs/readthedocs/source/index.rst

+++ b/docs/readthedocs/source/index.rst

@@ -580,6 +580,20 @@ Verified Models

+

diff --git a/docs/readthedocs/source/index.rst b/docs/readthedocs/source/index.rst

index 5a307f3f8b1..53b33be96c8 100644

--- a/docs/readthedocs/source/index.rst

+++ b/docs/readthedocs/source/index.rst

@@ -580,6 +580,20 @@ Verified Models

link |

+

+ | CodeGemma |

+

+ link |

+

+ link |

+

+

+ | Command-R/cohere |

+

+ link |

+

+ link |

+

diff --git a/python/llm/dev/benchmark/all-in-one/run.py b/python/llm/dev/benchmark/all-in-one/run.py

index 31c3ecee8b2..714bb2e915a 100644

--- a/python/llm/dev/benchmark/all-in-one/run.py

+++ b/python/llm/dev/benchmark/all-in-one/run.py

@@ -33,6 +33,7 @@

sys.path.append(benchmark_util_path)

from benchmark_util import BenchmarkWrapper

from ipex_llm.utils.common.log4Error import invalidInputError

+from ipex_llm.utils.common import invalidInputError

LLAMA_IDS = ['meta-llama/Llama-2-7b-chat-hf','meta-llama/Llama-2-13b-chat-hf',

'meta-llama/Llama-2-70b-chat-hf','decapoda-research/llama-7b-hf',

@@ -50,7 +51,7 @@ def run_model_in_thread(model, in_out, tokenizer, result, warm_up, num_beams, in

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

@@ -110,6 +111,8 @@ def run_model(repo_id, test_api, in_out_pairs, local_model_hub=None, warm_up=1,

result = run_speculative_gpu(repo_id, local_model_hub, in_out_pairs, warm_up, num_trials, num_beams, batch_size)

elif test_api == 'pipeline_parallel_gpu':

result = run_pipeline_parallel_gpu(repo_id, local_model_hub, in_out_pairs, warm_up, num_trials, num_beams, low_bit, batch_size, cpu_embedding, fp16=use_fp16_torch_dtype, n_gpu=n_gpu)

+ else:

+ invalidInputError(False, "Unknown test_api " + test_api + ", please check your config.yaml.")

for in_out_pair in in_out_pairs:

if result and result[in_out_pair]:

@@ -238,7 +241,7 @@ def run_transformer_int4(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -304,7 +307,7 @@ def run_pytorch_autocast_bf16(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -374,7 +377,7 @@ def run_optimize_model(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -558,7 +561,7 @@ def run_optimize_model_gpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

@@ -630,7 +633,7 @@ def run_ipex_fp16_gpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

@@ -708,7 +711,7 @@ def run_bigdl_fp16_gpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

@@ -800,7 +803,7 @@ def run_deepspeed_transformer_int4_cpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

if local_rank == 0:

print("model generate cost: " + str(end - st))

@@ -887,10 +890,12 @@ def run_transformer_int4_gpu_win(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

if streaming:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

num_beams=num_beams, streamer=streamer)

else:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

@@ -994,10 +999,12 @@ def run_transformer_int4_fp16_gpu_win(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

if streaming:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

num_beams=num_beams, streamer=streamer)

else:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

@@ -1096,10 +1103,12 @@ def run_transformer_int4_loadlowbit_gpu_win(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

if streaming:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

num_beams=num_beams, streamer=streamer)

else:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

@@ -1183,7 +1192,7 @@ def run_transformer_autocast_bf16( repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -1254,7 +1263,7 @@ def run_bigdl_ipex_bf16(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids, total_list = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -1324,7 +1333,7 @@ def run_bigdl_ipex_int4(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids, total_list = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -1394,7 +1403,7 @@ def run_bigdl_ipex_int8(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids, total_list = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -1505,7 +1514,7 @@ def get_int_from_env(env_keys, default):

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

@@ -1584,11 +1593,12 @@ def run_speculative_cpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

if _enable_ipex:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams, attention_mask=attention_mask)

+ output_ids = model.generate(input_ids, do_sample=False,

+ max_new_tokens=out_len, min_new_tokens=out_len,

+ num_beams=num_beams, attention_mask=attention_mask)

else:

- output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ output_ids = model.generate(input_ids, do_sample=False,max_new_tokens=out_len,

+ min_new_tokens=out_len, num_beams=num_beams)

end = time.perf_counter()

print("model generate cost: " + str(end - st))

output = tokenizer.batch_decode(output_ids)

@@ -1659,7 +1669,7 @@ def run_speculative_gpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

@@ -1779,7 +1789,7 @@ def run_pipeline_parallel_gpu(repo_id,

for i in range(num_trials + warm_up):

st = time.perf_counter()

output_ids = model.generate(input_ids, do_sample=False, max_new_tokens=out_len,

- num_beams=num_beams)

+ min_new_tokens=out_len, num_beams=num_beams)

torch.xpu.synchronize()

end = time.perf_counter()

output_ids = output_ids.cpu()

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma/README.md b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma/README.md

new file mode 100644

index 00000000000..a959d278db6

--- /dev/null

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma/README.md

@@ -0,0 +1,75 @@

+# CodeGemma

+In this directory, you will find examples on how you could apply IPEX-LLM INT4 optimizations on CodeGemma models. For illustration purposes, we utilize the [google/codegemma-7b-it](https://huggingface.co/google/codegemma-7b-it) as reference CodeGemma models.

+

+## 0. Requirements

+To run these examples with IPEX-LLM, we have some recommended requirements for your machine, please refer to [here](../README.md#recommended-requirements) for more information.

+

+## Example: Predict Tokens using `generate()` API

+In the example [generate.py](./generate.py), we show a basic use case for a CodeGemma model to predict the next N tokens using `generate()` API, with IPEX-LLM INT4 optimizations.

+### 1. Install

+We suggest using conda to manage the Python environment. For more information about conda installation, please refer to [here](https://docs.conda.io/en/latest/miniconda.html#).

+

+After installing conda, create a Python environment for IPEX-LLM:

+```bash

+conda create -n llm python=3.11 # recommend to use Python 3.11

+conda activate llm

+

+# install ipex-llm with 'all' option

+pip install ipex-llm[all]

+

+# According to CodeGemma's requirement, please make sure you are using a stable version of Transformers, 4.38.1 or newer.

+pip install transformers==4.38.1

+```

+

+### 2. Run

+```

+python ./generate.py --repo-id-or-model-path REPO_ID_OR_MODEL_PATH --prompt PROMPT --n-predict N_PREDICT

+```

+

+Arguments info:

+- `--repo-id-or-model-path REPO_ID_OR_MODEL_PATH`: argument defining the huggingface repo id for the CodeGemma model to be downloaded, or the path to the huggingface checkpoint folder. It is default to be `'google/codegemma-7b-it'`.

+- `--prompt PROMPT`: argument defining the prompt to be infered (with integrated prompt format for chat). It is default to be `'Write a hello world program'`.

+- `--n-predict N_PREDICT`: argument defining the max number of tokens to predict. It is default to be `32`.

+

+> **Note**: When loading the model in 4-bit, IPEX-LLM converts linear layers in the model into INT4 format. In theory, a *X*B model saved in 16-bit will requires approximately 2*X* GB of memory for loading, and ~0.5*X* GB memory for further inference.

+>

+> Please select the appropriate size of the CodeLlama model based on the capabilities of your machine.

+

+#### 2.1 Client

+On client Windows machine, it is recommended to run directly with full utilization of all cores:

+```powershell

+python ./generate.py

+```

+

+#### 2.2 Server

+For optimal performance on server, it is recommended to set several environment variables (refer to [here](../README.md#best-known-configuration-on-linux) for more information), and run the example with all the physical cores of a single socket.

+

+E.g. on Linux,

+```bash

+# set IPEX-LLM env variables

+source ipex-llm-init

+

+# e.g. for a server with 48 cores per socket

+export OMP_NUM_THREADS=48

+numactl -C 0-47 -m 0 python ./generate.py

+```

+

+#### 2.3 Sample Output

+#### [google/codegemma-7b-it](https://huggingface.co/google/codegemma-7b-it)

+```log

+Inference time: xxxx s

+-------------------- Prompt --------------------

+user

+Write a hello world program

+model

+

+-------------------- Output --------------------

+user

+Write a hello world program

+model

+```python

+print("Hello, world!")

+```

+

+This program will print the message "Hello, world!" to the console.

+```

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma/generate.py b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma/generate.py

new file mode 100644

index 00000000000..8e370f75214

--- /dev/null

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/codegemma/generate.py

@@ -0,0 +1,71 @@

+#

+# Copyright 2016 The BigDL Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+import torch

+import time

+import argparse

+

+from ipex_llm.transformers import AutoModelForCausalLM

+from transformers import AutoTokenizer

+

+# The instruction-tuned models use a chat template that must be adhered to for conversational use.

+# see https://huggingface.co/google/codegemma-7b-it#chat-template.

+chat = [

+ { "role": "user", "content": "Write a hello world program" },

+]

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser(description='Predict Tokens using `generate()` API for CodeGemma model')

+ parser.add_argument('--repo-id-or-model-path', type=str, default="google/codegemma-7b-it",

+ help='The huggingface repo id for the CodeGemma to be downloaded'

+ ', or the path to the huggingface checkpoint folder')

+ parser.add_argument('--prompt', type=str, default="Write a hello world program",

+ help='Prompt to infer')

+ parser.add_argument('--n-predict', type=int, default=32,

+ help='Max tokens to predict')

+

+ args = parser.parse_args()

+ model_path = args.repo_id_or_model_path

+

+ # Load model in 4 bit,

+ # which convert the relevant layers in the model into INT4 format

+ # To fix the issue that the output of codegemma-7b-it is abnormal, skip the 'lm_head' module during optimization

+ model = AutoModelForCausalLM.from_pretrained(model_path,

+ load_in_4bit=True,

+ trust_remote_code=True,

+ use_cache=True,

+ modules_to_not_convert=["lm_head"])

+

+ # Load tokenizer

+ tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

+

+ # Generate predicted tokens

+ with torch.inference_mode():

+ chat[0]['content'] = args.prompt

+ prompt = tokenizer.apply_chat_template(chat, tokenize=False, add_generation_prompt=True)

+ input_ids = tokenizer.encode(prompt, return_tensors="pt")

+

+ # start inference

+ st = time.time()

+ output = model.generate(input_ids,

+ max_new_tokens=args.n_predict)

+ end = time.time()

+ output_str = tokenizer.decode(output[0], skip_special_tokens=True)

+ print(f'Inference time: {end-st} s')

+ print('-'*20, 'Prompt', '-'*20)

+ print(prompt)

+ print('-'*20, 'Output', '-'*20)

+ print(output_str)

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere/README.md b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere/README.md

new file mode 100644

index 00000000000..d104c84d89e

--- /dev/null

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere/README.md

@@ -0,0 +1,64 @@

+# CoHere/command-r

+In this directory, you will find examples on how you could apply IPEX-LLM INT4 optimizations on cohere models. For illustration purposes, we utilize the [CohereForAI/c4ai-command-r-v01](https://huggingface.co/CohereForAI/c4ai-command-r-v01) as reference model.

+

+## 0. Requirements

+To run these examples with IPEX-LLM, we have some recommended requirements for your machine, please refer to [here](../README.md#recommended-requirements) for more information.

+

+## Example: Predict Tokens using `generate()` API

+In the example [generate.py](./generate.py), we show a basic use case for a cohere model to predict the next N tokens using `generate()` API, with IPEX-LLM INT4 optimizations.

+### 1. Install

+We suggest using conda to manage environment:

+```bash

+conda create -n llm python=3.11

+conda activate llm

+

+pip install --pre --upgrade ipex-llm[all] # install ipex-llm with 'all' option

+pip install tansformers==4.40.0

+```

+

+### 2. Run

+```

+python ./generate.py --repo-id-or-model-path REPO_ID_OR_MODEL_PATH --prompt PROMPT --n-predict N_PREDICT

+```

+

+Arguments info:

+- `--repo-id-or-model-path REPO_ID_OR_MODEL_PATH`: argument defining the huggingface repo id for the cohere model to be downloaded, or the path to the huggingface checkpoint folder. It is default to be `'CohereForAI/c4ai-command-r-v01'`.

+- `--prompt PROMPT`: argument defining the prompt to be infered (with integrated prompt format for chat). It is default to be `'What is AI?'`.

+- `--n-predict N_PREDICT`: argument defining the max number of tokens to predict. It is default to be `32`.

+

+> **Note**: When loading the model in 4-bit, IPEX-LLM converts linear layers in the model into INT4 format. In theory, a *X*B model saved in 16-bit will requires approximately 2*X* GB of memory for loading, and ~0.5*X* GB memory for further inference.

+>

+> Please select the appropriate size of the cohere model based on the capabilities of your machine.

+

+#### 2.1 Client

+On client Windows machine, it is recommended to run directly with full utilization of all cores:

+```powershell

+python ./generate.py

+```

+

+#### 2.2 Server

+For optimal performance on server, it is recommended to set several environment variables (refer to [here](../README.md#best-known-configuration-on-linux) for more information), and run the example with all the physical cores of a single socket.

+

+E.g. on Linux,

+```bash

+# set IPEX-LLM env variables

+source ipex-llm-init -t

+

+# e.g. for a server with 48 cores per socket

+export OMP_NUM_THREADS=48

+numactl -C 0-47 -m 0 python ./generate.py

+```

+

+#### 2.3 Sample Output

+#### [CohereForAI/c4ai-command-r-v01](https://huggingface.co/CohereForAI/c4ai-command-r-v01)

+```log

+Inference time: xxxxx s

+-------------------- Prompt --------------------

+

+<|START_OF_TURN_TOKEN|><|USER_TOKEN|>What is AI?<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

+

+-------------------- Output --------------------

+

+<|START_OF_TURN_TOKEN|><|USER_TOKEN|>What is AI?<|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

+Artificial Intelligence, or AI, is a fascinating field of study that aims to create intelligent machines that can mimic human cognitive functions and perform complex tasks. AI strives to

+```

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere/generate.py b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere/generate.py

new file mode 100644

index 00000000000..d215b00b153

--- /dev/null

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/cohere/generate.py

@@ -0,0 +1,69 @@

+#

+# Copyright 2016 The BigDL Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+#

+

+import torch

+import time

+import argparse

+

+from ipex_llm.transformers import AutoModelForCausalLM

+from transformers import AutoTokenizer

+

+# you could tune the prompt based on your own model,

+# Refer to https://huggingface.co/CohereForAI/c4ai-command-r-v01

+COHERE_PROMPT_FORMAT = """

+<|START_OF_TURN_TOKEN|><|USER_TOKEN|>{prompt}<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

+"""

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser(description='Predict Tokens using `generate()` API for cohere model')

+ parser.add_argument('--repo-id-or-model-path', type=str, default="CohereForAI/c4ai-command-r-v01",

+ help='The huggingface repo id for the cohere to be downloaded'

+ ', or the path to the huggingface checkpoint folder')

+ parser.add_argument('--prompt', type=str, default="What is AI?",

+ help='Prompt to infer')

+ parser.add_argument('--n-predict', type=int, default=32,

+ help='Max tokens to predict')

+

+ args = parser.parse_args()

+ model_path = args.repo_id_or_model_path

+

+ # Load model in 4 bit,

+ # which convert the relevant layers in the model into INT4 format

+ model = AutoModelForCausalLM.from_pretrained(model_path,

+ load_in_4bit=True,

+ trust_remote_code=True)

+

+ # Load tokenizer

+ tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

+

+ # Generate predicted tokens

+ with torch.inference_mode():

+ prompt = COHERE_PROMPT_FORMAT.format(prompt=args.prompt)

+ input_ids = tokenizer.encode(prompt, return_tensors="pt")

+ st = time.time()

+ # if your selected model is capable of utilizing previous key/value attentions

+ # to enhance decoding speed, but has `"use_cache": false` in its model config,

+ # it is important to set `use_cache=True` explicitly in the `generate` function

+ # to obtain optimal performance with IPEX-LLM INT4 optimizations

+ output = model.generate(input_ids,

+ max_new_tokens=args.n_predict)

+ end = time.time()

+ output_str = tokenizer.decode(output[0], skip_special_tokens=True)

+ print(f'Inference time: {end-st} s')

+ print('-'*20, 'Prompt', '-'*20)

+ print(prompt)

+ print('-'*20, 'Output', '-'*20)

+ print(output_str)

diff --git a/python/llm/example/CPU/PyTorch-Models/Model/codegemma/README.md b/python/llm/example/CPU/PyTorch-Models/Model/codegemma/README.md

new file mode 100644

index 00000000000..22bdabc5763

--- /dev/null

+++ b/python/llm/example/CPU/PyTorch-Models/Model/codegemma/README.md

@@ -0,0 +1,73 @@

+# CodeGemma

+In this directory, you will find examples on how you could use IPEX-LLM `optimize_model` API to accelerate CodeGemma models. For illustration purposes, we utilize the [google/codegemma-7b-it](https://huggingface.co/google/codegemma-7b-it) as reference CodeGemma models.

+

+## 0. Requirements

+To run these examples with IPEX-LLM, we have some recommended requirements for your machine, please refer to [here](../README.md#recommended-requirements) for more information.

+

+## Example: Predict Tokens using `generate()` API

+In the example [generate.py](./generate.py), we show a basic use case for a CodeGemma model to predict the next N tokens using `generate()` API, with IPEX-LLM INT4 optimizations.

+### 1. Install

+We suggest using conda to manage the Python environment. For more information about conda installation, please refer to [here](https://docs.conda.io/en/latest/miniconda.html#).

+

+After installing conda, create a Python environment for IPEX-LLM:

+```bash

+conda create -n llm python=3.11 # recommend to use Python 3.11

+conda activate llm

+

+# install ipex-llm with 'all' option

+pip install --pre --upgrade ipex-llm[all]

+

+# According to CodeGemma's requirement, please make sure you are using a stable version of Transformers, 4.38.1 or newer.

+pip install transformers==4.38.1

+```

+

+### 2. Run

+After setting up the Python environment, you could run the example by following steps.

+

+#### 2.1 Client

+On client Windows machines, it is recommended to run directly with full utilization of all cores:

+```powershell

+python ./generate.py --repo-id-or-model-path REPO_ID_OR_MODEL_PATH --prompt PROMPT --n-predict N_PREDICT

+```

+More information about arguments can be found in [Arguments Info](#23-arguments-info) section. The expected output can be found in [Sample Output](#24-sample-output) section.

+

+#### 2.2 Server

+For optimal performance on server, it is recommended to set several environment variables (refer to [here](../README.md#best-known-configuration-on-linux) for more information), and run the example with all the physical cores of a single socket.

+

+E.g. on Linux,

+```bash

+# set IPEX-LLM env variables

+source ipex-llm-init

+

+# e.g. for a server with 48 cores per socket

+export OMP_NUM_THREADS=48

+numactl -C 0-47 -m 0 python ./generate.py --repo-id-or-model-path REPO_ID_OR_MODEL_PATH --prompt PROMPT --n-predict N_PREDICT

+```

+More information about arguments can be found in [Arguments Info](#23-arguments-info) section. The expected output can be found in [Sample Output](#24-sample-output) section.

+

+#### 2.3 Arguments Info

+In the example, several arguments can be passed to satisfy your requirements:

+

+- `--repo-id-or-model-path REPO_ID_OR_MODEL_PATH`: argument defining the huggingface repo id for the CodeGemma model to be downloaded, or the path to the huggingface checkpoint folder. It is default to be `'google/codegemma-7b-it'`.

+- `--prompt PROMPT`: argument defining the prompt to be infered (with integrated prompt format for chat). It is default to be `'Write a hello world program'`.

+- `--n-predict N_PREDICT`: argument defining the max number of tokens to predict. It is default to be `32`.