Introduction to Diffusion Real-Time Event Stream through a simple application using Diffusion Cloud, Apache Kafka and the ESPN live REST API.

A simple project illustrating real-time replication and fan-out of live ESPN sports events from the ESPN live REST API to a Kafka cluster, through a Diffusion Cloud instance, using our Kafka Adapter and REST Adapter.

This tutorial will help you consume live sports events from the ESPN live REST API, filter the portion of the data you are interested in, and transform it on-the-fly via our powerful Topic Views. You then deliver only that portion to a Kafka cluster and reduce the data transferred by using our Data-Deltas compression, which delivers the same information with up to 90% data savings.
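To make the idea behind delta compression concrete, here is a minimal Python sketch that sends only the fields that changed between two consecutive updates. It is an illustration of the principle only, not Diffusion's actual delta algorithm; the helper `changed_fields` and the score-update field names are hypothetical, and the sketch ignores removed fields and nested changes.

```python
import json


def changed_fields(previous: dict, current: dict) -> dict:
    """Return the top-level fields of `current` that are new or differ from `previous`."""
    missing = object()  # sentinel so that a stored None is not mistaken for "absent"
    return {key: value for key, value in current.items()
            if previous.get(key, missing) != value}


# Hypothetical score update; the field names are illustrative only.
previous = {
    "event": "match-1",
    "home": "Team A",
    "away": "Team B",
    "homeScore": 1,
    "awayScore": 0,
    "clock": "52:10",
}
current = {**previous, "homeScore": 2, "clock": "52:41"}

delta = changed_fields(previous, current)
full_size = len(json.dumps(current))
delta_size = len(json.dumps(delta))
print(f"full update: {full_size} bytes, delta: {delta_size} bytes")
```

The saving depends entirely on how much of each update actually changes, so the sizes printed here say nothing about what you will see on real data.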

This tutorial focuses purely on the no-code solution for delivering event data between REST APIs and Kafka clusters when not all of the firehose data from the source needs to be replicated or fanned out to Kafka.

This tutorial introduces the concept of Topic Views, a dynamic mechanism for mapping part of a server's Topic Tree to another. This enables real-time data transformation before replicating to a remote cluster, and lets you create dynamic data models based on on-the-fly data (e.g. Kafka firehose data or REST API data sources). This lesson also shows how to use our REST Adapter and our Kafka Adapter to ingest and broadcast sports data, using Diffusion Topic Views to consume only what you need.

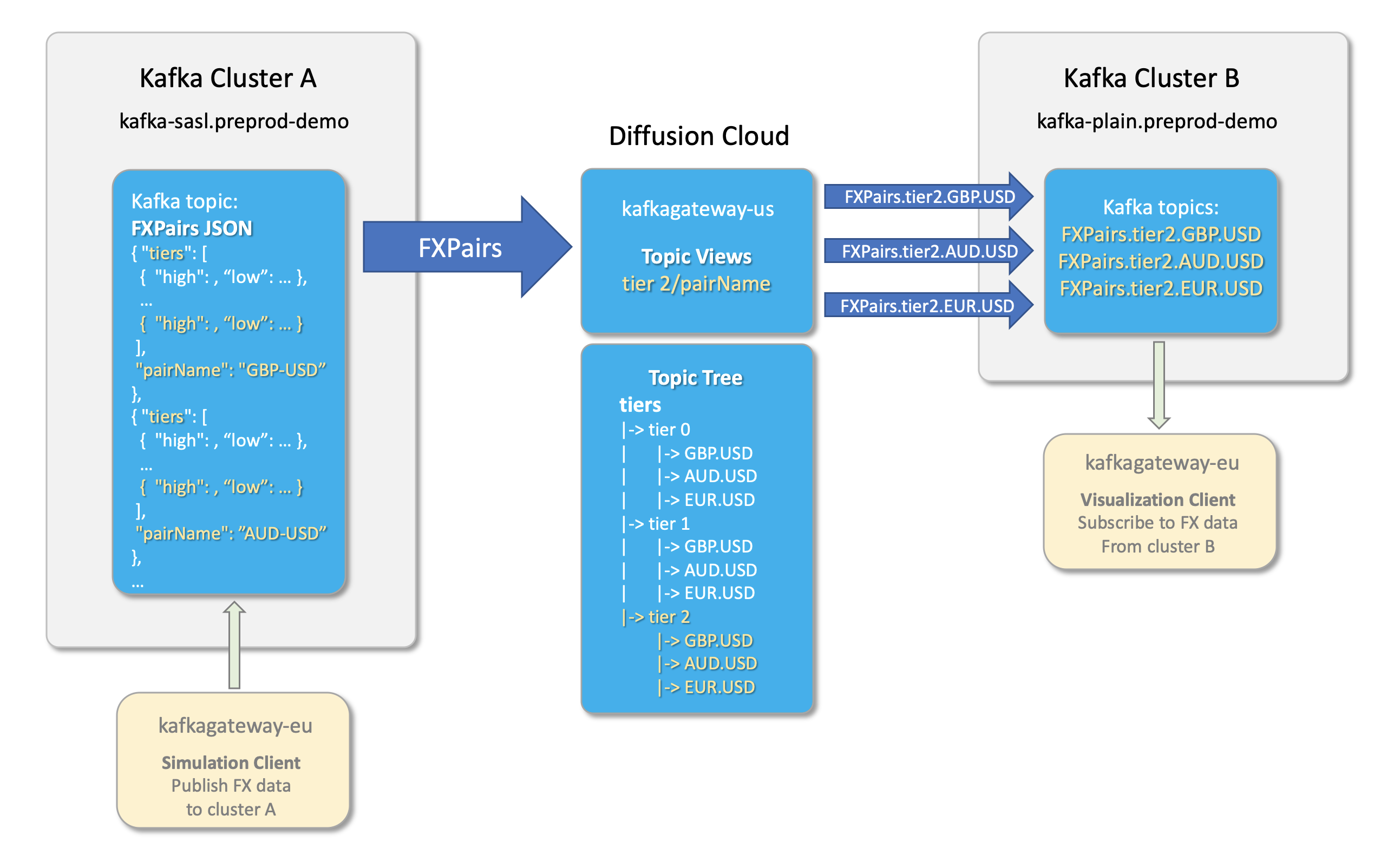

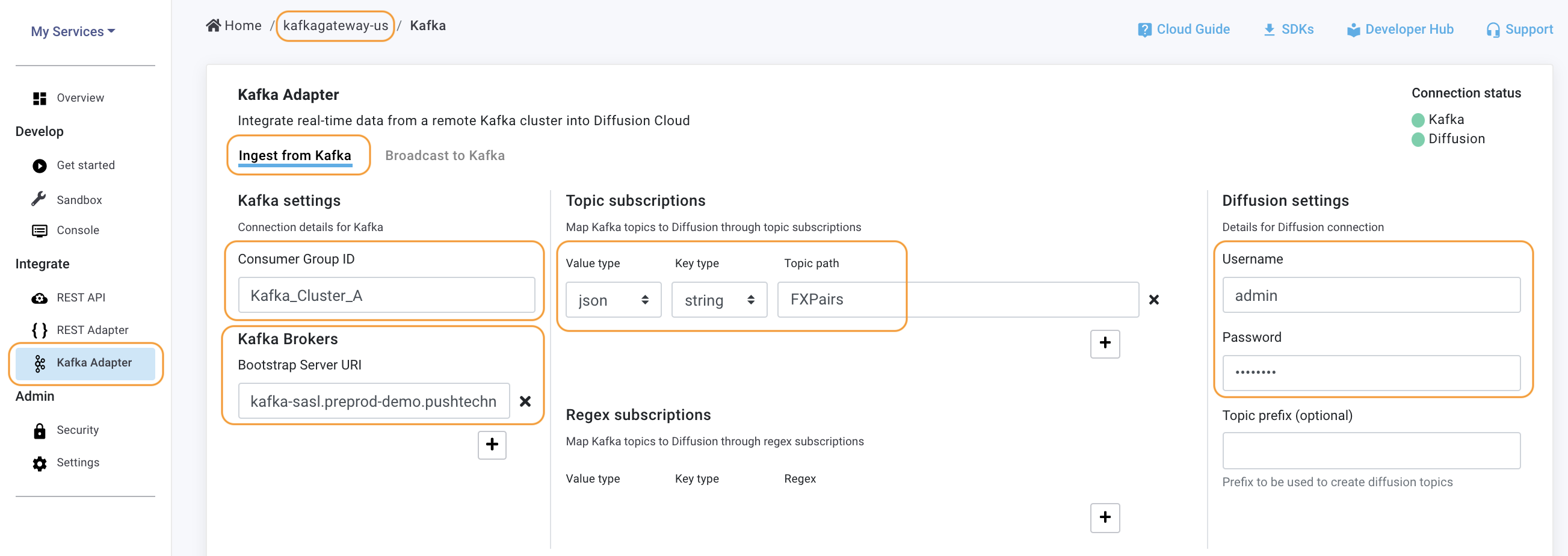

Adapters > Kafka Adapter > Ingest from Kafka Config:

Bootstrap Server > connect to your Kafka cluster A (eg: "kafka-sasl.preprod-demo.pushtechnology")

Diffusion service credentials > admin, password (use the "Security" tab to create a user or admin account)

Kafka Topic subscription > the source topic from your Kafka cluster (eg: "FXPairs")

Kafka Topic value type > we are using JSON, but it can also be string, integer, byte, etc.

Kafka Topic key type > use string type for this code example.

We can see that the events from the FXPairs Kafka topic (from cluster A) are now being published to the Diffusion topic path: FXPairs. If there are no new events, it might be because the FXPairs topic has not received any updates from Kafka yet.

Step 3: Create Topic Views using Source value directives

Source value directives come in two forms: the expand() directive creates multiple reference topics from a single JSON source topic, and the value() directive creates a new JSON value from a subset of a JSON source topic.
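As a rough mental model of what selecting a subset of a JSON source value means, the following Python sketch resolves a JSON pointer against a sample payload. It only simulates the idea outside Diffusion; `resolve_pointer` is a hypothetical helper, and the payload shape (a `value` object holding a `pairs` array with `pairName`, `bid` and `ask` fields) is an assumption made for illustration.

```python
def resolve_pointer(document, pointer: str):
    """Resolve a JSON pointer (RFC 6901 style) such as "/value/pairs/0"."""
    if pointer == "":
        return document  # the empty pointer refers to the whole document
    node = document
    for token in pointer[1:].split("/"):
        # Undo the two escape sequences defined for JSON pointers.
        token = token.replace("~1", "/").replace("~0", "~")
        # Arrays are indexed by number, objects by key.
        node = node[int(token)] if isinstance(node, list) else node[token]
    return node


# Assumed shape of one FXPairs event; field names are illustrative only.
source = {
    "value": {
        "timestamp": 1700000000,
        "pairs": [{"pairName": "GBP-USD", "bid": 1.25, "ask": 1.26}],
    }
}

subset = resolve_pointer(source, "/value/pairs/0")
print(subset)
```

The reference topic would then carry only `subset` rather than the whole source value.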

We are going to map the FXPairs topic stream (received from Kafka cluster A) to a new Diffusion Topic View with path: pairs/<expand(/value/pairs,/pairName)>/ where /value/pairs,/pairName selects the currency pairName in the Kafka payload (part of the JSON structure in the FXPairs Kafka topic).

This Topic View takes care of dynamic branching and routing of event streams in real time, sending only the specific currency pair from Kafka cluster A to Kafka cluster B rather than the whole stream.

As new events come in from the Kafka cluster A firehose, Diffusion dynamically branches and routes the currency pairs on-the-fly while replicating and fanning out to Kafka cluster B.

Note: The topic path will dynamically change as new currency pair values come in.
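The branching described above can be pictured with a small Python simulation: each element of the array at /value/pairs becomes its own topic, named after that element's /pairName. This is a sketch of the behaviour, not Diffusion code; the `lookup` and `expand` helpers are hypothetical, and the event shape is assumed.

```python
from functools import reduce


def lookup(node, pointer: str):
    """Follow an object-key-only JSON pointer such as "/value/pairs"."""
    return reduce(lambda current, key: current[key], pointer.strip("/").split("/"), node)


def expand(source: dict, source_pointer: str, path_pointer: str, prefix: str = "pairs") -> dict:
    """Map each array element under `source_pointer` to its own topic path."""
    topics = {}
    for element in lookup(source, source_pointer):
        # The topic name comes from inside the element, so new pair names
        # create new branches without any change to the mapping itself.
        topics[f"{prefix}/{lookup(element, path_pointer)}"] = element
    return topics


# Assumed shape of one FXPairs event; field names are illustrative only.
event = {
    "value": {
        "pairs": [
            {"pairName": "GBP-USD", "bid": 1.25},
            {"pairName": "EUR-USD", "bid": 1.08},
        ]
    }
}

topics = expand(event, "/value/pairs", "/pairName")
for path in sorted(topics):
    print(path, topics[path])
```

Feeding in an event that contains a pair not seen before adds a new path to the result, which mirrors how the topic tree grows as new currency pairs arrive.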

When clicking on the "+" for the "tiers" topic tree, the following image shows a Topic View for the following specification:

Lesson 1: Mapping Topics

Lesson 2: Mapping Topic Branches

Lesson 3: Extracting Source Topic Values

Lesson 4: Throttling Reference Topics

Adapters > Kafka Adapter > Broadcast to Kafka Config:

Bootstrap Server > connect to your Kafka cluster A (eg: "kafka-plain.preprod-demo.pushtechnology")

Diffusion service credentials > admin, password (use the "Security" tab to create a user or admin account)

Topic subscription > the source topic from Diffusion to be broadcast to Kafka cluster B (eg: from "tiers/2/GBP-USD" to "FXPairs.tier2.GBP.USD")

Topic value type > we are using JSON, but it can also be string, integer, byte, etc.

Kafka Topic key type > use string type for this code example.
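The example mapping in the Topic subscription field follows a simple naming convention, which the Python sketch below spells out. The adapter configuration is what performs the mapping in practice; `kafka_topic_for` is a hypothetical helper that only reproduces the convention from the "tiers/2/GBP-USD" example, and the assumption that every path has exactly three segments under "tiers" is ours.

```python
def kafka_topic_for(diffusion_path: str, prefix: str = "FXPairs") -> str:
    """Derive a Kafka topic name from a Diffusion path shaped like "tiers/<tier>/<pair>"."""
    parts = diffusion_path.split("/")
    if len(parts) != 3 or parts[0] != "tiers":
        raise ValueError(f"expected a path like 'tiers/<tier>/<pair>', got {diffusion_path!r}")
    _, tier, pair = parts
    # Dots replace both the path separators and the hyphen inside the pair name.
    return f"{prefix}.tier{tier}.{pair.replace('-', '.')}"


print(kafka_topic_for("tiers/2/GBP-USD"))
```

Rejecting unexpected paths early keeps a mistyped subscription from silently producing an oddly named Kafka topic.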

- Download our code examples or clone them to your local environment:

git clone https://github.com/diffusion-playground/kafka-integration-no-code/

- A Diffusion service (Cloud or On-Premise), version 6.6 (update to latest preview version) or greater. Create a service here.

- Follow our Quick Start Guide and get your service up in a minute!

Really easy, just open the index.html file locally and off you go!