Add Sleuth to the classpath of a Spring Boot application (see below for Maven and Gradle examples), and you will see the correlation data being collected in logs, as long as you are logging requests.

Example HTTP handler:

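import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class DemoController {

    private static final Logger log = LoggerFactory.getLogger(DemoController.class);

    @RequestMapping("/")
    public String home() {
        log.info("Handling home");
        // ...
        return "Hello World";
    }

}

You will see the calls to home() traced in the logs and in Zipkin, if that is configured.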

Note: Instead of logging the request in the handler explicitly, you could set logging.level.org.springframework.web.servlet.DispatcherServlet=DEBUG.

Note: Set spring.application.name=bar (for instance) to see the service name as well as the trace and span ids.

Spring Cloud Sleuth implements a distributed tracing solution for Spring Cloud.

Spring Cloud Sleuth borrows Dapper’s terminology.

Span: The basic unit of work. For example, sending an RPC is a new span, as is sending a response to an RPC. Spans are identified by a unique 64-bit ID for the span and another 64-bit ID for the trace the span is a part of. Spans also have other data, such as descriptions, timestamped events, key-value annotations (tags), the ID of the span that caused them, and process IDs (normally IP address).

Spans are started and stopped, and they keep track of their timing information. Once you create a span, you must stop it at some point in the future.

Tip: The initial span that starts a trace is called a root span. The span id of that span is equal to the trace id.
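The span properties described above can be sketched in plain Java. This is a minimal illustration only: `SimpleSpan` and its methods are hypothetical names, not Sleuth's own `Span` class, and random 64-bit IDs are an assumption about how IDs might be generated.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical, simplified span model for illustration; not Sleuth's Span class.
final class SimpleSpan {
    final long traceId;   // 64-bit ID shared by every span in the same trace
    final long spanId;    // 64-bit ID unique to this span
    final Long parentId;  // ID of the span that caused this one; null for a root span
    final String name;
    private final long startNanos = System.nanoTime();
    private long endNanos = -1; // stays -1 until the span is stopped

    private SimpleSpan(long traceId, long spanId, Long parentId, String name) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.parentId = parentId;
        this.name = name;
    }

    // A root span starts a new trace, so its span ID doubles as the trace ID.
    static SimpleSpan root(String name) {
        long id = ThreadLocalRandom.current().nextLong();
        return new SimpleSpan(id, id, null, name);
    }

    // A child span stays in the parent's trace and remembers the parent's span ID.
    SimpleSpan child(String name) {
        long id = ThreadLocalRandom.current().nextLong();
        return new SimpleSpan(traceId, id, spanId, name);
    }

    // Every started span must eventually be stopped; stopping twice is a no-op.
    void stop() {
        if (endNanos < 0) {
            endNanos = System.nanoTime();
        }
    }

    boolean isRunning() { return endNanos < 0; }

    boolean isRoot() { return parentId == null; }

    // Renders an ID as lower-case hex, the form used in the log samples.
    static String toHex(long id) { return Long.toHexString(id); }

    public static void main(String[] args) {
        SimpleSpan root = SimpleSpan.root("home");
        SimpleSpan child = root.child("call-service2");
        try {
            // ... the unit of work being timed ...
        } finally {
            child.stop();
            root.stop();
        }
        System.out.println("trace=" + toHex(root.traceId) + " rootSpan=" + toHex(root.spanId)
                + " childSpan=" + toHex(child.spanId));
    }
}
```

Stopping the spans in a `finally` block is one way to honor the rule that a created span must be stopped at some point, even when the work throws.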

Trace: A set of spans forming a tree-like structure. For example, if you are running a distributed big-data store, a trace might be formed by a put request.

Annotation: Used to record the existence of an event in time. Some of the core annotations used to define the start and stop of a request are:

-

cs - Client Sent - The client has made a request. This annotation depicts the start of the span.

-

sr - Server Received - The server side got the request and will start processing it. Subtracting the cs timestamp from this timestamp gives the network latency.

-

ss - Server Sent - Annotated upon completion of request processing (when the response got sent back to the client). Subtracting the sr timestamp from this timestamp gives the time needed by the server side to process the request.

-

cr - Client Received - Signifies the end of the span. The client has successfully received the response from the server side. Subtracting the cs timestamp from this timestamp gives the whole time needed by the client to receive the response from the server.
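The subtractions described for the four core annotations can be written out as a small sketch. `CoreAnnotationTimings` is a hypothetical helper, not a Sleuth or Zipkin class, and the timestamps in `main` are made-up millisecond values. The network latency figure is only meaningful if the client and server clocks are reasonably well synchronized.

```java
// Hypothetical helper for illustration; not a Sleuth or Zipkin class.
final class CoreAnnotationTimings {
    private final long cs; // Client Sent timestamp
    private final long sr; // Server Received timestamp
    private final long ss; // Server Sent timestamp
    private final long cr; // Client Received timestamp

    CoreAnnotationTimings(long cs, long sr, long ss, long cr) {
        this.cs = cs;
        this.sr = sr;
        this.ss = ss;
        this.cr = cr;
    }

    // sr - cs: time the request spent on the network.
    long networkLatency() { return sr - cs; }

    // ss - sr: time the server needed to process the request.
    long serverProcessingTime() { return ss - sr; }

    // cr - cs: whole time the client waited for the response.
    long totalClientTime() { return cr - cs; }

    public static void main(String[] args) {
        // Made-up timestamps in milliseconds.
        CoreAnnotationTimings t = new CoreAnnotationTimings(1_000, 1_020, 1_095, 1_110);
        System.out.println("network latency:   " + t.networkLatency() + " ms");
        System.out.println("server processing: " + t.serverProcessingTime() + " ms");
        System.out.println("total client time: " + t.totalClientTime() + " ms");
    }
}
```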

Visualization of what Span and Trace look like in a system, together with the Zipkin annotations:

Each color of a note signifies a span (7 spans - from A to G). If you have such information in the note:

Trace Id = X

Span Id = D

Client Sent

That means that the current span has Trace-Id set to X and Span-Id set to D. It has also emitted the Client Sent event.

This is what the visualization of the parent / child relationship of spans would look like:

In the following sections the example from the image above will be taken into consideration.

Altogether there are 10 spans. If you go to traces in Zipkin you will see this number:

However if you pick a particular trace then you will see 7 spans:

Note: When picking a particular trace you will see merged spans. That means that if there were 2 spans sent to Zipkin with Server Received and Server Sent / Client Received and Client Sent annotations then they will be presented as a single span.

The image depicting Span and Trace shows 20 colorful labels. How is it that Zipkin receives only 10 spans?

-

2 span A labels signify span started and closed. Upon closing a single span is sent to Zipkin.

-

4 span B labels are in fact a single span with 4 annotations. However this span is composed of two separate instances. One sent from service 1 and one from service 2. So in fact two span instances will be sent to Zipkin and merged there.

-

2 span C labels signify span started and closed. Upon closing a single span is sent to Zipkin.

-

4 span D labels are in fact a single span with 4 annotations. However this span is composed of two separate instances. One sent from service 2 and one from service 3. So in fact two span instances will be sent to Zipkin and merged there.

-

2 span E labels signify span started and closed. Upon closing a single span is sent to Zipkin.

-

4 span F labels are in fact a single span with 4 annotations. However this span is composed of two separate instances. One sent from service 2 and one from service 4. So in fact two span instances will be sent to Zipkin and merged there.

-

2 span G labels signify span started and closed. Upon closing a single span is sent to Zipkin.

So 1 span from A, 2 spans from B, 1 span from C, 2 spans from D, 1 span from E, 2 spans from F and 1 from G. Altogether 10 spans.
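The arithmetic above (10 span instances reported, 7 spans shown for the trace) can be reproduced with a short sketch that groups reported instances by span ID. `SpanMergeCount` is a hypothetical name, and grouping by ID is only an approximation of the merge that Zipkin performs.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of counting reported versus merged spans.
final class SpanMergeCount {

    // Groups reported span instances by span ID and counts the instances per ID.
    static Map<String, Integer> mergeBySpanId(List<String> reportedSpanIds) {
        Map<String, Integer> merged = new TreeMap<>();
        for (String id : reportedSpanIds) {
            merged.merge(id, 1, Integer::sum);
        }
        return merged;
    }

    public static void main(String[] args) {
        // One entry per span instance sent to Zipkin: B, D and F are each
        // reported twice (once by the calling service, once by the called one).
        List<String> reported = Arrays.asList("A", "B", "B", "C", "D", "D", "E", "F", "F", "G");
        Map<String, Integer> merged = mergeBySpanId(reported);
        System.out.println("instances reported: " + reported.size());
        System.out.println("spans after merge:  " + merged.size());
        System.out.println("instances per span: " + merged);
    }
}
```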

The dependency graph in Zipkin would look like this:

When grepping the logs of those four applications by trace id equal to e.g. 2485ec27856c56f4 one would get the following:

service1.log:2016-02-26 11:15:47.561 INFO [service1,2485ec27856c56f4,2485ec27856c56f4,true] 68058 --- [nio-8081-exec-1] i.s.c.sleuth.docs.service1.Application : Hello from service1. Calling service2

service2.log:2016-02-26 11:15:47.710 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Hello from service2. Calling service3 and then service4

service3.log:2016-02-26 11:15:47.895 INFO [service3,2485ec27856c56f4,1210be13194bfe5,true] 68060 --- [nio-8083-exec-1] i.s.c.sleuth.docs.service3.Application : Hello from service3

service2.log:2016-02-26 11:15:47.924 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Got response from service3 [Hello from service3]

service4.log:2016-02-26 11:15:48.134 INFO [service4,2485ec27856c56f4,1b1845262ffba49d,true] 68061 --- [nio-8084-exec-1] i.s.c.sleuth.docs.service4.Application : Hello from service4

service2.log:2016-02-26 11:15:48.156 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Got response from service4 [Hello from service4]

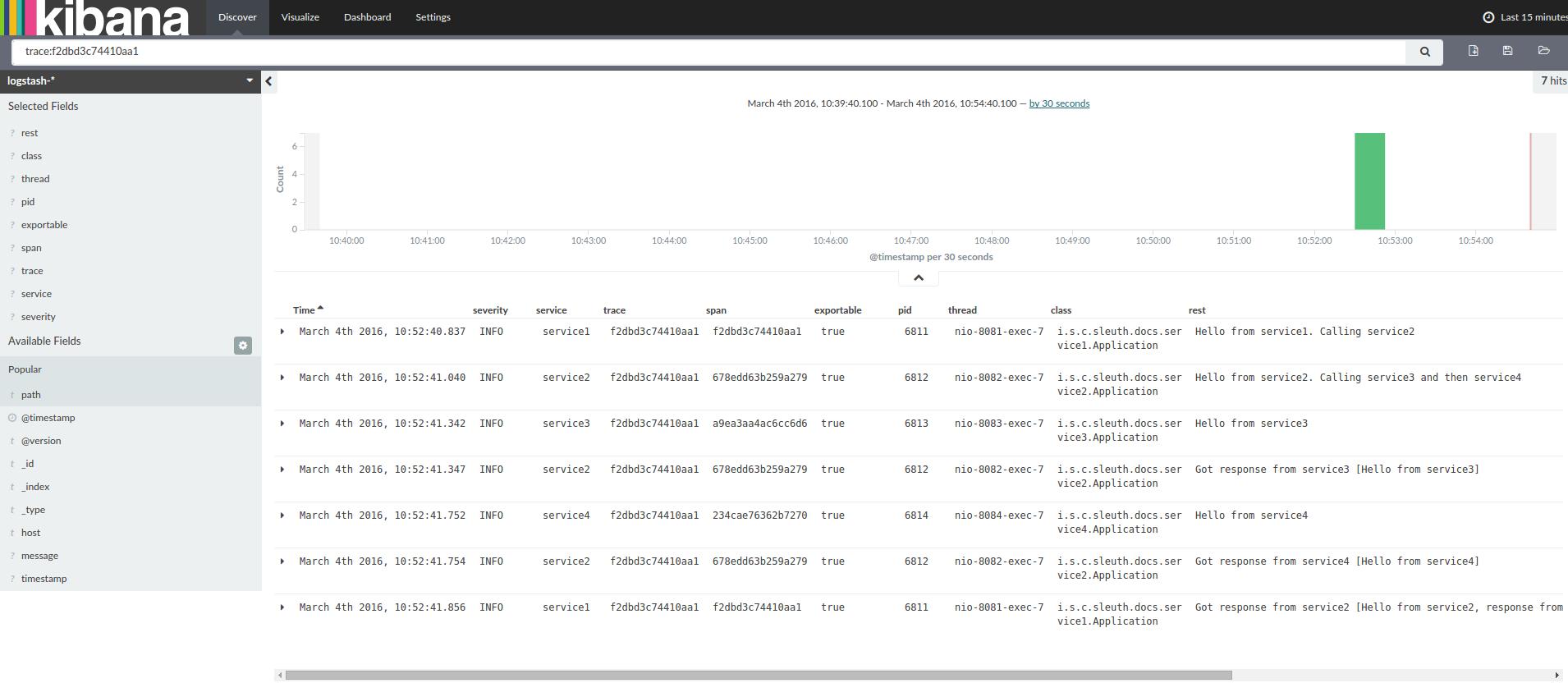

service1.log:2016-02-26 11:15:48.182 INFO [service1,2485ec27856c56f4,2485ec27856c56f4,true] 68058 --- [nio-8081-exec-1] i.s.c.sleuth.docs.service1.Application : Got response from service2 [Hello from service2, response from service3 [Hello from service3] and from service4 [Hello from service4]]

If you’re using a log aggregating tool like Kibana, Splunk etc. you can order the events that took place. An example of Kibana would look like this:

If you want to use Logstash here is the Grok pattern for Logstash:

filter {

# pattern matching logback pattern

grok {

match => { "message" => "%{TIMESTAMP_ISO8601:timestamp}\s+%{LOGLEVEL:severity}\s+\[%{DATA:service},%{DATA:trace},%{DATA:span},%{DATA:exportable}\]\s+%{DATA:pid}---\s+\[%{DATA:thread}\]\s+%{DATA:class}\s+:\s+%{GREEDYDATA:rest}" }

}

}

Note: If you want to use Grok together with the logs from Cloud Foundry you have to use this pattern:

filter {

# pattern matching logback pattern

grok {

match => { "message" => "(?m)OUT\s+%{TIMESTAMP_ISO8601:timestamp}\s+%{LOGLEVEL:severity}\s+\[%{DATA:service},%{DATA:trace},%{DATA:span},%{DATA:exportable}\]\s+%{DATA:pid}---\s+\[%{DATA:thread}\]\s+%{DATA:class}\s+:\s+%{GREEDYDATA:rest}" }

}

}

Often you do not want to store your logs in a text file but in a JSON file that Logstash can immediately pick up. To do that you have to do the following (for readability we’re passing the dependencies in the groupId:artifactId:version notation).

Dependencies setup

-

Ensure that Logback is on the classpath (ch.qos.logback:logback-core)

-

Add Logstash Logback encoder - example for version 4.6: net.logstash.logback:logstash-logback-encoder:4.6

Logback setup

Below you can find an example of a Logback configuration (file named logback-spring.xml) that:

-

logs information from the application in a JSON format to a build/${spring.application.name}.json file

-

has commented out two additional appenders - console and standard log file

-

has the same logging pattern as the one presented in the previous section

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<include resource="org/springframework/boot/logging/logback/defaults.xml"/>

<springProperty scope="context" name="springAppName" source="spring.application.name"/>

<!-- Example for logging into the build folder of your project -->

<property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}"/>

<property name="CONSOLE_LOG_PATTERN"

value="%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr([${springAppName:-},%X{X-B3-TraceId:-},%X{X-B3-SpanId:-},%X{X-Span-Export:-}]){yellow} %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>

<!-- Appender to log to console -->

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<!-- Minimum logging level to be presented in the console logs-->

<level>INFO</level>

</filter>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file -->

<appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file in a JSON format -->

<appender name="logstash" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}.json</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">

<providers>

<timestamp>

<timeZone>UTC</timeZone>

</timestamp>

<pattern>

<pattern>

{

"severity": "%level",

"service": "${springAppName:-}",

"trace": "%X{X-B3-TraceId:-}",

"span": "%X{X-B3-SpanId:-}",

"exportable": "%X{X-Span-Export:-}",

"pid": "${PID:-}",

"thread": "%thread",

"class": "%logger{40}",

"rest": "%message"

}

</pattern>

</pattern>

</providers>

</encoder>

</appender>

<root level="INFO">

<!--<appender-ref ref="console"/>-->

<appender-ref ref="logstash"/>

<!--<appender-ref ref="flatfile"/>-->

</root>

</configuration>

Note: If you’re using a custom logback-spring.xml then you have to pass the spring.application.name in the bootstrap instead of the application property file. Otherwise your custom logback file won’t read the property properly.

If you only want to use Spring Cloud Sleuth, without the Zipkin integration, just add the spring-cloud-starter-sleuth module to your project.

<dependencyManagement> (1)

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Brixton.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<dependency> (2)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sleuth</artifactId>

</dependency>

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-starter-sleuth

dependencyManagement { (1)

imports {

mavenBom "org.springframework.cloud:spring-cloud-dependencies:Brixton.RELEASE"

}

}

dependencies { (2)

compile "org.springframework.cloud:spring-cloud-starter-sleuth"

}

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-starter-sleuth

If you want both Sleuth and Zipkin just add the spring-cloud-starter-zipkin dependency.

<dependencyManagement> (1)

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Brixton.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<dependency> (2)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-zipkin</artifactId>

</dependency>

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-starter-zipkin

dependencyManagement { (1)

imports {

mavenBom "org.springframework.cloud:spring-cloud-dependencies:Brixton.RELEASE"

}

}

dependencies { (2)

compile "org.springframework.cloud:spring-cloud-starter-zipkin"

}

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-starter-zipkin

If you want Sleuth to send spans to Zipkin via Spring Cloud Stream, just add the spring-cloud-sleuth-stream dependency.

<dependencyManagement> (1)

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Brixton.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<dependency> (2)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-sleuth-stream</artifactId>

</dependency>

<dependency> (3)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sleuth</artifactId>

</dependency>

<!-- EXAMPLE FOR RABBIT BINDING -->

<dependency> (4)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-stream-binder-rabbit</artifactId>

</dependency>

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-sleuth-stream

(3) Add the dependency to spring-cloud-starter-sleuth - that way all of its dependencies will be downloaded

(4) Add a binder (e.g. Rabbit binder) to tell Spring Cloud Stream what it should bind to

dependencyManagement { (1)

imports {

mavenBom "org.springframework.cloud:spring-cloud-dependencies:Brixton.RELEASE"

}

}

dependencies {

compile "org.springframework.cloud:spring-cloud-sleuth-stream" (2)

compile "org.springframework.cloud:spring-cloud-starter-sleuth" (3)

// Example for Rabbit binding

compile "org.springframework.cloud:spring-cloud-stream-binder-rabbit" (4)

}

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-sleuth-stream

(3) Add the dependency to spring-cloud-starter-sleuth - that way all of its dependencies will be downloaded

(4) Add a binder (e.g. Rabbit binder) to tell Spring Cloud Stream what it should bind to

If you want to start a Spring Cloud Sleuth Stream Zipkin collector just add the spring-cloud-sleuth-zipkin-stream dependency:

<dependencyManagement> (1)

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Brixton.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<dependency> (2)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-sleuth-zipkin-stream</artifactId>

</dependency>

<dependency> (3)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sleuth</artifactId>

</dependency>

<!-- EXAMPLE FOR RABBIT BINDING -->

<dependency> (4)

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-stream-binder-rabbit</artifactId>

</dependency>

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-sleuth-zipkin-stream

(3) Add the dependency to spring-cloud-starter-sleuth - that way all of its dependencies will be downloaded

(4) Add a binder (e.g. Rabbit binder) to tell Spring Cloud Stream what it should bind to

dependencyManagement { (1)

imports {

mavenBom "org.springframework.cloud:spring-cloud-dependencies:Brixton.RELEASE"

}

}

dependencies {

compile "org.springframework.cloud:spring-cloud-sleuth-zipkin-stream" (2)

compile "org.springframework.cloud:spring-cloud-starter-sleuth" (3)

// Example for Rabbit binding

compile "org.springframework.cloud:spring-cloud-stream-binder-rabbit" (4)

}

(1) To avoid picking versions yourself, add the dependency management via the Spring BOM

(2) Add the dependency to spring-cloud-sleuth-zipkin-stream

(3) Add the dependency to spring-cloud-starter-sleuth - that way all of its dependencies will be downloaded

(4) Add a binder (e.g. Rabbit binder) to tell Spring Cloud Stream what it should bind to

Then annotate your main class with the @EnableZipkinStreamServer annotation:

package example;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.cloud.sleuth.zipkin.stream.EnableZipkinStreamServer;

@SpringBootApplication

@EnableZipkinStreamServer

public class ZipkinStreamServerApplication {

public static void main(String[] args) throws Exception {

SpringApplication.run(ZipkinStreamServerApplication.class, args);

}

}

-

Adds trace and span ids to the Slf4J MDC, so you can extract all the logs from a given trace or span in a log aggregator. Example logs:

2016-02-02 15:30:57.902 INFO [bar,6bfd228dc00d216b,6bfd228dc00d216b,false] 23030 --- [nio-8081-exec-3] ...
2016-02-02 15:30:58.372 ERROR [bar,6bfd228dc00d216b,6bfd228dc00d216b,false] 23030 --- [nio-8081-exec-3] ...
2016-02-02 15:31:01.936 INFO [bar,46ab0d418373cbc9,46ab0d418373cbc9,false] 23030 --- [nio-8081-exec-4] ...

Notice the [appname,traceId,spanId,exportable] entries from the MDC:

-

spanId - the id of a specific operation that took place

-

appname - the name of the application that logged the span

-

traceId - the id of the latency graph that contains the span

-

exportable - whether the log should be exported to Zipkin or not. When would you like the span not to be exportable? In the case in which you want to wrap some operation in a Span and have it written to the logs only.

-

Provides an abstraction over common distributed tracing data models: traces, spans (forming a DAG), annotations, key-value annotations. Loosely based on HTrace, but Zipkin (Dapper) compatible.

-

Sleuth records timing information to aid in latency analysis. Using Sleuth, you can pinpoint causes of latency in your applications. Sleuth is written to not log too much, and to not cause your production application to crash.

-

propagates structural data about your call-graph in-band, and the rest out-of-band.

-

includes opinionated instrumentation of layers such as HTTP

-

includes sampling policy to manage volume

-

can report to a Zipkin system for query and visualization

-

Instruments common ingress and egress points from Spring applications (servlet filter, async endpoints, rest template, scheduled actions, message channels, zuul filters, feign client).

-

Sleuth includes default logic to join a trace across http or messaging boundaries. For example, http propagation works via Zipkin-compatible request headers. This propagation logic is defined and customized via SpanInjector and SpanExtractor implementations.

-

Provides simple metrics of accepted / dropped spans.

-

If spring-cloud-sleuth-zipkin is on the classpath then the app will generate and collect Zipkin-compatible traces. By default it sends them via HTTP to a Zipkin server on localhost (port 9411). Configure the location of the service using spring.zipkin.baseUrl.

-

If spring-cloud-sleuth-stream is on the classpath then the app will generate and collect traces via Spring Cloud Stream. Your app automatically becomes a producer of tracer messages that are sent over your broker of choice (e.g. RabbitMQ, Apache Kafka, Redis).
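The header-based propagation mentioned in the list above can be illustrated with a plain-Java sketch. It does not implement Sleuth's SpanInjector or SpanExtractor interfaces; `B3HeaderSketch`, `inject` and `extract` are hypothetical names, and the sketch assumes the request headers carry the same X-B3-TraceId and X-B3-SpanId names that appear as MDC keys in the logging patterns in this document.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of trace propagation over request headers;
// it does not use Sleuth's SpanInjector / SpanExtractor types.
final class B3HeaderSketch {
    static final String TRACE_ID = "X-B3-TraceId";
    static final String SPAN_ID = "X-B3-SpanId";

    // Client side: copy the current trace context into the outgoing headers.
    static void inject(long traceId, long spanId, Map<String, String> headers) {
        headers.put(TRACE_ID, Long.toHexString(traceId));
        headers.put(SPAN_ID, Long.toHexString(spanId));
    }

    // Server side: returns {traceId, spanId}, or null when the request carries
    // no trace context and the receiver should start a new trace instead.
    static long[] extract(Map<String, String> headers) {
        String trace = headers.get(TRACE_ID);
        String span = headers.get(SPAN_ID);
        if (trace == null || span == null) {
            return null;
        }
        // IDs are unsigned 64-bit values rendered as hex.
        return new long[] {
            Long.parseUnsignedLong(trace, 16),
            Long.parseUnsignedLong(span, 16)
        };
    }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        inject(0x2485ec27856c56f4L, 0x9aa10ee6fbde75faL, headers);
        System.out.println("outgoing headers: " + headers);

        long[] context = extract(headers);
        System.out.println("joined trace " + Long.toHexString(context[0])
                + " from span " + Long.toHexString(context[1]));
    }
}
```

A real carrier would treat header names case-insensitively; the plain `Map` here keeps the sketch short.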

Important: If using Zipkin or Stream, configure the percentage of spans exported using spring.sleuth.sampler.percentage (default 0.1, i.e. 10%). Otherwise you might think that Sleuth is not working because it’s omitting some spans.
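To make the effect of a 0.1 sampling percentage concrete, here is a minimal sketch of a percentage-based sampling decision. It is not Sleuth's sampler implementation: `PercentageSamplerSketch`, its constructor arguments and the 100-slot decision table are assumptions chosen so that exactly the configured share of every 100 decisions is exported.

```java
import java.util.BitSet;
import java.util.Random;

// Hypothetical sampler for illustration; not Sleuth's own implementation.
final class PercentageSamplerSketch {
    private static final int SLOTS = 100;
    private final BitSet decisions = new BitSet(SLOTS);
    private int counter = 0;

    // percentage is a fraction between 0.0 and 1.0 (0.1 means 10%).
    PercentageSamplerSketch(double percentage, long seed) {
        if (percentage < 0.0 || percentage > 1.0) {
            throw new IllegalArgumentException("percentage must be between 0.0 and 1.0");
        }
        int toSample = (int) Math.round(percentage * SLOTS);
        Random random = new Random(seed);
        // Mark randomly chosen slots as "export" until enough are set.
        while (decisions.cardinality() < toSample) {
            decisions.set(random.nextInt(SLOTS));
        }
    }

    // Cycles through the decision table, one slot per span.
    synchronized boolean isSampled() {
        boolean sampled = decisions.get(counter);
        counter = (counter + 1) % SLOTS;
        return sampled;
    }

    public static void main(String[] args) {
        PercentageSamplerSketch sampler = new PercentageSamplerSketch(0.1, 42L);
        int exported = 0;
        for (int i = 0; i < 1000; i++) {
            if (sampler.isSampled()) {
                exported++;
            }
        }
        System.out.println(exported + " of 1000 spans would be exported");
    }
}
```

With the default percentage most spans are not exported, which is why a lightly used application can appear to send nothing to Zipkin.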

Note: The SLF4J MDC is always set and logback users will immediately see the trace and span ids in logs per the example above. Other logging systems have to configure their own formatter to get the same result. The default is logging.pattern.level set to %clr(%5p) %clr([${spring.application.name:},%X{X-B3-TraceId:-},%X{X-B3-SpanId:-},%X{X-Span-Export:-}]){yellow} (this is a Spring Boot feature for logback users). This means that if you’re not using SLF4J this pattern WILL NOT be automatically applied.
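The bracketed [appname,traceId,spanId,exportable] prefix produced by this pattern can be read back out of a log line with a regular expression, which is handy for ad hoc tooling when no Logstash pipeline is available. The sketch below is an assumption-laden helper, not part of Sleuth: `TracePrefixParser` is a hypothetical name, and it expects the first four-field bracket on the line to be the trace prefix.

```java
import java.util.Arrays;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of Sleuth.
final class TracePrefixParser {
    // Four comma-separated fields inside square brackets. The thread name
    // bracket later on the line contains no commas, so it does not match.
    private static final Pattern PREFIX =
            Pattern.compile("\\[([^,\\]]*),([^,\\]]*),([^,\\]]*),([^,\\]]*)\\]");

    // Returns {appname, traceId, spanId, exportable}, or null if the line
    // does not contain the prefix.
    static String[] parse(String logLine) {
        Matcher matcher = PREFIX.matcher(logLine);
        if (!matcher.find()) {
            return null;
        }
        return new String[] {
            matcher.group(1), matcher.group(2), matcher.group(3), matcher.group(4)
        };
    }

    public static void main(String[] args) {
        String line = "2016-02-02 15:30:57.902 INFO [bar,6bfd228dc00d216b,6bfd228dc00d216b,false] "
                + "23030 --- [nio-8081-exec-3] ...";
        System.out.println(Arrays.toString(parse(line)));
    }
}
```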

To build the source you will need to install JDK 1.7.

Spring Cloud uses Maven for most build-related activities, and you should be able to get off the ground quite quickly by cloning the project you are interested in and typing

$ ./mvnw install

Note: You can also install Maven (>=3.3.3) yourself and run the mvn command in place of ./mvnw in the examples below. If you do that you also might need to add -P spring if your local Maven settings do not contain repository declarations for spring pre-release artifacts.

Note: Be aware that you might need to increase the amount of memory available to Maven by setting a MAVEN_OPTS environment variable with a value like -Xmx512m -XX:MaxPermSize=128m. We try to cover this in the .mvn configuration, so if you find you have to do it to make a build succeed, please raise a ticket to get the settings added to source control.

For hints on how to build the project look in .travis.yml if there is one. There should be a "script" and maybe "install" command. Also look at the "services" section to see if any services need to be running locally (e.g. mongo or rabbit). Ignore the git-related bits that you might find in "before_install" since they’re related to setting git credentials and you already have those.

The projects that require middleware generally include a docker-compose.yml, so consider using Docker Compose to run the middleware servers in Docker containers. See the README in the scripts demo repository for specific instructions about the common cases of mongo, rabbit and redis.

Note: If all else fails, build with the command from .travis.yml (usually ./mvnw install).

The spring-cloud-build module has a "docs" profile, and if you switch that on it will try to build asciidoc sources from src/main/asciidoc. As part of that process it will look for a README.adoc and process it by loading all the includes, but not parsing or rendering it, just copying it to ${main.basedir} (defaults to ${basedir}, i.e. the root of the project). If there are any changes in the README it will then show up after a Maven build as a modified file in the correct place. Just commit it and push the change.

If you don’t have an IDE preference we would recommend that you use Spring Tool Suite or Eclipse when working with the code. We use the m2eclipse Eclipse plugin for Maven support. Other IDEs and tools should also work without issue as long as they use Maven 3.3.3 or better.

We recommend the m2eclipse Eclipse plugin when working with Eclipse. If you don’t already have m2eclipse installed it is available from the "eclipse marketplace".

Note: Older versions of m2e do not support Maven 3.3, so once the projects are imported into Eclipse you will also need to tell m2eclipse to use the right profile for the projects. If you see many different errors related to the POMs in the projects, check that you have an up to date installation. If you can’t upgrade m2e, add the "spring" profile to your settings.xml. Alternatively you can copy the repository settings from the "spring" profile of the parent pom into your settings.xml.

If you prefer not to use m2eclipse you can generate eclipse project metadata using the following command:

$ ./mvnw eclipse:eclipse

The generated eclipse projects can be imported by selecting import existing projects from the file menu.

Important: There are 2 different language levels used in Spring Cloud Sleuth. Java 1.7 is used for main sources and Java 1.8 is used for tests. When importing your project to an IDE please activate the ide Maven profile to turn on Java 1.8 for both main and test sources. Of course remember that you MUST NOT use Java 1.8 features in the main sources. If you do so your app will break during the Maven build.

Spring Cloud is released under the non-restrictive Apache 2.0 license, and follows a very standard GitHub development process, using the GitHub tracker for issues and merging pull requests into master. If you want to contribute even something trivial please do not hesitate, but follow the guidelines below.

Before we accept a non-trivial patch or pull request we will need you to sign the Contributor License Agreement. Signing the contributor’s agreement does not grant anyone commit rights to the main repository, but it does mean that we can accept your contributions, and you will get an author credit if we do. Active contributors might be asked to join the core team, and given the ability to merge pull requests.

This project adheres to the Contributor Covenant code of conduct. By participating, you are expected to uphold this code. Please report unacceptable behavior to [email protected].

None of these is essential for a pull request, but they will all help. They can also be added after the original pull request but before a merge.

-

Use the Spring Framework code format conventions. If you use Eclipse you can import formatter settings using the eclipse-code-formatter.xml file from the Spring Cloud Build project. If using IntelliJ, you can use the Eclipse Code Formatter Plugin to import the same file.

-

Make sure all new .java files have a simple Javadoc class comment with at least an @author tag identifying you, and preferably at least a paragraph on what the class is for.

-

Add the ASF license header comment to all new .java files (copy from existing files in the project).

-

Add yourself as an @author to the .java files that you modify substantially (more than cosmetic changes).

-

Add some Javadocs and, if you change the namespace, some XSD doc elements.

-

A few unit tests would help a lot as well; someone has to do it.

-

If no-one else is using your branch, please rebase it against the current master (or other target branch in the main project).

-

When writing a commit message please follow these conventions. If you are fixing an existing issue please add Fixes gh-XXXX at the end of the commit message (where XXXX is the issue number).