diff --git a/packages/backend/src/assets/ai.json b/packages/backend/src/assets/ai.json

index 5dcfb19ae..fbe8d19c3 100644

--- a/packages/backend/src/assets/ai.json

+++ b/packages/backend/src/assets/ai.json

@@ -5,7 +5,7 @@

"description" : "This is a Streamlit chat demo application.",

"name" : "ChatBot",

"repository": "https://github.com/containers/ai-lab-recipes",

- "ref": "dd4cf14018ee0da4e822e85aadd6909dc4807d1e",

+ "ref": "901677832328b323af83972e2243b7ce90dc133e",

"icon": "natural-language-processing",

"categories": [

"natural-language-processing"

@@ -29,7 +29,7 @@

"description" : "This is a Streamlit demo application for summarizing text.",

"name" : "Summarizer",

"repository": "https://github.com/containers/ai-lab-recipes",

- "ref": "dd4cf14018ee0da4e822e85aadd6909dc4807d1e",

+ "ref": "901677832328b323af83972e2243b7ce90dc133e",

"icon": "natural-language-processing",

"categories": [

"natural-language-processing"

@@ -53,7 +53,7 @@

"description" : "This is a code-generation demo application.",

"name" : "Code Generation",

"repository": "https://github.com/containers/ai-lab-recipes",

- "ref": "dd4cf14018ee0da4e822e85aadd6909dc4807d1e",

+ "ref": "901677832328b323af83972e2243b7ce90dc133e",

"icon": "generator",

"categories": [

"natural-language-processing"

@@ -73,6 +73,38 @@

"hf.llmware.dragon-mistral-7b-q4_k_m",

"hf.MaziyarPanahi.MixTAO-7Bx2-MoE-Instruct-v7.0.Q4_K_M"

]

+ },

+ {

+ "id": "audio_to_text",

+ "description" : "This is an audio to text demo application.",

+ "name" : "Audio to Text",

+ "repository": "https://github.com/containers/ai-lab-recipes",

+ "ref": "901677832328b323af83972e2243b7ce90dc133e",

+ "icon": "generator",

+ "categories": [

+ "audio"

+ ],

+ "basedir": "recipes/audio/audio_to_text",

+ "readme": "# Audio to Text Application\n\n This sample application is a simple recipe to transcribe an audio file.\n It gives developers a starting point for building their own custom LLM-enabled\n audio-to-text applications. It consists of two main components: the Model Service and the AI Application.\n\n There are a few options today for local Model Serving, but this recipe will use [`whisper-cpp`](https://github.com/ggerganov/whisper.cpp.git)\n and its included Model Service. There is a Containerfile provided that can be used to build this Model Service within the repo,\n [`model_servers/whispercpp/Containerfile`](/model_servers/whispercpp/Containerfile).\n\n Our AI Application will connect to our Model Service via its API endpoint.\n\n# Build the Application\n\nIn order to build this application we will need a model, a Model Service and an AI Application. \n\n* [Download a model](#download-a-model)\n* [Build the Model Service](#build-the-model-service)\n* [Deploy the Model Service](#deploy-the-model-service)\n* [Build the AI Application](#build-the-ai-application)\n* [Deploy the AI Application](#deploy-the-ai-application)\n* [Interact with the AI Application](#interact-with-the-ai-application)\n    * [Input audio files](#input-audio-files)\n\n### Download a model\n\nIf you are just getting started, we recommend using [ggerganov/whisper.cpp](https://huggingface.co/ggerganov/whisper.cpp).\nThis is a well-performing model with an MIT license.\nIt's simple to download a pre-converted whisper model from [huggingface.co](https://huggingface.co)\nhere: https://huggingface.co/ggerganov/whisper.cpp. There are a number of options, but we recommend starting with `ggml-small.bin`. \n\nThe recommended model can be downloaded using the code snippet below:\n\n```bash\ncd models\nwget https://huggingface.co/ggerganov/whisper.cpp/resolve/main/ggml-small.bin \ncd ../\n```\n\n_A full list of supported open models is forthcoming._ \n\n\n### Build the Model Service\n\nThe Model Service can be built from the root directory with the following code snippet:\n\n```bash\ncd model_servers/whispercpp\npodman build -t whispercppserver .\n```\n\n### Deploy the Model Service\n\nThe local Model Service relies on a volume mount to the localhost to access the model files. You can start your local Model Service using the following Podman command:\n```\npodman run --rm -it \\\n -p 8001:8001 \\\n -v Local/path/to/locallm/models:/locallm/models \\\n -e MODEL_PATH=models/ \\\n -e HOST=0.0.0.0 \\\n -e PORT=8001 \\\n whispercppserver\n```\n\n### Build the AI Application\n\nNow that the Model Service is running we want to build and deploy our AI Application. Use the provided Containerfile to build the AI Application\nimage from the `audio-to-text/` directory.\n\n```bash\ncd audio-to-text\npodman build -t audio-to-text . -f builds/Containerfile \n```\n\n### Deploy the AI Application\n\nMake sure the Model Service is up and running before starting this container image.\nWhen starting the AI Application container image we need to direct it to the correct `MODEL_SERVICE_ENDPOINT`.\nThis could be any appropriately hosted Model Service (running locally or in the cloud) using a compatible API.\nThe following Podman command can be used to run your AI Application:\n\n```bash\npodman run --rm -it -p 8501:8501 -e MODEL_SERVICE_ENDPOINT=http://0.0.0.0:8001/inference audio-to-text \n```\n\n### Interact with the AI Application\n\nOnce the streamlit application is up and running, you should be able to access it at `http://localhost:8501`.\nFrom here, you can upload audio files from your local machine and transcribe them.\n\nBy using this recipe and getting this starting point established,\nusers should now have an easier time customizing and building their own LLM-enabled applications. \n\n#### Input audio files\n\nWhisper.cpp requires 16-bit WAV audio files as input.\nTo convert your input audio files to 16-bit WAV format you can use `ffmpeg` like this:\n\n```bash\nffmpeg -i <input-audio-file> -ar 16000 -ac 1 -c:a pcm_s16le <output-audio-file>\n```\n",

+ "models": [

+ "hf.ggerganov.whisper.cpp"

+ ]

+ },

+ {

+ "id": "object_detection",

+ "description" : "This is an object detection demo application.",

+ "name" : "Object Detection",

+ "repository": "https://github.com/containers/ai-lab-recipes",

+ "ref": "901677832328b323af83972e2243b7ce90dc133e",

+ "icon": "generator",

+ "categories": [

+ "computer-vision"

+ ],

+ "basedir": "recipes/computer_vision/object_detection",

+ "readme": "# Object Detection\n\nThis recipe provides an example for running an object detection model service and its associated client locally. \n\n## Build and run the model service\n\n```bash\ncd object_detection/model_server\npodman build -t object_detection_service .\n```\n\n```bash\npodman run -it --rm -p 8000:8000 object_detection_service\n```\n\nBy default the model service will use [`facebook/detr-resnet-101`](https://huggingface.co/facebook/detr-resnet-101), which has an apache-2.0 license. The model is relatively small, but it will be downloaded fresh each time the model server is started unless a local model is provided (see additional instructions below). \n\n\n## Use a different or local model\n\nIf you'd like to use a different model hosted on huggingface, simply use the environment variable `MODEL_PATH` and set it to the correct `org/model` path on [huggingface.co](https://huggingface.co/) when starting your container. \n\nIf you'd like to download models locally so that they are not pulled each time the container restarts, you can use the following python snippet to download a model to your `models/` directory. \n\n```python\nfrom huggingface_hub import snapshot_download\n\nsnapshot_download(repo_id=\"facebook/detr-resnet-101\",\n revision=\"no_timm\",\n local_dir=\"/locallm/models/vision/object_detection/facebook/detr-resnet-101\",\n local_dir_use_symlinks=False)\n\n```\n\nWhen using a model other than the default, you will need to set the `MODEL_PATH` environment variable. Here is an example of running the model service with a local model:\n\n```bash\n podman run -it --rm -p 8000:8000 -v /locallm/models/vision/:/locallm/models -e MODEL_PATH=models/object_detection/facebook/detr-resnet-50/ object_detection_service\n```\n\n## Build and run the client application\n\n```bash\ncd object_detection/client\npodman build -t object_detection_client .\n```\n\n```bash\npodman run -p 8501:8501 -e MODEL_ENDPOINT=http://10.88.0.1:8000/detection object_detection_client\n```\n\nOnce the client is up and running, you should be able to access it at `http://localhost:8501`. From here you can upload images from your local machine and detect objects in them.\n",

+ "models": [

+ "hf.facebook.detr-resnet-101"

+ ]

}

],

"models": [

@@ -194,6 +226,29 @@

"license": "Apache-2.0",

"url": "https://huggingface.co/MaziyarPanahi/MixTAO-7Bx2-MoE-Instruct-v7.0-GGUF/resolve/main/MixTAO-7Bx2-MoE-Instruct-v7.0.Q4_K_M.gguf",

"memory": 7784628224

+ },

+ {

+ "id": "hf.ggerganov.whisper.cpp",

+ "name": "ggerganov/whisper.cpp",

+ "description": "# OpenAI's Whisper models converted to ggml format\n\n[Available models](https://huggingface.co/ggerganov/whisper.cpp/tree/main)\n",

+ "hw": "CPU",

+ "registry": "Hugging Face",

+ "license": "MIT",

+ "url": "https://huggingface.co/ggerganov/whisper.cpp/resolve/main/ggml-small.bin",

+ "memory": 487010000

+ },

+ {

+ "id": "hf.facebook.detr-resnet-101",

+ "name": "facebook/detr-resnet-101",

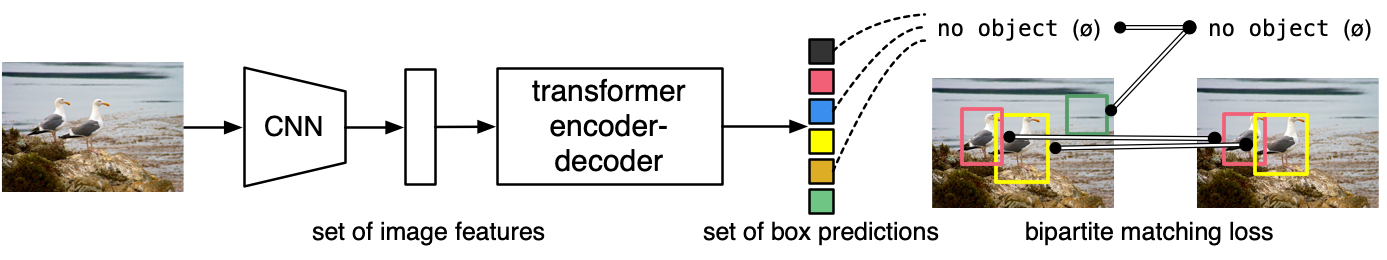

+ "description": "# DETR (End-to-End Object Detection) model with ResNet-101 backbone\n\nDEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr). \n\nDisclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.\n\n## Model description\n\nThe DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and a MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100. \n\nThe model is trained using a \"bipartite matching loss\": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a \"no object\" as class and \"no bounding box\" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.\n\n\n\n## Intended uses & limitations\n\nYou can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.",

+ "hw": "CPU",

+ "registry": "Hugging Face",

+ "license": "Apache-2.0",

+ "url": "https://huggingface.co/facebook/detr-resnet-101/resolve/no_timm/pytorch_model.bin",

+ "memory": 242980000,

+ "properties": {

+ "modelName": "facebook/detr-resnet-101"

+ }

}

],

"categories": [
