Jenkins Pipelines enable teams to automate repetitive tasks and ensure consistent, reliable software releases.

A Jenkins pipeline is defined in a file usually called Jenkinsfile, stored as part of the code repository.

In this file, you instruct Jenkins how to build, test, and deploy your application by specifying a series of stages, steps, and configurations.

There are two main types of syntax for defining Jenkins pipelines in a Jenkinsfile: Declarative Syntax and Scripted Syntax.

- Declarative syntax is more structured and easier to use. It relies on a predefined set of building blocks (a.k.a. directives) to define the pipeline's structure.

- Scripted syntax provides a more flexible and powerful way to define pipelines. It lets you use Groovy scripting to customize and control every aspect of the pipeline. Scripted pipelines won't be covered in this course.

The Jenkinsfile typically consists of multiple stages, each made up of steps that perform a specific task, such as building the code as a Docker image, running tests, or deploying the software to a Kubernetes cluster.

Let's recall the pipeline you created in the previous exercise (it builds a Docker image for the [NetflixFrontend][NetflixFrontend] app).

```groovy
// pipelines/build.Jenkinsfile
pipeline {
    agent any

    triggers {
        githubPush()
    }

    stages {
        stage('Build app container') {
            steps {
                sh '''
                    # your pipeline commands here....
                    # for example list the files in the pipeline workdir
                    ls

                    # build an image
                    docker build -t netflix-front .
                '''
            }
        }
    }
}
```

This Jenkinsfile is written in the Declarative Pipeline syntax. Let's break down each part of the code:

- `pipeline`: the outermost block that encapsulates the entire pipeline definition.

- `agent any`: specifies the agent on which the pipeline stages will run. The `any` keyword means the pipeline can run on any available agent.

- `stages`: contains the series of stages that make up the major steps of the pipeline.

- `stage('Build app container')`: defines a stage named "Build app container". Each stage represents a logical phase of your pipeline, such as building, testing, or deploying.

- `steps`: inside a stage block, contains the individual steps (tasks) to execute in that stage.

- `sh`: executes a shell script.

- `triggers`: specifies the conditions that trigger the pipeline. `githubPush()` triggers the pipeline whenever there is a push to the associated GitHub repository.

This is what happens when your pipeline is executed:

- Jenkins schedules the job on one of its available agents (also known as nodes).

- Jenkins creates a workspace directory on the agent's file system. This directory serves as the working area for the pipeline job.

- Jenkins checks out the source code into the workspace.

- Jenkins executes your pipeline script step-by-step.
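To see this context from inside a job, a minimal sketch like the one below could run in an `sh` step. `JOB_NAME`, `BUILD_NUMBER` and `WORKSPACE` are standard Jenkins environment variables, and `GIT_COMMIT` is set by the Git plugin when the job checks out from SCM; the `describe_build` function itself is a hypothetical helper.

```shell
# Hypothetical helper: print the build context Jenkins exposes to `sh` steps.
describe_build() {
  echo "job=${JOB_NAME:-unset}"
  echo "build=${BUILD_NUMBER:-unset}"
  echo "workspace=${WORKSPACE:-unset}"
  # `sh` steps start in the workspace, so this usually matches $WORKSPACE
  echo "cwd=$(pwd)"
  echo "commit=${GIT_COMMIT:-unset}"
}

describe_build
# List the checked-out sources in the current directory
ls -la
```

In a pipeline run, `workspace` and `cwd` should print the same path, and `ls -la` should list the files of your repository.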

To integrate with external systems, Jenkins needs the appropriate credentials: for example, credentials to push built Docker images to DockerHub, to provision or describe infrastructure in AWS, or to push to a GitHub repo.

Jenkins has a standard place to store credentials, called the System Credentials Provider.

Credentials stored under this provider are usually available at the system level and are not restricted to a specific pipeline, folder, or user.

Important

Jenkins, as your main CI/CD server, can potentially hold VERY sensitive credentials. Not everyone (myself included) is willing to expose high-value credentials to Jenkins, since the way Jenkins stores them may not meet your organization's security policies.

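Instead of using the System Credentials Provider, you can use another provider that connects Jenkins to an external secret store, e.g. the AWS Secrets Manager Credentials Provider or the Kubernetes Credentials Provider.

To store your container registry credentials in the System store: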

- In your Jenkins server main dashboard page, choose Manage Jenkins.

- Choose Credentials.

- Under Stores scoped to Jenkins, choose the System store (the standard provider discussed above).

- Under System, click the Global credentials (unrestricted) domain, then choose Add credentials.

- From the Kind field, choose the Username and password type.

- From the Scope field, choose the Global scope, since these credentials will be used from within a pipeline.

- Add the credentials themselves:

    - Your DockerHub username (or your AWS access key ID if using ECR)

    - Your DockerHub password or access token (or your AWS secret access key if using ECR)

- Provide a unique ID for the credentials (e.g., `dockerhub`). Pipelines refer to the credentials by this ID.

- Click Create to save the credentials.

Note

You might want to install the AWS Credentials Plugin, which adds support for storing and using AWS credentials in Jenkins.

Repeat the steps above to create GitHub credentials:

- The Kind must be Username and password.

- Set the Username to any value (it won't be used). The Password should be a GitHub Personal Access Token (classic) with the following scopes: `repo`, `read:user`, `user:email`, `write:repo_hook`. You can create one in GitHub under Settings > Developer settings > Personal access tokens.

The process of transforming the app source code into a runnable application is called a build. The output of the build process is usually known as an artifact.

In our case, the build artifacts are Docker images, ready to be deployed anywhere.

Note

There are many other build tools used in different programming languages and contexts, e.g. Maven, npm, and Gradle.

We now want to complete the `build.Jenkinsfile` pipeline so that every run of this job builds a new Docker image of the app and stores it in a container registry (either DockerHub or ECR).

Here is a skeleton for `build.Jenkinsfile`. Review it carefully and feel free to adapt it to your needs:

```groovy
pipeline {
    agent any

    triggers {
        githubPush() // trigger the pipeline upon a push event in GitHub
    }

    options {
        timeout(time: 10, unit: 'MINUTES') // abort the build if it runs longer than 10 minutes
        timestamps() // display timestamps in the console output
    }

    environment {
        // GIT_COMMIT = sh(script: 'git rev-parse --short HEAD', returnStdout: true).trim()
        // TIMESTAMP = new Date().format("yyyyMMdd-HHmmss")
        IMAGE_TAG = "v1.0.$BUILD_NUMBER"
        IMAGE_BASE_NAME = "netflix-app"

        DOCKER_CREDS = credentials('dockerhub')
        DOCKER_USERNAME = "${DOCKER_CREDS_USR}" // credentials() adds the _USR variable holding the username
        DOCKER_PASS = "${DOCKER_CREDS_PSW}" // credentials() adds the _PSW variable holding the password

        // Defined here rather than inside an sh step, so that later stages can use it too
        IMAGE_FULL_NAME = "${DOCKER_USERNAME}/${IMAGE_BASE_NAME}:${IMAGE_TAG}"
    }

    stages {
        stage('Docker setup') {
            steps {
                // --password-stdin keeps the password off the docker command line
                sh '''
                    echo "$DOCKER_PASS" | docker login -u "$DOCKER_USERNAME" --password-stdin
                '''
            }
        }

        stage('Build & Push') {
            steps {
                sh '''
                    docker build -t "$IMAGE_FULL_NAME" .
                    docker push "$IMAGE_FULL_NAME"
                '''
            }
        }
    }
}
```

Test your pipeline by committing and pushing a change, then check that a new Docker image is stored in your container registry.

- We used the `BUILD_NUMBER` environment variable to tag the images, but you can also use the `GIT_COMMIT` or `TIMESTAMP` variables for more meaningful tags. Either way, never use the `latest` tag: it is mutable, so it doesn't identify which build is actually deployed.

- We added some useful `options`.
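The tagging options above can be compared with a small shell sketch. It assumes the Jenkins-provided `BUILD_NUMBER` and `GIT_COMMIT` values are passed in as arguments; the `v1.0.` prefix matches the skeleton, while the function names are hypothetical. Note that the timestamp here uses UTC, unlike the commented-out Groovy line, which uses the agent's local time.

```shell
# Hypothetical helpers for composing image tags and full image names.

# Tag from the Jenkins build number, matching the skeleton: v1.0.<BUILD_NUMBER>
tag_from_build_number() {
  echo "v1.0.$1"
}

# Tag from the first 7 characters of a commit SHA (e.g. Jenkins' GIT_COMMIT)
tag_from_commit() {
  printf '%s\n' "$1" | cut -c1-7
}

# Tag from the current UTC time, e.g. 20240131-142500
tag_from_timestamp() {
  date -u +%Y%m%d-%H%M%S
}

# Compose <prefix>/<image>:<tag>, where <prefix> is your DockerHub username
# or, for ECR, <aws_account_id>.dkr.ecr.<region>.amazonaws.com.
# Refuses empty and "latest" tags.
image_full_name() {
  local prefix="$1" image="$2" tag="$3"
  if [ -z "$tag" ] || [ "$tag" = "latest" ]; then
    echo "refusing to use tag '${tag}'" >&2
    return 1
  fi
  echo "${prefix}/${image}:${tag}"
}
```

For example, `image_full_name alice netflix-app "$(tag_from_build_number 42)"` prints `alice/netflix-app:v1.0.42`. A commit-based tag ties each image to the exact source it was built from, while the build number only identifies the Jenkins run.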

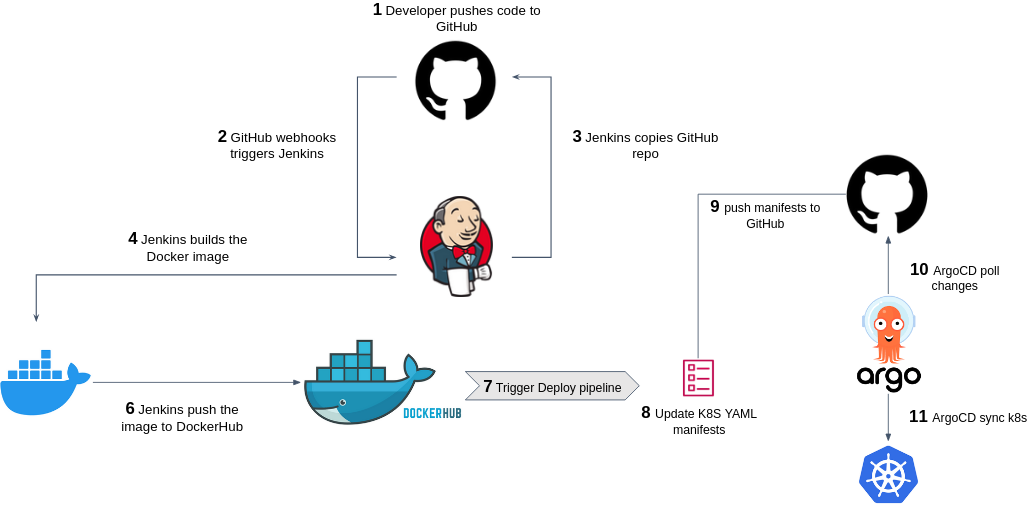

The Deploy pipeline deploys the new app version we've just built in the Build pipeline into your environment. There are many ways to implement deployment pipelines, depending on your system (e.g. Kubernetes, AWS Lambda, EC2 instances).

In our case, we want to deploy our newly created Docker image into our Kubernetes cluster using ArgoCD.

In the NetflixInfra repo (the dedicated repo you've created for the Kubernetes YAML manifests for the Netflix stack), create another Jenkinsfile under pipelines/deploy.Jenkinsfile, as follows:

```groovy
pipeline {
    agent any

    parameters {
        string(name: 'SERVICE_NAME', defaultValue: '', description: '')
        string(name: 'IMAGE_FULL_NAME_PARAM', defaultValue: '', description: '')
    }

    stages {
        stage('Deploy') {
            steps {
                /*
                Now your turn! implement the pipeline steps ...

                - `cd` into the directory corresponding to the SERVICE_NAME variable.
                - Change the YAML manifests according to the new $IMAGE_FULL_NAME_PARAM parameter.
                  You can do so using `yq` or `sed` command, by a simple Python script, or any other method.
                - Commit the changes, push them to GitHub.
                    * Setting global Git user.name and user.email in 'Manage Jenkins > System' is recommended.
                    * Setting Shell executable to `/bin/bash` in 'Manage Jenkins > System' is recommended.
                */
            }
        }
    }
}
```

Carefully review the pipeline and complete the step yourself.
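One possible shape for the Deploy step is sketched below in shell. It assumes each service directory (e.g. `NetflixFrontend/`) holds YAML manifests whose container image sits on a single `image:` line, uses GNU `sed` rather than `yq`, and assumes the branch is `main`; the function names are hypothetical. It also assumes `git push` can authenticate: the credentials configured for "Pipeline script from SCM" are used for the checkout only, so the push typically needs them bound again, e.g. with `withCredentials` and the Git plugin's `gitUsernamePassword` binding.

```shell
# Hypothetical sketch of the Deploy step (GNU sed assumed for `sed -i -E`).
# Jenkins exposes build parameters as environment variables, so
# SERVICE_NAME and IMAGE_FULL_NAME_PARAM are available to the shell.

# Replace the value of every `image:` field in the given YAML files,
# keeping the original indentation (and list dash, if any).
update_image() {
  local new_image="$1"
  shift
  sed -i -E "s|^([[:space:]]*-?[[:space:]]*image:).*|\1 ${new_image}|" "$@"
}

deploy() {
  cd "$SERVICE_NAME" || return 1
  update_image "$IMAGE_FULL_NAME_PARAM" ./*.yaml || return 1
  git add -A .
  git commit -m "Deploy ${SERVICE_NAME}: ${IMAGE_FULL_NAME_PARAM}" || return 1
  # Jenkins usually checks out a detached HEAD, so push it to the branch
  # explicitly; "main" is an assumption, use your repo's branch name.
  git push origin HEAD:main
}
```

If you paste this into an `sh '''...'''` block instead of keeping it in a script file, write the backreference as `\\1`, because Groovy processes backslash escapes inside that string.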

In the Jenkins dashboard, create another Jenkins Pipeline (e.g. named NetflixFrontendDeploy). Configure it similarly to the Build pipeline: choose Pipeline script from SCM, and specify the Git URL, the branch, the path to the Jenkinsfile, and the GitHub credentials you created (this pipeline has to push commits on your behalf).

Unlike the Build pipeline, the Deploy pipeline is not triggered automatically by a push event in GitHub (there is no `githubPush()` trigger). Instead, it is started with two parameters: `SERVICE_NAME`, the service to deploy, and `IMAGE_FULL_NAME_PARAM`, the new Docker image to deploy to your Kubernetes cluster.
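Because both parameters default to an empty string, a manual run without values would try to deploy nothing. A small guard like the one below (a hypothetical helper, not part of the skeleton) could run at the start of the Deploy step to fail fast on a missing service directory or an untagged or `latest`-tagged image.

```shell
# Hypothetical guard for the Deploy pipeline's parameters.
# Usage: validate_deploy_params "$SERVICE_NAME" "$IMAGE_FULL_NAME_PARAM"
validate_deploy_params() {
  local service="$1" image="$2"
  if [ -z "$service" ]; then
    echo "SERVICE_NAME is empty" >&2; return 1
  fi
  if [ ! -d "$service" ]; then
    echo "no directory named '$service' in the workspace" >&2; return 1
  fi
  if [ -z "$image" ]; then
    echo "IMAGE_FULL_NAME_PARAM is empty" >&2; return 1
  fi
  # Look for the tag only in the last path segment, so a registry port
  # such as localhost:5000/app is not mistaken for a tag.
  local last="${image##*/}"
  case "$last" in
    *:latest) echo "refusing to deploy the 'latest' tag" >&2; return 1 ;;
    *:?*) return 0 ;;
    *) echo "image '$image' has no tag" >&2; return 1 ;;
  esac
}
```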

To complete the Build-Deploy flow, configure the Build pipeline to trigger the Deploy pipeline and pass it the `SERVICE_NAME` and `IMAGE_FULL_NAME_PARAM` parameters by adding the following stage after a successful Docker image build:

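```diff
 stages {
     ...
+    stage('Trigger Deploy') {
+        steps {
+            build job: '<deploy-pipeline-name-here>', wait: false, parameters: [
+                string(name: 'SERVICE_NAME', value: "NetflixFrontend"),
+                string(name: 'IMAGE_FULL_NAME_PARAM', value: "$IMAGE_FULL_NAME")
+            ]
+        }
+    }
 }
```

Where `<deploy-pipeline-name-here>` is the name of your Deploy pipeline (`NetflixFrontendDeploy` if you followed our example).

Note that `$IMAGE_FULL_NAME` must be visible to this stage as an environment variable, for example by defining `IMAGE_FULL_NAME` in the `environment` block. A variable assigned inside an earlier `sh` step exists only in that step's shell and is gone once the step ends.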

Test your simple CI/CD pipeline end-to-end.

Use the `post` directive and the `docker system prune -a --force --filter "until=24h"` command to clean up the built Docker images and containers from the disk.

Jenkins does not clean the workspace by default after a build. Jenkins retains the contents of the workspace between builds to improve performance by avoiding the need to re-fetch and recreate the entire workspace each time a build runs.

Cleaning the workspace can help ensure that no artifacts from previous builds interfere with the current build.

Add a `stage('Clean Workspace')` stage to clean the workspace before or after a build.
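Jenkins provides a built-in `deleteDir()` step, and the Workspace Cleanup plugin adds `cleanWs()`; either is the usual way to do this from a stage or a `post` block. If you'd rather stay in shell, the sketch below (the `clean_workspace` helper is hypothetical) empties a workspace directory while keeping the directory itself. Keep in mind that Declarative pipelines check out the sources before the first stage, so cleaning at the start of the build would also delete the freshly checked-out code; cleaning after the build avoids that.

```shell
# Hypothetical helper: delete everything inside the given workspace directory
# (including dotfiles such as .git) but keep the directory itself.
clean_workspace() {
  local ws="$1"
  # Guard against wiping the wrong place if the path is empty, "/" or missing.
  if [ -z "$ws" ] || [ "$ws" = "/" ] || [ ! -d "$ws" ]; then
    echo "refusing to clean '${ws}'" >&2
    return 1
  fi
  find "$ws" -mindepth 1 -delete
}
```

In an `sh` step it would be called as `clean_workspace "$WORKSPACE"`.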